Last month, I had the opportunity to attend an online meetup hosted by the local Microsoft MVP Dileepa Rajapaksa from the Singapore .NET Developers Community, where I was introduced to Apidog.

During the session, Mohammad L. U. Tanjim, the Product Manager of Apidog, gave a detailed walkthrough of API-First design and how Apidog can be used for this approach.

Apidog helps us define, test, and document APIs in one place. Instead of manually writing Swagger docs and using a separate API testing tool, Apidog combines everything. This means frontend developers can get mock APIs instantly, while backend developers and QAs get clear API specs with automated testing support.

Hence, for the customised headless APIs, we will adopt an API-First design approach. This approach ensures clarity, consistency, and efficient collaboration between backend and frontend teams while reducing future rework.

API-First Design Approach

By designing APIs upfront, we reduce the likelihood of frequent changes that disrupt development. It also ensures consistent API behaviour and better long-term maintainability.

With a well-defined API specification, our frontend team can begin working with mock APIs, enabling parallel development. This removes the dependency where frontend work is blocked until the backend is complete.

For the QA team, the API spec is important because it serves as a reference for automated testing. QA engineers can validate the expected API responses before the implementation is complete.

API Design Journey

In this article, we will embark on an API Design Journey by transforming a traditional travel agency in Singapore into an API-first system. To achieve this, we will use Apidog for API design and testing, and Orchard Core as a CMS to manage travel package information. Along the way, we will explore different considerations in API design, documentation, and integration to create a system that is both practical and scalable.

Many traditional travel agencies in Singapore still rely on manual processes. They store travel package details in spreadsheets, printed brochures, or even handwritten notes. This makes it challenging to update, search, and distribute information efficiently.

By introducing a headless CMS like Orchard Core, we can centralise travel package management while allowing different clients like mobile apps to access the data through APIs. This approach not only modernises the operations in the travel agency but also enables seamless integration with other systems.

API Design Journey 01: The Design Phase

Now that we understand the challenges of managing travel packages manually, we will build the API with Orchard Core to enable seamless access to travel package data.

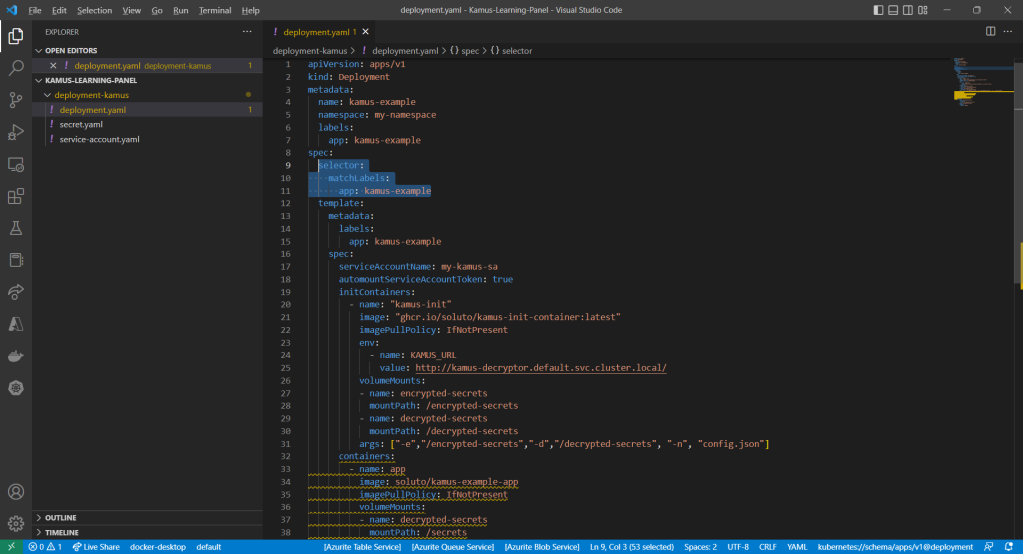

Instead of jumping straight into coding, we will first focus on the design phase, ensuring that our API meets the business requirements. At this stage, we focus on designing endpoints, such as GET /api/v1/packages, to manage the travel packages. We also plan how we will structure the response.

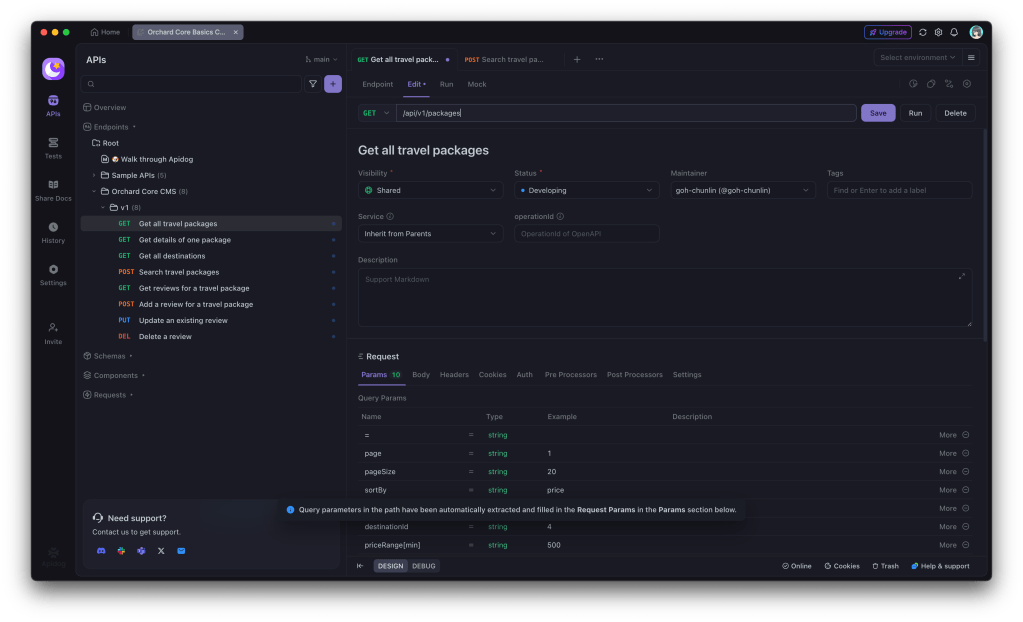

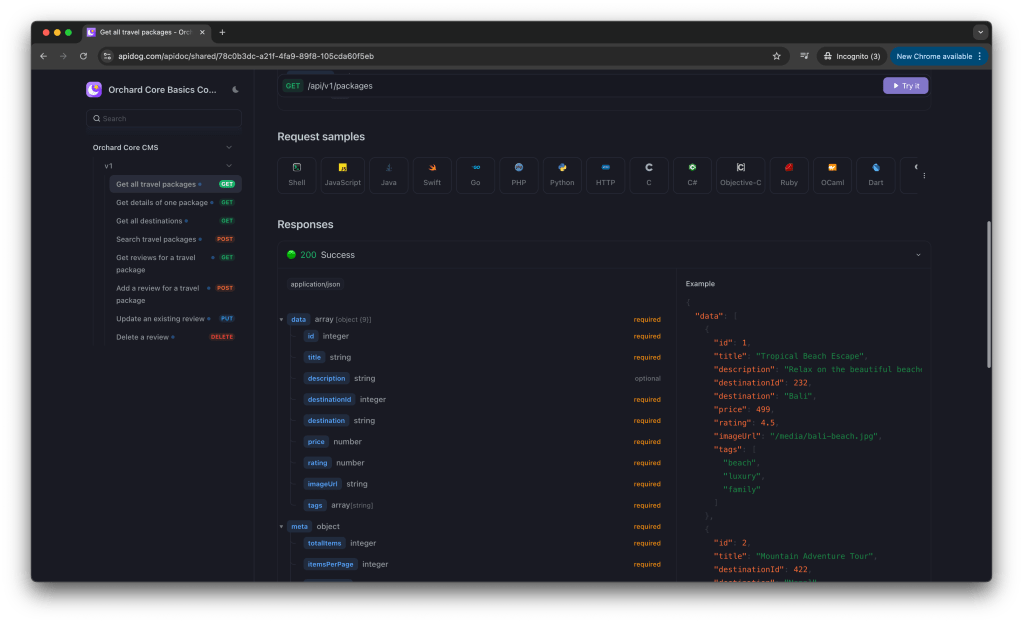

Given the scope and complexity of a full travel package CMS, this article will focus on designing a subset of API endpoints, as shown in the screenshot below. This allows us to highlight essential design principles and approaches that can be applied across the entire API journey with Apidog.

For the first endpoint “Get all travel packages”, we design it with the following query parameters to support flexible and efficient result filtering, pagination, sorting, and text search. This approach ensures that users can easily retrieve and navigate through travel packages based on their specific needs and preferences.

GET /api/v1/packages?page=1&pageSize=20&sortBy=price&sortOrder=asc&destinationId=4&priceRange[min]=500&priceRange[max]=2000&rating=4&searchTerm=spa
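To make the parameter layout concrete, here is a minimal Python sketch that assembles the same request URL from a dictionary of filters. The helper name `build_packages_url` is hypothetical, and flattening nested filters into bracketed keys is simply one way to reproduce the `priceRange[min]` style used above.

```python
from urllib.parse import urlencode

BASE_PATH = "/api/v1/packages"


def build_packages_url(params: dict) -> str:
    """Flatten nested filters (e.g. priceRange) into bracketed keys and encode them."""
    flat = []
    for key, value in params.items():
        if isinstance(value, dict):
            # {"priceRange": {"min": 500}} becomes ("priceRange[min]", 500)
            flat.extend((f"{key}[{sub}]", v) for sub, v in value.items())
        else:
            flat.append((key, value))
    # safe="[]" keeps the brackets readable instead of percent-encoding them
    return f"{BASE_PATH}?{urlencode(flat, safe='[]')}"


url = build_packages_url({
    "page": 1,
    "pageSize": 20,
    "sortBy": "price",
    "sortOrder": "asc",
    "destinationId": 4,
    "priceRange": {"min": 500, "max": 2000},
    "rating": 4,
    "searchTerm": "spa",
})
print(url)
```

Keeping the filters in a dictionary makes it easy for a client to add or drop individual parameters without hand-editing the query string.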

As with the request section, the response schema can also be generated from a sample JSON that we expect the endpoint to return, as shown in the following screenshot.

In the screenshot above, the field “description” is marked as optional because it is the only property that does not appear in every entry in “data”.
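The rule described here (a field is required only if every sample entry contains it) can be illustrated with a short Python sketch. This is not Apidog's internal logic, only an illustration of the idea; the function name `infer_fields` and the sample entries are hypothetical.

```python
def infer_fields(entries):
    """Return (required, optional) field names for a list of sample objects."""
    if not entries:
        return set(), set()
    key_sets = [set(entry) for entry in entries]
    required = set.intersection(*key_sets)       # present in every entry
    optional = set.union(*key_sets) - required   # missing from at least one entry
    return required, optional


# Hypothetical sample entries, standing in for the "data" array
sample_data = [
    {"id": 1, "name": "Bali Getaway", "price": 899.0, "description": "Beach resort stay"},
    {"id": 2, "name": "Kyoto Autumn", "price": 1499.0},
]

required, optional = infer_fields(sample_data)
print(sorted(required))
print(sorted(optional))
```

Because the second entry has no “description”, that field ends up in the optional set while the others stay required.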

Besides the success status, we also need to define another important response, HTTP 400, which tells the client that something is wrong with their request.

The reason why we need HTTP 400 is that, instead of processing an invalid request and returning incorrect or unexpected results, our API should explicitly reject it, ensuring that the client knows what needs to be fixed. This improves both developer experience and API reliability.
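As a rough illustration of rejecting a bad request explicitly, the following Python sketch checks a few of the query parameters and returns a 400 with a list of problems. The specific limits (for example a maximum `pageSize` of 100) and the shape of the error body are assumptions made for this example, not part of the API spec in this article.

```python
def validate_query(params: dict):
    """Return (status_code, body) for a dict of raw query-string values."""
    errors = []

    def as_int(name, minimum, maximum=None):
        raw = params.get(name)
        if raw is None:
            return None  # parameter not supplied, nothing to check
        try:
            value = int(raw)
        except (TypeError, ValueError):
            errors.append(f"{name} must be an integer")
            return None
        if value < minimum or (maximum is not None and value > maximum):
            bound = (f"between {minimum} and {maximum}"
                     if maximum is not None else f"at least {minimum}")
            errors.append(f"{name} must be {bound}")
            return None
        return value

    as_int("page", 1)
    as_int("pageSize", 1, 100)   # assumed upper limit
    as_int("rating", 1, 5)
    low = as_int("priceRange[min]", 0)
    high = as_int("priceRange[max]", 0)
    if low is not None and high is not None and low > high:
        errors.append("priceRange[min] must not exceed priceRange[max]")
    if str(params.get("sortOrder", "asc")).lower() not in ("asc", "desc"):
        errors.append("sortOrder must be asc or desc")

    if errors:
        return 400, {"errors": errors}  # assumed error body shape
    return 200, {}


print(validate_query({"page": "1", "pageSize": "20", "sortOrder": "asc"}))
print(validate_query({"page": "0", "sortOrder": "East"}))
```

Collecting every problem before responding lets the client fix all of them in one round trip instead of discovering them one at a time.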

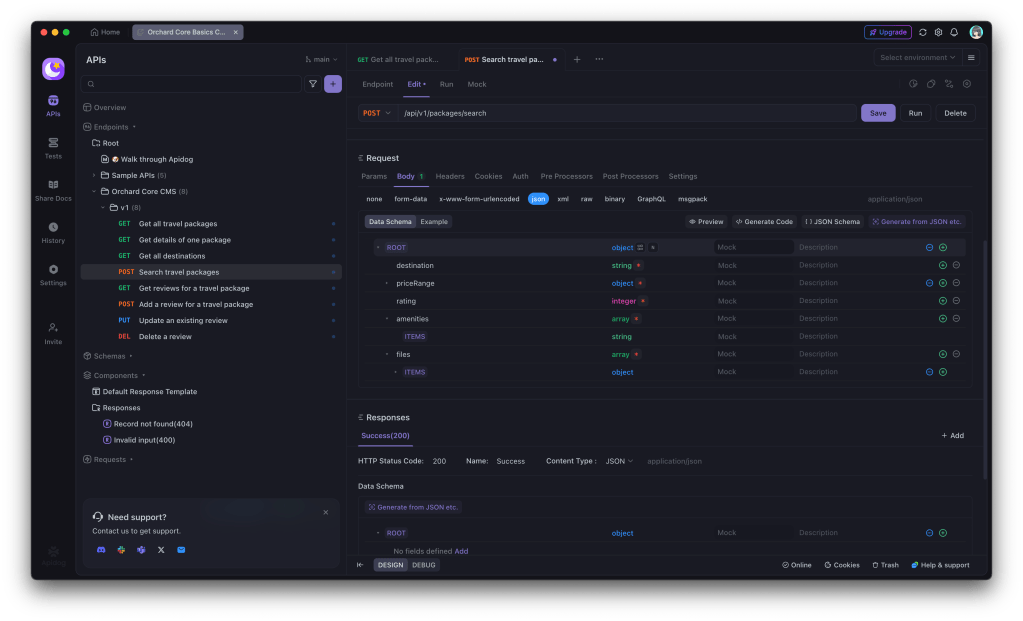

After completing the endpoint for getting all travel packages, we move on to a POST endpoint for searching travel packages.

While GET is the standard method for retrieving data from an API, complex search queries involving multiple parameters, filters, or file uploads might require the use of a POST request. This is particularly true when dealing with advanced search forms or large amounts of data, which cannot be easily represented as URL query parameters. In these cases, POST allows us to send the parameters in the body of the request, ensuring the URL remains manageable and avoiding URL length limits.

For example, let’s assume this POST endpoint allows us to search for travel packages with the following body.

    {
      "destination": "Singapore",
      "priceRange": {
        "min": 500,
        "max": 2000
      },
      "rating": 4,
      "amenities": ["pool", "spa"],
      "files": [
        {
          "fileType": "image",
          "file": "base64-encoded-image-content"
        }
      ]
    }

We can also easily generate the data schema for the body by pasting this JSON as an example into Apidog, as shown in the screenshot below.

When making an HTTP POST request, the client sends data to the server. While JSON in the request body is common, there is also another format used in APIs, i.e. multipart/form-data (also known as form-data).

Form-data is used when the request body contains files, images, or binary data along with text fields. So, if our endpoint /api/v1/packages/{id}/reviews allows users to submit both text (review content and rating) and an image, form-data is a good fit, as demonstrated in the following screenshot.
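To show what such a request body looks like on the wire, here is a minimal Python sketch that hand-builds a multipart/form-data payload with two text fields and one file. The field names (`rating`, `content`, `image`) and the helper `encode_multipart` are assumptions for illustration; in practice an HTTP client library would normally build this for us.

```python
import uuid


def encode_multipart(fields: dict, files: dict):
    """Return (body_bytes, content_type_header) for text fields plus file parts."""
    boundary = uuid.uuid4().hex
    lines = []
    for name, value in fields.items():
        lines += [
            f"--{boundary}".encode(),
            f'Content-Disposition: form-data; name="{name}"'.encode(),
            b"",
            str(value).encode("utf-8"),
        ]
    for name, (filename, content_type, data) in files.items():
        lines += [
            f"--{boundary}".encode(),
            f'Content-Disposition: form-data; name="{name}"; filename="{filename}"'.encode(),
            f"Content-Type: {content_type}".encode(),
            b"",
            data,  # raw bytes, no base64 needed
        ]
    lines += [f"--{boundary}--".encode(), b""]  # closing boundary
    return b"\r\n".join(lines), f"multipart/form-data; boundary={boundary}"


body, content_type = encode_multipart(
    {"rating": 5, "content": "Great trip"},
    {"image": ("photo.png", "image/png", b"\x89PNG")},  # placeholder bytes
)
print(content_type)
print(len(body), "bytes")
```

Unlike the JSON example earlier, the image travels as raw bytes in its own part, so there is no need to base64-encode it into a string field.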

API Design Journey 02: Prototyping with Mockups

When designing the API, it is common to debate, for example, whether reviews should be nested inside packages or treated as a separate resource. By using Apidog, we can quickly create mock APIs for both versions and test how they would work in different use cases. This helps us make a data-driven decision instead of having endless discussions.

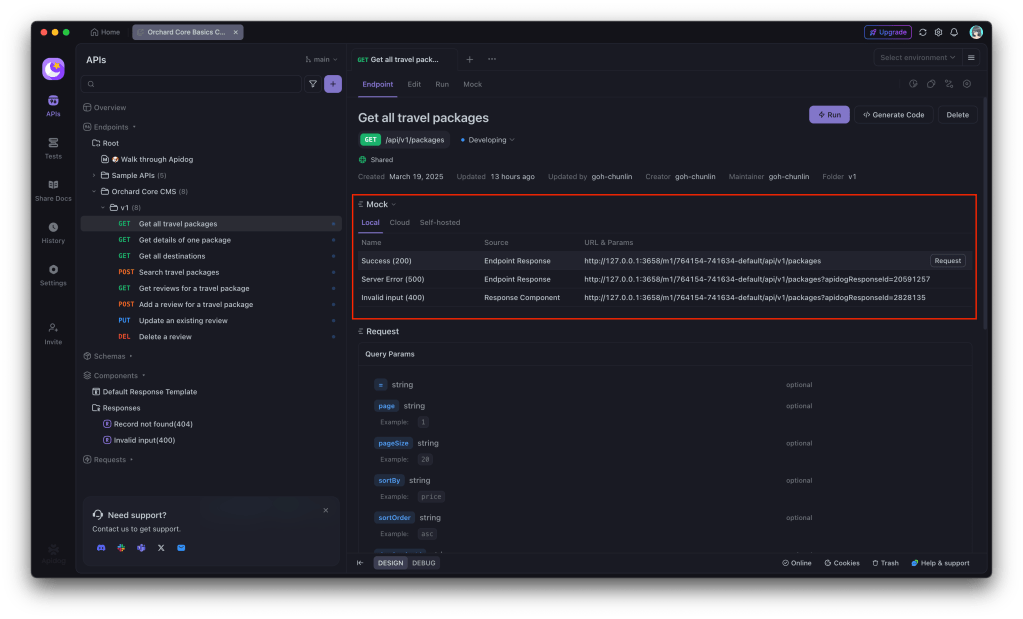

Once our endpoint is created, Apidog automatically generates a mock API based on our defined API spec, as shown in the following screenshot.

Clicking on the “Request” button next to each of the mock API URLs will bring us to the corresponding mock response, as shown in the following screenshot.

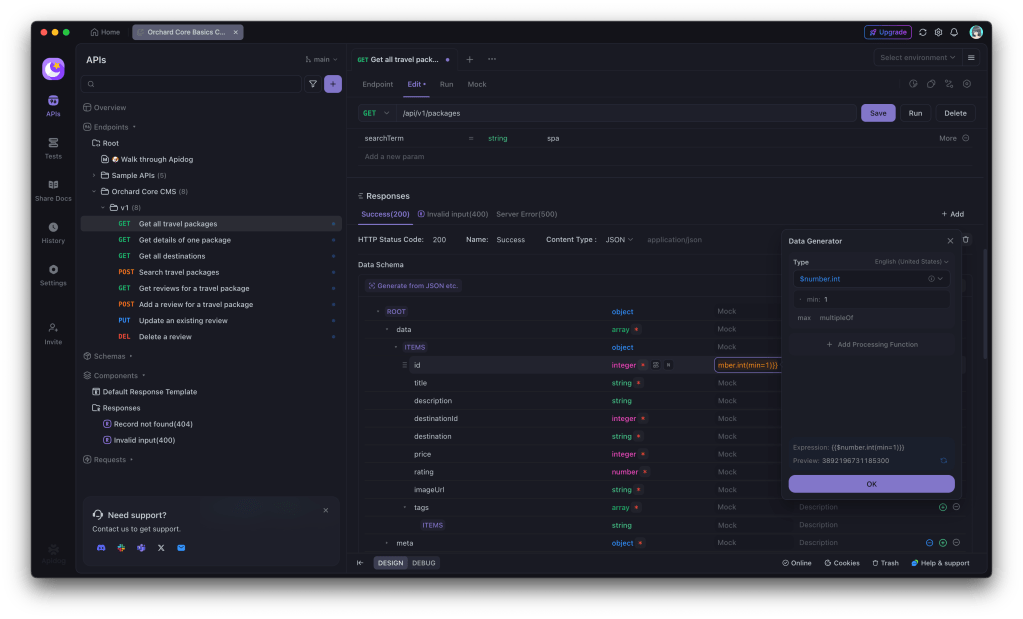

As shown in the screenshot above, some values in the mock response do not make sense: for example, a negative id and destinationId, a rating outside the expected range of 1 to 5, “East” as the sorting direction, and so on. How can we fix them?

Firstly, we will set the id (and destinationId) to be any positive integer, starting from 1.

Secondly, we update both the price and the rating to be floats. In the following screenshot, we specify that the rating can be any float from 1.0 to 5.0 with a single fractional digit.

Finally, we will indicate that the sorting direction can only be either ASC or DESC, as shown in the following screenshot.
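The three constraints above can be summarised in a small Python sketch that generates mock values obeying them. This only mirrors the rules we configured and is not how Apidog generates data internally; the upper bounds for the ids and the price range are assumptions chosen for the example.

```python
import random

SORT_ORDERS = ["ASC", "DESC"]


def mock_package(rng: random.Random) -> dict:
    """Generate one mock travel package that respects the configured constraints."""
    return {
        "id": rng.randint(1, 10_000),             # positive integer, starting from 1
        "destinationId": rng.randint(1, 10_000),  # same rule as id
        "price": round(rng.uniform(100.0, 5000.0), 2),  # float (assumed range)
        "rating": rng.randint(10, 50) / 10,       # 1.0 to 5.0, one fractional digit
    }


def mock_sort_order(rng: random.Random) -> str:
    """Pick one of the allowed sorting directions."""
    return rng.choice(SORT_ORDERS)


rng = random.Random(42)  # seeded so repeated runs produce the same mock data
print(mock_package(rng))
print(mock_sort_order(rng))
```

Drawing the rating as an integer between 10 and 50 and dividing by 10 is a simple way to guarantee exactly one fractional digit.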

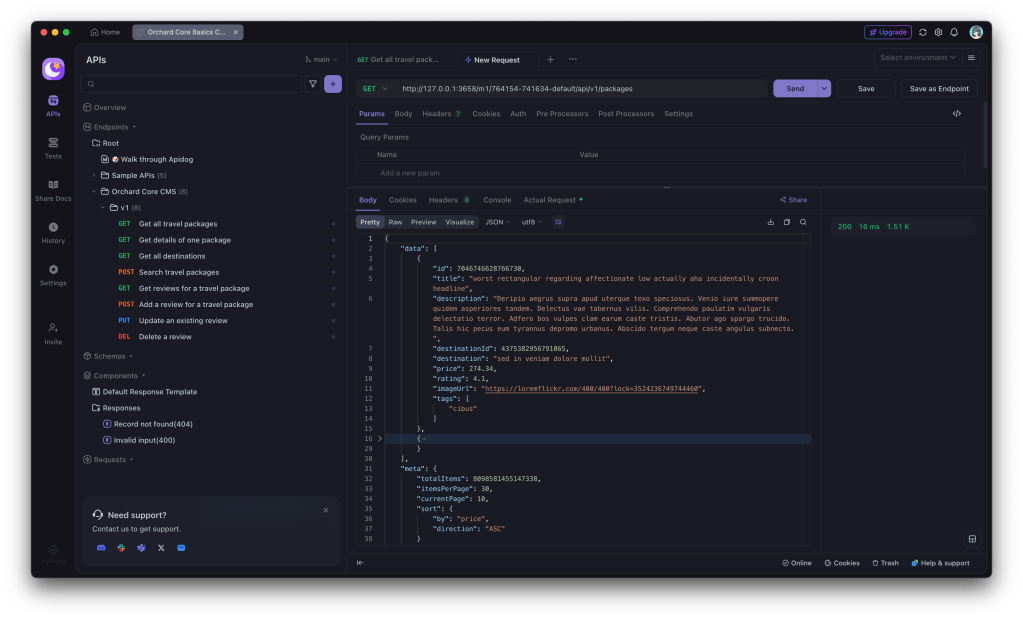

With the mock values configured, if we fetch the mock response again, we should get a response with more reasonable values, as demonstrated in the screenshot below.

With the mock APIs, our frontend developers will be able to start building UI components without waiting for the backend to be completed. Also, as shown above, a mock API responds instantly, unlike real APIs that depend on database queries, authentication, or network latency. This makes UI development and unit testing faster.

Speaking of testing, some test cases are difficult to create with a real API. For example, what if an API returns an error (500 Internal Server Error)? What if there are thousands of travel packages? With a mock API, we can control the responses and simulate rare cases easily.

In addition, Apidog supports returning different mock data based on different request parameters. This makes the mock API more realistic and useful for developers: if the mock API only returns static data, frontend developers may test just one scenario, whereas a dynamic mock API allows them to test various edge cases.

For example, our travel package API allows admins to see all packages, including unpublished ones, while regular users only see public packages. We can thus set up the mock so that different bearer tokens return different sets of mock data.

With the Mock Expectation feature, Apidog can return custom responses based on request parameters as well. For instance, it can return normal packages when the destinationId is 1 and trigger an error when the destinationId is 2.

API Design Journey 03: Documenting Phase

With the endpoints properly designed in the earlier two phases, we can now proceed to create documentation that offers a detailed explanation of the endpoints in our API. This documentation will include information such as HTTP methods, request parameters, and response formats.

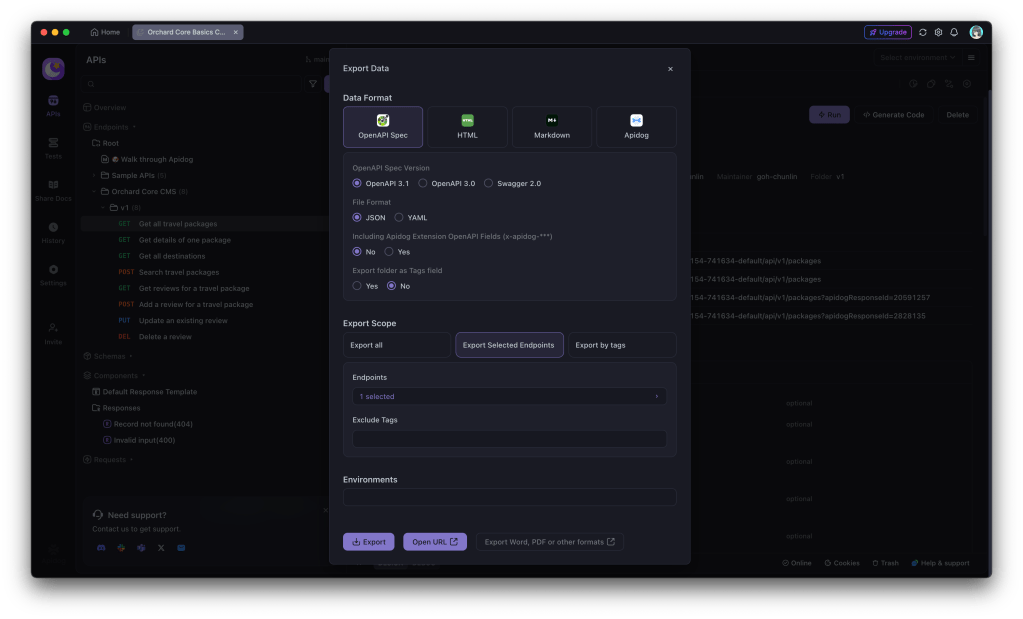

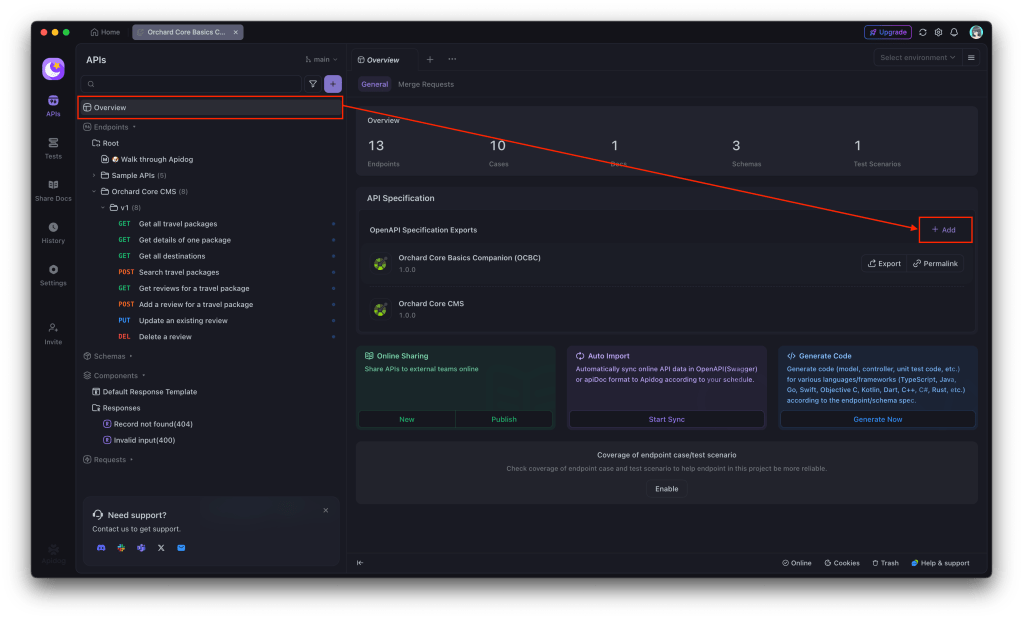

Fortunately, Apidog makes the documentation process smooth by integrating well within the API ecosystem. It also makes sharing easy, letting us export the documentation in formats like OpenAPI, HTML, and Markdown.

We can also export our documentation to the OpenAPI Specification on a per-folder basis in Overview, as shown below.

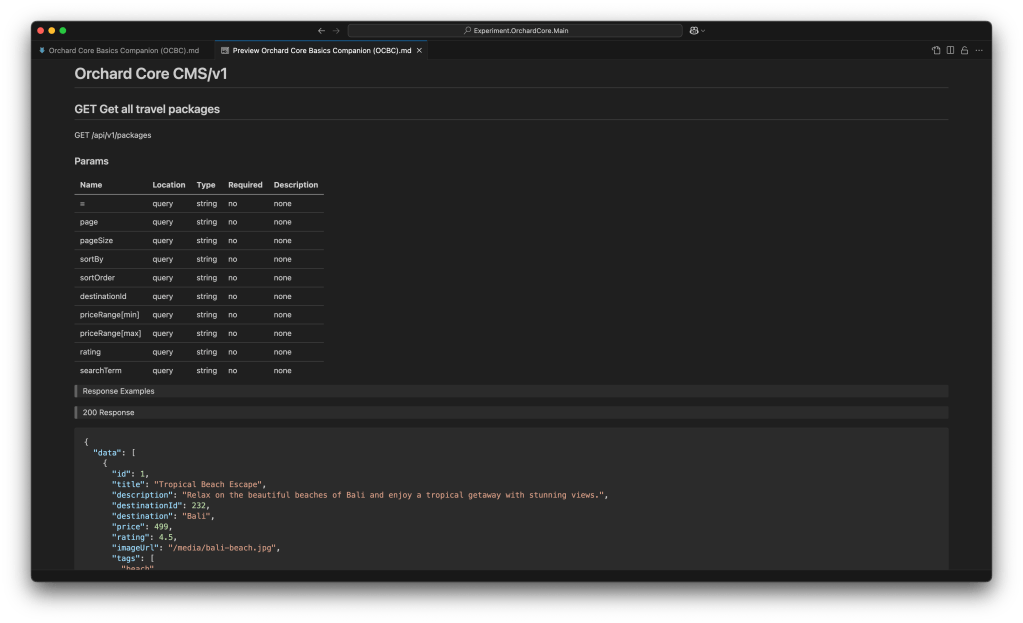

We can also export the data as an offline document. Just click on the “Open URL” or “Permalink” button to view the raw JSON/YAML content directly in the browser. We can then paste the raw content into the Swagger Editor to view the Swagger UI of our API, as demonstrated in the following screenshot.

Let’s say now we need to share the documentation with our team, stakeholders, or even the public. Our documentation thus needs to be accessible and easy to navigate. That is where exporting to HTML or Markdown comes in handy.

Finally, Apidog also allows us to conveniently publish our API documentation as a webpage. There are two options: Quick Share, for sharing parts of the docs with collaborators, and Publish Docs, for making the full documentation publicly available.

Quick Share is great for API collaborators because we can set a password for access and define an expiration time for the shared documentation. If no expiration is set, the link stays active indefinitely.

API Design Journey 04: The Development Phase

With our API fully designed, mocked, and documented, it is time to bring it to life with actual code. Since we have already defined information such as the endpoints and the request and response formats, implementation becomes much more straightforward. Now, let’s start building the backend to match our API specifications.

Orchard Core generally supports two main approaches for designing APIs, i.e. Headless and Decoupled.

In the headless approach, Orchard Core acts purely as a backend CMS, exposing content via APIs without a frontend. The frontend is built separately.

In the decoupled approach, Orchard Core still provides APIs like in the headless approach, but it also serves some frontend rendering. It is a hybrid approach: some parts of the UI are rendered by Orchard Core using Razor Pages, while others rely on APIs.

In fact, we can combine the best of both approaches to build customised headless APIs on Orchard Core, using services such as IOrchardHelper to fetch content dynamically and IContentManager to perform full CRUD operations on content items. This is the approach mentioned in the Orchard Core Basics Companion (OCBC) documentation.

For the endpoint of getting a list of travel packages, i.e. /api/v1/packages, we can define it as follows.

    using Microsoft.AspNetCore.Mvc;
    using OrchardCore;

    [ApiController]
    [Route("api/v1/packages")]
    public class PackageController(
        IOrchardHelper orchard,
        ...) : Controller
    {
        // GET /api/v1/packages
        [HttpGet]
        public async Task<IActionResult> GetTravelPackages()
        {
            // Query the content items whose content type is "TravelPackage".
            var travelPackages = await orchard.QueryContentItemsAsync(q =>
                q.Where(c => c.ContentType == "TravelPackage"));

            ...

            return Ok(travelPackages);
        }

        ...
    }

In the code above, we are using Orchard Core as a headless CMS and leveraging IOrchardHelper to query content items of type “TravelPackage”. We then expose a REST API (GET /api/v1/packages) that returns all travel packages stored as content items in the Orchard Core CMS.

API Design Journey 05: Testing of Actual Implementation

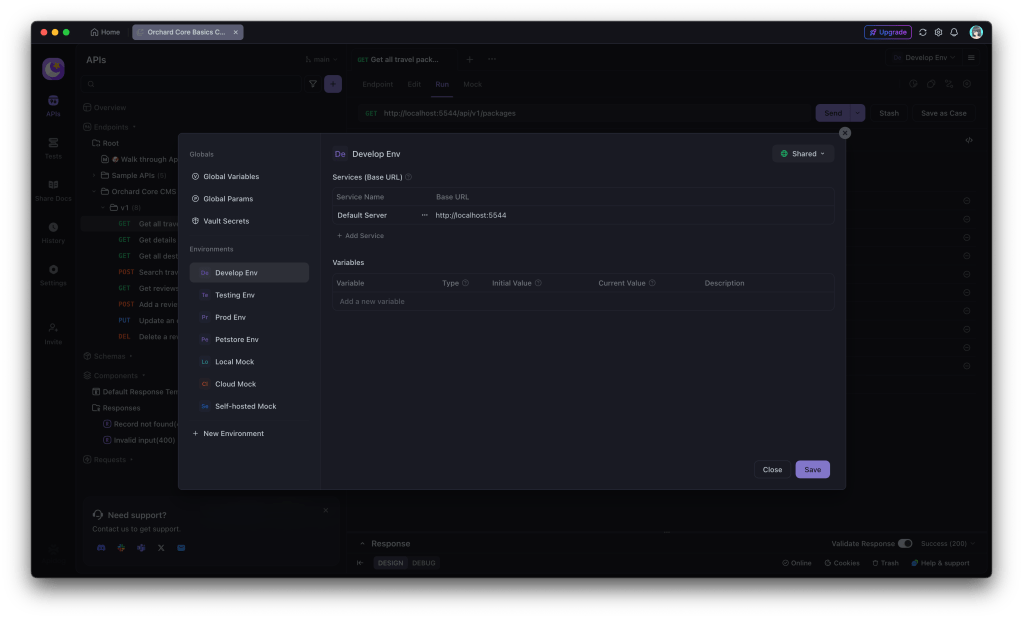

Let’s assume our Dev Server Base URL is localhost. This URL is set as a variable in the Develop Env, as shown in the screenshot below.

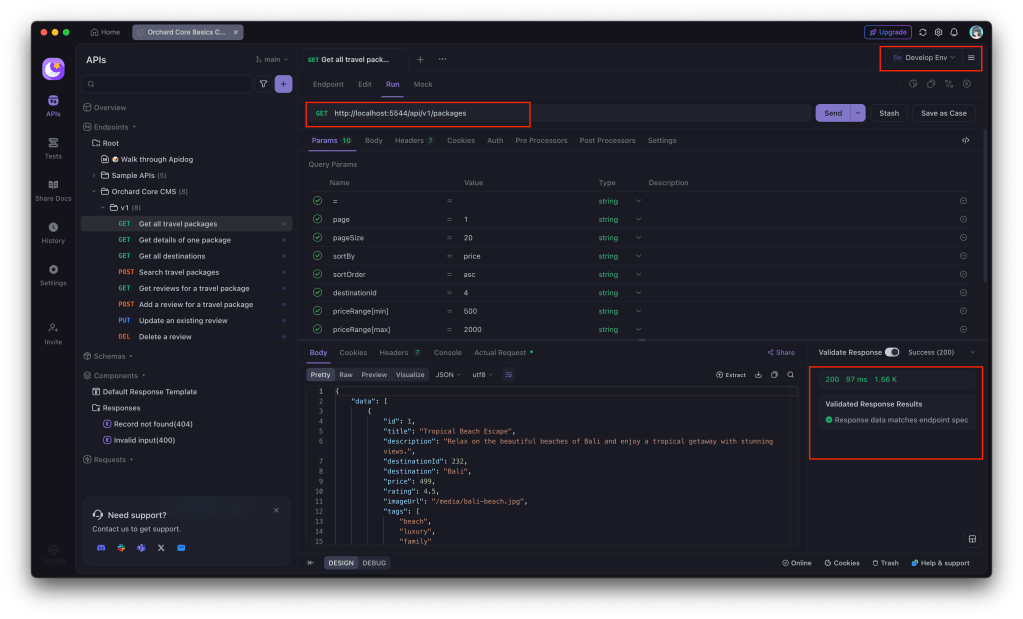

With the environment set up, we can now proceed to run our endpoint under that environment. As shown in the following screenshot, we are able to immediately validate the implementation of our endpoint.

The screenshot above shows that through API Validation Testing, the implementation of that endpoint has met all expected requirements.

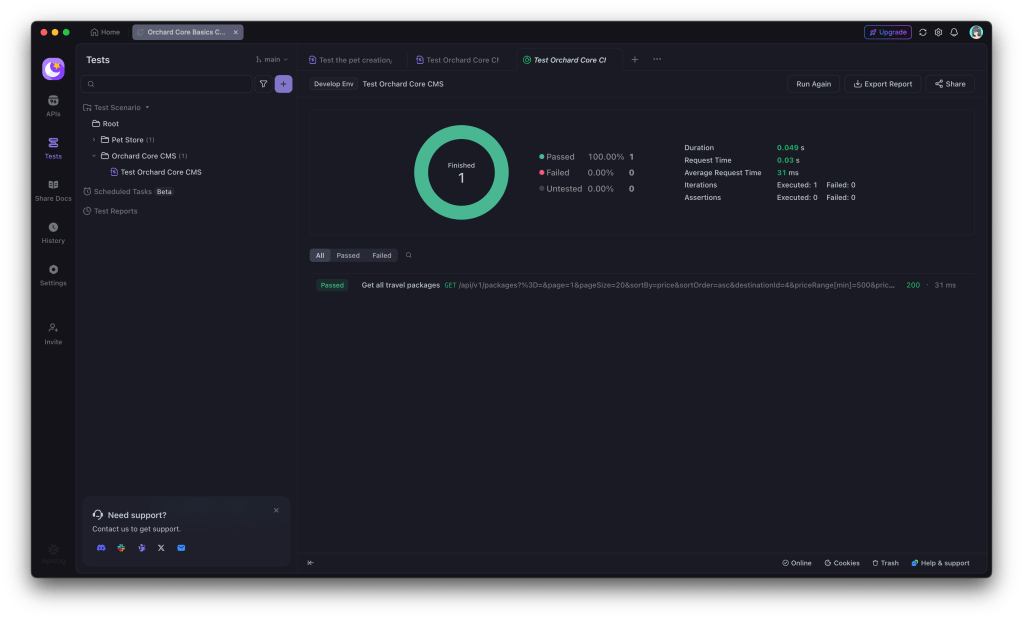

API validation tests are not just for simple checks. The feature is great for handling complex, multi-step API workflows too. With them, we can chain multiple requests together, simulate real-world scenarios, and even run the same requests with different test data. This makes it easier to catch issues early and keep our API running smoothly.
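The idea of chaining requests can be sketched in Python as follows: the first step lists the packages, and each later step reuses an id taken from that response. The in-memory `fake_api`, its sample data, and the detail route `/api/v1/packages/{id}` are hypothetical stand-ins so the example runs without a server; a real scenario would run in Apidog against the actual endpoints.

```python
def fake_api(method: str, path: str):
    """In-memory stand-in for the real server (hypothetical data and routes)."""
    packages = {
        1: {"id": 1, "name": "Bali Getaway"},
        2: {"id": 2, "name": "Kyoto Autumn"},
    }
    if (method, path) == ("GET", "/api/v1/packages"):
        return 200, {"data": list(packages.values())}
    prefix = "/api/v1/packages/"
    if method == "GET" and path.startswith(prefix) and path[len(prefix):].isdigit():
        package = packages.get(int(path[len(prefix):]))
        return (200, package) if package else (404, {"error": "Not found"})
    return 404, {"error": "Not found"}


def run_scenario(api):
    """Chain two steps: list the packages, then fetch each one by its id."""
    results = []
    status, body = api("GET", "/api/v1/packages")
    results.append(("list packages", status == 200))
    for item in body.get("data", []):
        # The id comes from the previous response, not from hard-coded test data
        status, detail = api("GET", f"/api/v1/packages/{item['id']}")
        passed = status == 200 and detail.get("id") == item["id"]
        results.append((f"get package {item['id']}", passed))
    return results


for name, passed in run_scenario(fake_api):
    print(f"{'PASS' if passed else 'FAIL'}: {name}")
```

Passing the API as a function argument means the same scenario could be pointed at a mock or at a real server without changing the steps.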

In addition, we can set up Scheduled Tasks, a feature currently in Beta, to automatically run our test scenarios at specific times. This helps us monitor API performance, catch issues early, and ensure everything works as expected. Plus, we can review the execution results to stay on top of any failures.

Wrap-Up

Throughout this article, we have walked through the process of designing, mocking, documenting, implementing, and testing a headless API in Orchard Core using Apidog. By following an API-first approach, we ensure that our API is well-structured, easy to maintain, and developer-friendly.

With this approach, teams can collaborate more effectively and reduce friction in development. Now that the foundation is set, the next step could be integrating this API into a frontend app, optimising our API performance, or automating even more tests.

Finally, with .NET 9 moving away from built-in Swagger UI, developers now have to find alternatives to set up API documentation. As we can see, Apidog offers a powerful alternative, because it combines API design, testing, and documentation in one tool. It simplifies collaboration while ensuring a smooth API-first design approach.