In the realm of software development and business practices, not automating processes when it could bring significant benefits will normally be considered a missed opportunity or an inefficient use of resources. It could lead to wasted time, increased chances of errors, and reduced productivity.

Background Story

My teammate ran into a strange issue: a third-party core component in the system, which runs as a Windows service, would stop at random. The service listens on a certain TCP port, and whenever the service was down, a telnet connection to that port would fail.

After weeks of intensive log investigation, my teammate still could not figure out the reason why it would stop working. However, a glimmer of insight emerged: restarting the Windows service would consistently bring the component back online.

Hence, his solution was to create an alert system that would email him and the team to restart the Windows service whenever it went down. The alert system was basically a scheduled job that checked the health of the TCP port the service listens on.

Since my teammate was one of the few people who could log in to the server, he had to be on standby during weekends too, just to restart the Windows service. Not long after that, he submitted his resignation and left the company. Other teammates thus had to take over the task of manually restarting the Windows service.

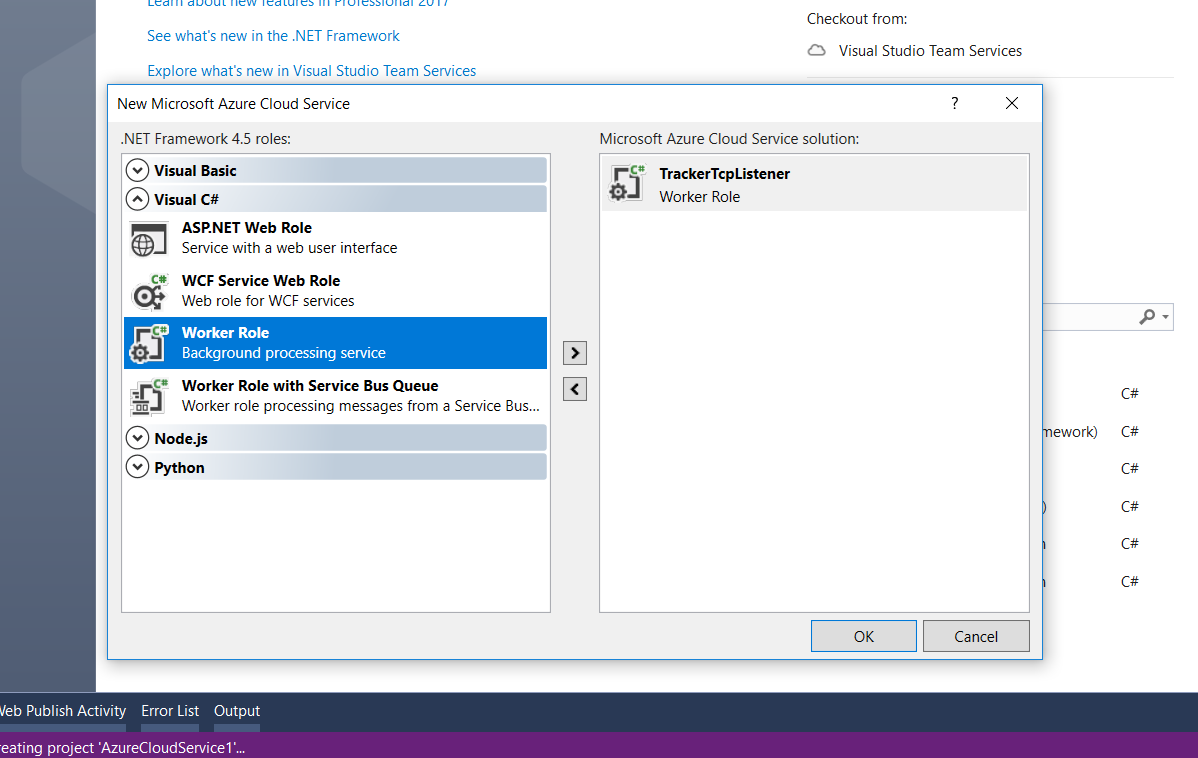

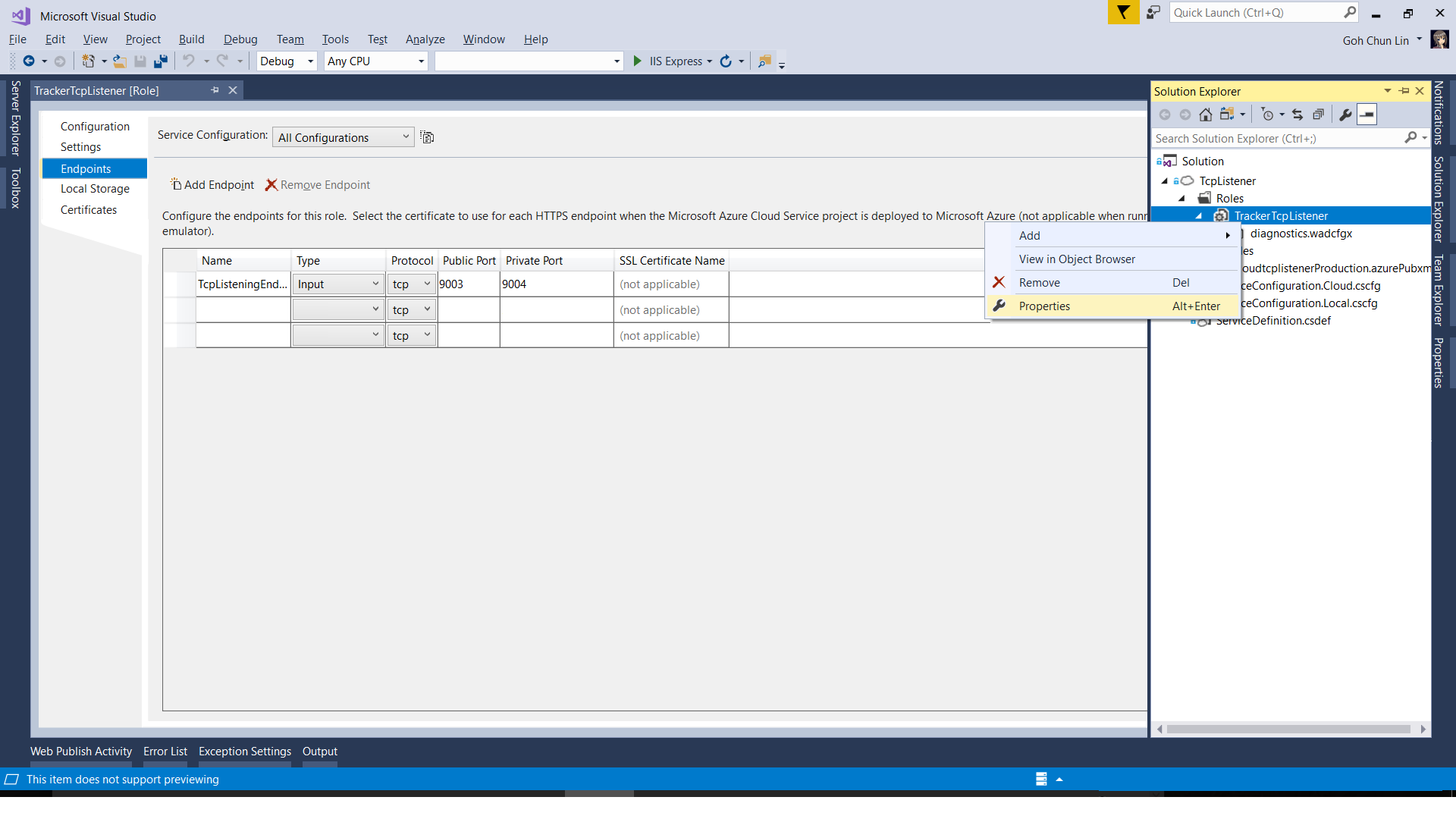

Auto Restart Windows Service with C#

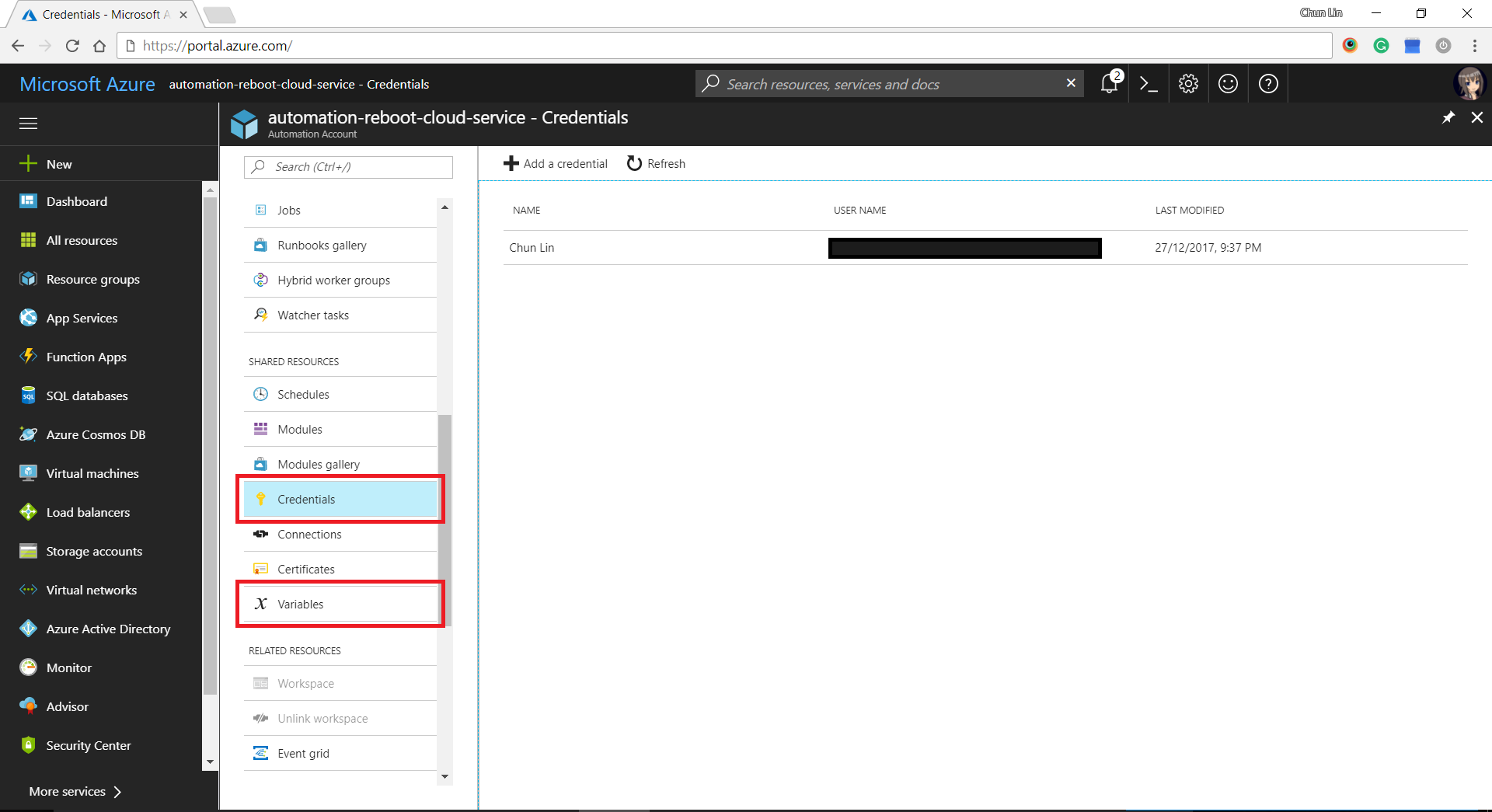

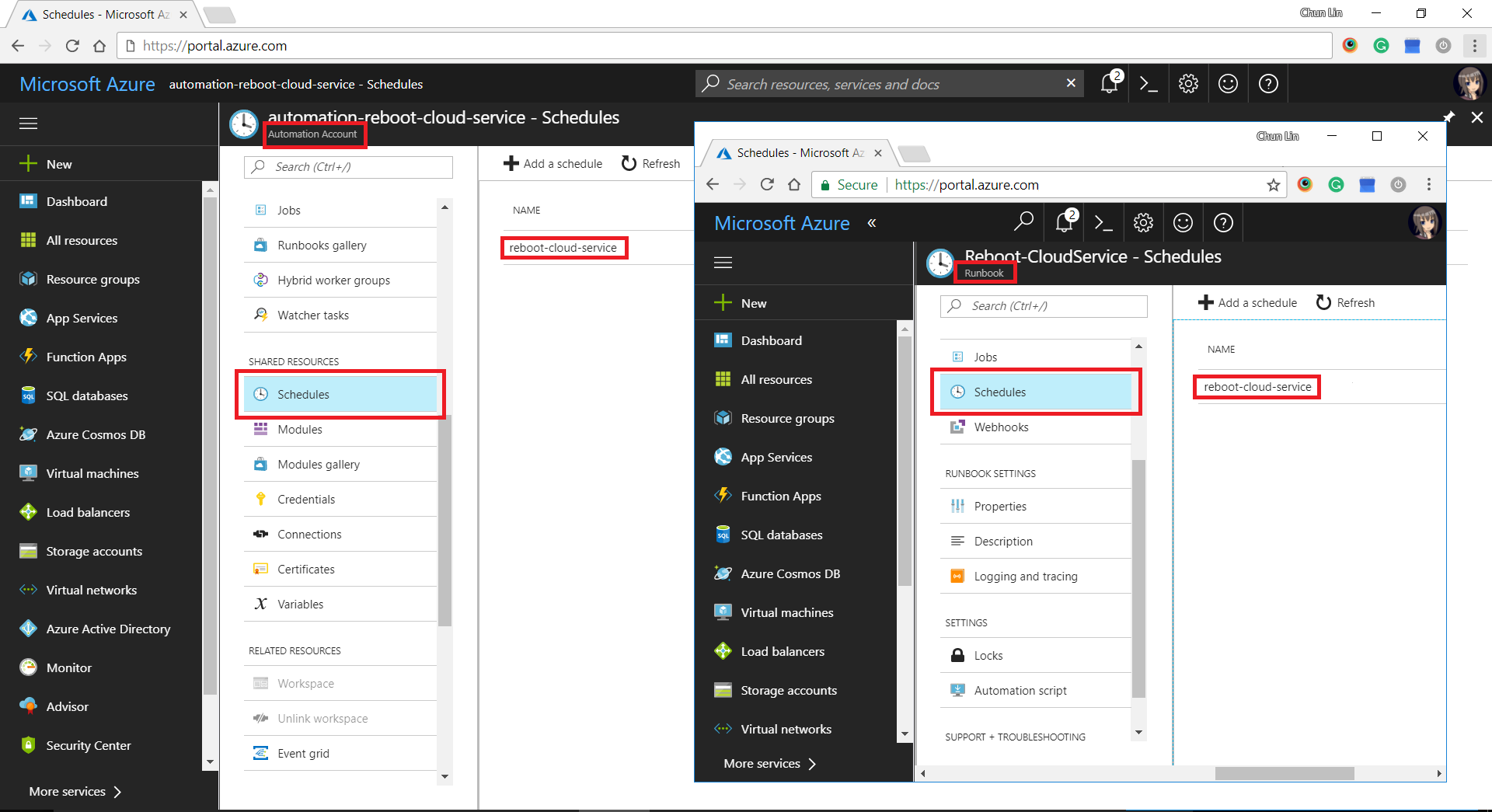

To keep teammates from burning out from manually restarting the Windows service so often, even at night and during weekends, I decided to develop a C# programme that runs every 10 minutes on the server. The programme tries to connect to the port that the Windows service listens on to check whether the service is running. If it is not, the programme restarts it.

The code is as follows.

    using System;
    using System.Net.Sockets;
    using System.ServiceProcess;

    // serverIpAddress, port and targetService are assumed to be defined elsewhere.
    try
    {
        // If the TCP connection succeeds, the service is still listening.
        using (TcpClient tcpClient = new())
        {
            tcpClient.Connect(serverIpAddress, port);
        }

        Console.WriteLine("No issue...");
    }
    catch (Exception)
    {
        // The connection failed, so restart the service within a 2-minute budget.
        int timeoutMilliseconds = 120000;
        ServiceController service = new(targetService);

        try
        {
            Console.WriteLine("Restarting...");
            int millisec1 = Environment.TickCount;
            TimeSpan timeout = TimeSpan.FromMilliseconds(timeoutMilliseconds);

            if (service.Status != ServiceControllerStatus.Stopped)
            {
                Console.WriteLine("Stopping...");
                service.Stop();
                service.WaitForStatus(ServiceControllerStatus.Stopped, timeout);
            }

            Console.WriteLine("Stopped!");

            // Spend only what is left of the budget on waiting for the start.
            int millisec2 = Environment.TickCount;
            timeout = TimeSpan.FromMilliseconds(timeoutMilliseconds - (millisec2 - millisec1));

            Console.WriteLine("Starting...");
            service.Start();
            service.WaitForStatus(ServiceControllerStatus.Running, timeout);

            Console.WriteLine("Restarted!");
        }
        catch (Exception ex)
        {
            // Covers a wait that timed out as well as a failed stop or start.
            Console.WriteLine(ex.Message);
        }
    }

In the programme above, we implement an overall timeout of 2 minutes. Whatever time is left after waiting for the Windows service to stop is used as the timeout for waiting for the service to return to the Running status.
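To make the budget arithmetic concrete, here is a small sketch written in PowerShell, to match the scripts later in this post. Get-RemainingTimeout is a hypothetical helper and not part of the programme above. Unlike the C# code, it also clamps the result at zero, which is my own assumption about how a slow stop should be handled.

```powershell
# Hypothetical helper: how much of a shared timeout budget is left
# after part of it has been spent (for example, on stopping the service).
function Get-RemainingTimeout {
    param(
        [int]$TotalMilliseconds,    # the whole budget, e.g. 120000 for 2 minutes
        [int]$ElapsedMilliseconds   # time already used by the first wait
    )
    # Clamp at zero so a slow stop never produces a negative timeout.
    $remaining = [Math]::Max(0, $TotalMilliseconds - $ElapsedMilliseconds)
    return [TimeSpan]::FromMilliseconds($remaining)
}

# If stopping the service took 45 seconds, 75 seconds remain for the start.
$left = Get-RemainingTimeout -TotalMilliseconds 120000 -ElapsedMilliseconds 45000
$left.TotalSeconds   # 75
```

So if stopping the service uses 45 seconds of the 2 minutes, the wait for the Running status gets the remaining 75 seconds.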

After the team deployed this programme as a scheduled job, no one has had to wake up at midnight just to log in to the server and restart the Windows service.

Converting Comma-Delimited CSV to Tab-Delimited CSV

Soon, we realised there was another issue. The input files sent to the Windows service for processing had invalid content: the service expects tab-delimited CSV files, but the actual content was comma-delimited. The problem had been there since last year, so hundreds of files had not been processed.

To save my teammate's time, I wrote a PowerShell script to do the conversion.

    Get-ChildItem "<directory contains the files>" -Filter *.csv |
    ForEach-Object {
        # Add a temporary header, then export as tab-delimited.
        Import-Csv -Path $_.FullName -Header 1,2,3,4,5,6,7,8,9 |
            Export-Csv -Path ('<output directory>' + $_.BaseName + '_out.tmp') -Delimiter "`t" -NoTypeInformation
        # Strip the quotation marks and skip the temporary header line.
        Get-Content ('<output directory>' + $_.BaseName + '_out.tmp') |
            ForEach-Object { $_ -replace '"', '' } | Select-Object -Skip 1 |
            Out-File -FilePath ('<output directory>' + $_.BaseName + '.csv')
        Remove-Item ('<output directory>' + $_.BaseName + '_out.tmp')
    }

The CSV files have no header row, and they all have 9 columns. That is why I use “-Header 1,2,3,4,5,6,7,8,9” to add a temporary header. Otherwise, Import-Csv treats the first line of the file as the header, which means it will fail if multiple columns in the first line have the same value. The temporary header therefore needs unique column names.
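To see the effect of the temporary header in isolation, here is a minimal sketch. The sample file, its three columns, and its values are made up for illustration; the real files have 9 columns.

```powershell
# Create a small headerless sample file whose first row repeats a value.
$sample = Join-Path ([System.IO.Path]::GetTempPath()) 'header-demo.csv'
Set-Content -Path $sample -Value @('a,a,b', 'x,y,z')

# With -Header, every line (including the first) is treated as data.
$rows = @(Import-Csv -Path $sample -Header 1,2,3)
$rows.Count      # 2
$rows[0].'1'     # a

Remove-Item $sample
```

Both lines come back as data rows, and the repeated value in the first line causes no problem because the column names come from -Header instead.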

When using Export-Csv, all fields in the CSV are enclosed in quotation marks. Hence, we need to remove the quotation marks and remove the temporary header before we generate a tab-delimited CSV file as the output.
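One caveat of removing every quotation mark is that it would also strip quotes that belong to the data itself. As an alternative, here is a minimal sketch that assumes PowerShell 7 or later, where ConvertTo-Csv accepts -UseQuotes Never. It is not the script we actually ran, and the sample file and its three columns are made up for illustration.

```powershell
# Assumes PowerShell 7 or later, where -UseQuotes is available.
$inputFile = Join-Path ([System.IO.Path]::GetTempPath()) 'quotes-demo.csv'
Set-Content -Path $inputFile -Value @('a,b,c', 'd,e,f')

# Convert in memory: no quoting, tab as the delimiter, temporary header dropped.
$lines = Import-Csv -Path $inputFile -Header 1,2,3 |
    ConvertTo-Csv -Delimiter "`t" -UseQuotes Never |
    Select-Object -Skip 1

$lines   # two tab-delimited lines without quotation marks
Remove-Item $inputFile
```

This avoids the temporary file and the text replacement, but with quoting turned off, a field that itself contains a tab would no longer be protected, so it only suits data like ours where that cannot happen.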

With this script, my teammate easily transformed all the files into the correct format in less than 5 minutes.

Searching File Content with PowerShell

A few days later, I found out that another teammate was reading log files manually to find the lines containing the keyword “access”. I was shocked, because there were hundreds of logs every day, which meant he would need to spend hours or even days on the task.

Hence, I wrote him another simple PowerShell script to do the job.

    Get-ChildItem "<directory contains the files>" -Filter *.log |
    ForEach-Object {
        # Print every line that matches "access" (-match is case-insensitive).
        Get-Content $_.FullName | ForEach-Object { if ($_ -match "access") { Write-Host $_ } }
    }

With this, my teammate could finally finish his task early.
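In hindsight, the built-in Select-String cmdlet can do the same search and also report which file and line each match came from. Here is a minimal sketch; the temporary directory and the sample log lines are made up for illustration.

```powershell
# Create two small sample logs in a temporary directory (illustrative data only).
$dir = Join-Path ([System.IO.Path]::GetTempPath()) 'log-search-demo'
New-Item -ItemType Directory -Path $dir -Force | Out-Null
Set-Content -Path (Join-Path $dir 'a.log') -Value @('user login', 'Access denied')
Set-Content -Path (Join-Path $dir 'b.log') -Value @('disk check', 'file access ok')

# Select-String reports the file name and line number of each match.
$hits = Get-ChildItem $dir -Filter *.log | Select-String -Pattern 'access'
$hits | ForEach-Object { '{0}:{1}: {2}' -f $_.Filename, $_.LineNumber, $_.Line }

Remove-Item $dir -Recurse
```

Like -match, Select-String is case-insensitive by default, so both “Access denied” and “file access ok” are reported.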

Wrap-Up

Automating software development processes is a common practice in the industry because of the benefits it offers. It saves time, reduces errors, improves productivity, and allows the team to focus on more challenging and creative tasks.

From a broader perspective, not automating the process but doing it manually might not be a tragic event in the traditional sense, as it does not involve loss of life or extreme suffering. However, it could be seen as a missed chance for improvement and growth.