Project GitHub Repository

The complete source code of this project can be found at https://github.com/goh-chunlin/WebcamWinForm.

Project Introduction

In 2017, inspired by “How to use a web cam in C# with .NET Framework 4.0 and Microsoft Expression Encoder 4”, a Code Project article by Italian software developer Massimo Conti, I built a simple demo showing how to connect a C# Windows Forms app to a webcam and record both video and audio.

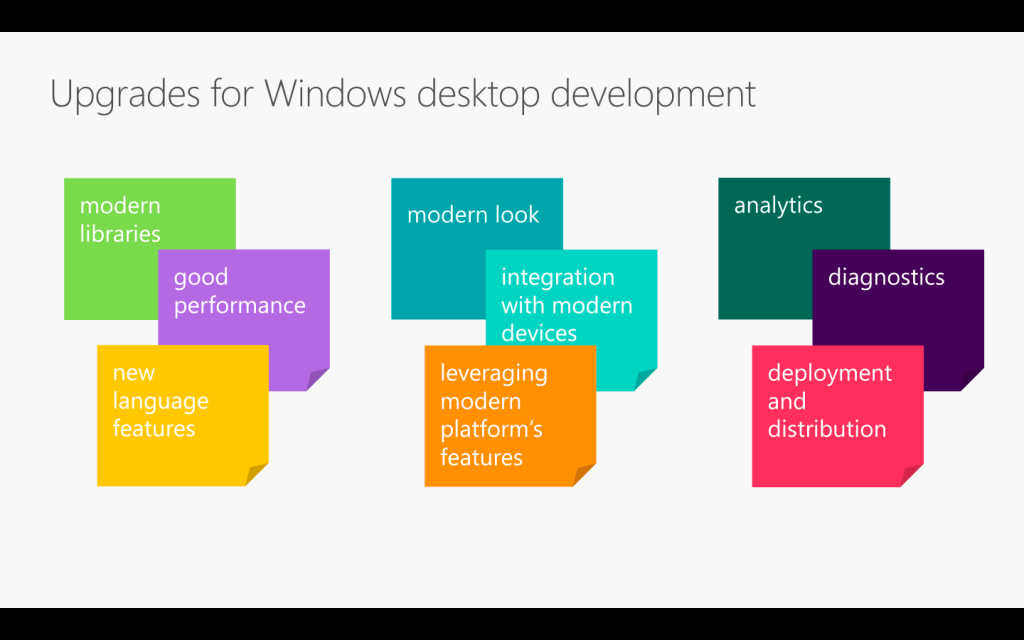

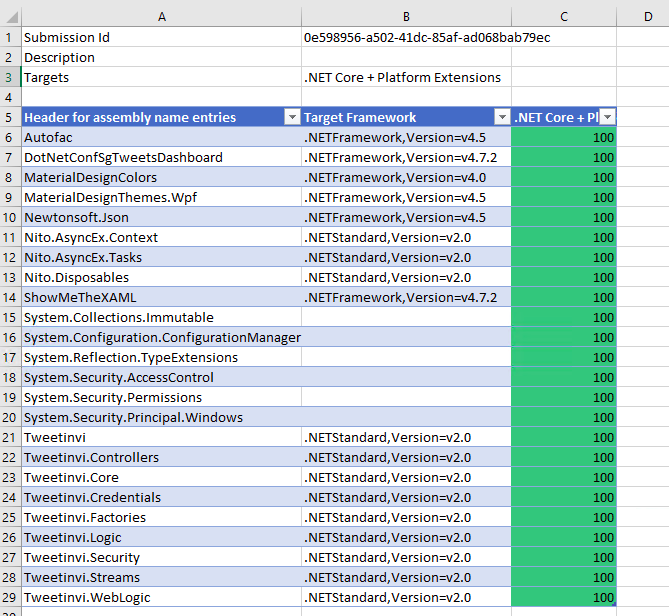

A few weeks ago, I noticed that back in 2019, GitHub had already warned me that some libraries used in the project had a potential security vulnerability and needed to be looked into. Meanwhile, WinForms was one of the highlights of the .NET Conf 2020 keynote. So I took this opportunity to upgrade the project to .NET 5 and to replace its deprecated libraries.

In this article, I will share some of the issues that I encountered in the journey of modernising the WinForms app and how I fixed them.

Disappearance of Microsoft Expression Encoder

Previously, we relied heavily on Microsoft Expression Encoder for the video and audio recording, and it was very easy to use. However, after version 4 was released in March 2016, Microsoft stopped updating the package. Soon after that, the Microsoft.Expression.Encoder NuGet package was marked as deprecated and later unlisted from the NuGet website.

So we now need alternatives for the following three tasks, which the Microsoft.Expression.Encoder library previously handled with ease:

- Listing the devices for video and audio on the machine;

- Recording video;

- Recording audio.

What Devices Are Available?

Sometimes a machine has more than one webcam connected to it. So how do we locate these devices in our application?

Here, we need another Microsoft multimedia API called DirectShow. Thankfully, an up-to-date DirectShow NuGet package for .NET Standard 2.0 is available, so we will use it in the project.

Using DirectShow, we can list the video and audio devices available on the machine. For example, the following code iterates through the video devices and adds their names to a drop-down list.

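```csharp
using System.Collections.Generic;
using DirectShowLib;

// Enumerate the video capture devices and show their names in the drop-down list
var videoDevices = new List<DsDevice>(DsDevice.GetDevicesOfCat(FilterCategory.VideoInputDevice));

foreach (var device in videoDevices)
{
    ddlVideoDevices.Items.Add(device.Name);
}
```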

To get the audio devices instead, we simply query the FilterCategory.AudioInputDevice category.

The selected video device matters because we later use it to specify which webcam to record with.
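Because the same enumeration is needed for both device categories, it can be wrapped in a small helper. The sketch below is my own rather than code from the project: CaptureDevices and GetNames are hypothetical names, and it assumes the DirectShowLib namespace provided by the DirectShow NuGet package mentioned above. It also disposes each DsDevice, which wraps a COM moniker, once its name has been read.

```csharp
using System;
using System.Collections.Generic;
using DirectShowLib;

public static class CaptureDevices
{
    // Returns the friendly names of every device in a DirectShow filter category,
    // e.g. FilterCategory.VideoInputDevice or FilterCategory.AudioInputDevice,
    // in the order DirectShow enumerates them.
    public static List<string> GetNames(Guid category)
    {
        var names = new List<string>();
        foreach (DsDevice device in DsDevice.GetDevicesOfCat(category))
        {
            names.Add(device.Name);
            device.Dispose(); // release the underlying COM moniker once we have the name
        }
        return names;
    }
}
```

For example, `ddlVideoDevices.Items.AddRange(CaptureDevices.GetNames(FilterCategory.VideoInputDevice).ToArray());` fills the video drop-down list, and passing FilterCategory.AudioInputDevice returns the microphone names instead.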

In fact, DirectShow alone could handle both video and audio recording. However, I find that too troublesome, so let’s take an easier route and use OpenCV, BasicAudio, and FFmpeg for the recording instead.

Recording Video with OpenCV

There is a .NET wrapper for OpenCV, OpenCvSharp. To record video with it, we first need to pass in the device index. Conveniently, this index corresponds to the order of the devices returned by DirectShow, so we can use the selected index of the drop-down list directly.

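```csharp
using OpenCvSharp;

// The selected drop-down index doubles as the OpenCV device index
int deviceIndex = ddlVideoDevices.SelectedIndex;

capture = new VideoCapture(deviceIndex);
capture.Open(deviceIndex); // the constructor already opens the device; Open re-opens it

// Write 640x480 frames at 29 FPS, compressed with HEVC, to video.mp4
outputVideo = new VideoWriter("video.mp4", FourCC.HEVC, 29, new OpenCvSharp.Size(640, 480));
```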

The video recording will be stored in a file called video.mp4.

The second parameter of VideoWriter is the FourCC (a four-character code identifying a video codec) used to compress the frames. Previously, I used the Intel IYUV codec, which stores uncompressed YUV frames, so the generated video files were always far too large. I therefore switched to the HEVC (High Efficiency Video Coding, aka H.265) codec. According to the Bitmovin Video Developer Report 2019, HEVC is used by 43% of video developers, making it the second most widely used video coding format after AVC.
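The setup code above hardcodes a 640x480 frame size and does not check whether the camera or the HEVC writer actually opened. A slightly more defensive sketch, assuming OpenCvSharp4, checks both and sizes the writer from the resolution the camera reports, since a VideoWriter expects every frame to match the size it was created with. WebcamRecorderFactory is a hypothetical name, not part of the project or of OpenCvSharp.

```csharp
using System;
using OpenCvSharp;

public static class WebcamRecorderFactory
{
    // Opens the webcam at deviceIndex and an HEVC writer sized to the camera's frames,
    // disposing whatever was already created if either step fails.
    public static (VideoCapture Capture, VideoWriter Writer) Open(int deviceIndex, string outputPath, double fps)
    {
        var capture = new VideoCapture(deviceIndex); // the constructor already opens the device
        if (!capture.IsOpened())
        {
            capture.Dispose();
            throw new InvalidOperationException($"Could not open camera #{deviceIndex}.");
        }

        var frameSize = new Size(capture.FrameWidth, capture.FrameHeight);
        var writer = new VideoWriter(outputPath, FourCC.HEVC, fps, frameSize);
        if (!writer.IsOpened())
        {
            writer.Dispose();
            capture.Dispose();
            throw new InvalidOperationException("Could not create the HEVC video writer; is an HEVC encoder available?");
        }

        return (capture, writer);
    }
}
```

In the form, the fields could then be assigned in one line: `(capture, outputVideo) = WebcamRecorderFactory.Open(ddlVideoDevices.SelectedIndex, "video.mp4", 29);`.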

The third parameter is the frame rate (FPS). We use 29 because, after a few rounds of experiments, we found that a video generated at 29 FPS plays back at real speed and therefore stays in sync with the audio recording later.
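Why does this value matter so much? A video file stores frames plus a declared frame rate, so its playback length is the number of frames divided by that rate. If the declared FPS is higher than the rate at which frames were actually written, the video plays too fast and ends before the audio. The hypothetical FrameTiming helper below simply spells out that arithmetic.

```csharp
public static class FrameTiming
{
    // Playback length of a clip: frames stored in the file divided by its declared FPS.
    public static double PlaybackSeconds(double framesWritten, double declaredFps) =>
        framesWritten / declaredFps;

    // How many seconds shorter the video is than the real-time recording (e.g. the audio)
    // when frames were written at actualFps but the file declares declaredFps.
    // A negative result means the video is longer than the recording.
    public static double DriftSeconds(double realSeconds, double actualFps, double declaredFps) =>
        realSeconds - PlaybackSeconds(realSeconds * actualFps, declaredFps);
}
```

For a 60-second recording in which 29 frames per second were actually written, `PlaybackSeconds(1740, 29)` gives 60 seconds, while declaring 30 FPS instead gives `DriftSeconds(60, 29, 30)` = 2, so the video would end 2 seconds before the audio.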

The actual recording takes place in a timer with an interval of 17 ms, so in theory its Tick event fires about 58.82 times per second (1000 / 17); in practice, the rate also depends on the timer’s resolution and on how long each tick takes. In the Tick event handler, we capture a video frame, convert it to a Bitmap so that it can be displayed in the application, and finally write the frame to the VideoWriter.

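```csharp
using OpenCvSharp;
using OpenCvSharp.Extensions; // BitmapConverter

// ...

// Grab the next frame from the webcam
frame = new Mat();
capture.Read(frame);

// ...

// Convert the frame into whichever Bitmap slot is free
if (imageAlternate == null)
{
    isUsingImageAlternate = true;
    imageAlternate = BitmapConverter.ToBitmap(frame);
}
else if (image == null)
{
    isUsingImageAlternate = false;
    image = BitmapConverter.ToBitmap(frame);
}

// Show the newly filled Bitmap and append the frame to the video file
pictureBox1.Image = isUsingImageAlternate ? imageAlternate : image;
outputVideo.Write(frame);

// ...
```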

As you may have noticed in the code above, the image shown in pictureBox1 comes from either imageAlternate or image. Swapping between the two lets me dispose of the Bitmap that is not currently on screen and so prevent a memory leak. This approach was suggested by Rahul and Kennyzx in a Stack Overflow discussion.
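The two-slot swap is what the project uses. A simpler pattern with the same goal is to remember the Bitmap currently on screen and dispose of it once its replacement has been assigned. The sketch below shows that alternative; DisposableSlot is a hypothetical helper of my own, not part of OpenCvSharp or WinForms.

```csharp
#nullable enable
using System;

// Remembers the object currently in use and disposes the previous one
// when a replacement is stored.
public sealed class DisposableSlot<T> : IDisposable where T : class, IDisposable
{
    public T? Current { get; private set; }

    // Stores next as the current object and disposes the one it replaces
    // (unless the same instance is stored again).
    public void Replace(T? next)
    {
        T? previous = Current;
        Current = next;
        if (previous != null && !ReferenceEquals(previous, next))
        {
            previous.Dispose();
        }
    }

    // Disposes the current object, if any, and leaves the slot empty.
    public void Dispose() => Replace(null);
}
```

In the Tick handler, the order matters: hand the new Bitmap to the PictureBox first, then dispose of the old one.

```csharp
// displayedFrame is a hypothetical field: DisposableSlot<Bitmap> displayedFrame = new();
Bitmap bitmap = BitmapConverter.ToBitmap(frame);
pictureBox1.Image = bitmap;      // the PictureBox now paints the new frame
displayedFrame.Replace(bitmap);  // dispose the Bitmap shown on the previous tick
```

Mat is IDisposable as well, so the same idea applies to the Mat created on each tick.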

Recording Audio with BasicAudio

Since OpenCV only takes care of the video, we need another library to record the audio. The library chosen here is BasicAudio, which provides audio recording for Windows desktop applications. In November 2020, .NET 5 support was added to this library as well.

Currently, BasicAudio records from the current audio device, so we don’t need to worry about choosing one. The following code is all we need to start and stop the audio recording.

```csharp
audioRecorder = new Recording();
audioRecorder.Filename = "sound.wav";
// ...
audioRecorder.StartRecording();
// ...
audioRecorder.StopRecording();
```

I’ve also found an interesting video in which platform invoke (System.Runtime.InteropServices) is used to send commands directly to an MCI device to record audio. I haven’t managed to get it working myself, but if you would like to play around with it, feel free to check out that video.
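For the curious, the MCI route looks roughly like the sketch below. I have not verified it as part of this project. It calls the documented mciSendString function in winmm.dll with the waveaudio command strings open, record, stop, save and close; MciAudioRecorder and the recsound alias are hypothetical names of my own, and it only works on Windows.

```csharp
#nullable enable
using System;
using System.Runtime.InteropServices;
using System.Text;

// Hypothetical wrapper around the Win32 MCI command-string interface in winmm.dll (Windows only).
public static class MciAudioRecorder
{
    private const string Alias = "recsound"; // any alias works; it names the opened device

    [DllImport("winmm.dll", CharSet = CharSet.Unicode)]
    private static extern int mciSendString(string command, StringBuilder? returnString,
                                            int returnLength, IntPtr hwndCallback);

    // Command strings, kept separate so they can be inspected without calling winmm.dll.
    public static string OpenCommand() => $"open new type waveaudio alias {Alias}";
    public static string RecordCommand() => $"record {Alias}";
    public static string StopCommand() => $"stop {Alias}";
    public static string SaveCommand(string path) => $"save {Alias} \"{path}\""; // quoted for paths with spaces
    public static string CloseCommand() => $"close {Alias}";

    public static void Start()
    {
        Send(OpenCommand());
        Send(RecordCommand());
    }

    public static void StopAndSave(string path)
    {
        Send(StopCommand());
        Send(SaveCommand(path));
        Send(CloseCommand());
    }

    // mciSendString returns 0 on success and an MCI error code otherwise.
    private static void Send(string command)
    {
        int error = mciSendString(command, null, 0, IntPtr.Zero);
        if (error != 0)
        {
            throw new InvalidOperationException($"MCI command '{command}' failed with error {error}.");
        }
    }
}
```

`MciAudioRecorder.Start()` begins recording from the default input, and `MciAudioRecorder.StopAndSave(@"C:\Temp\sound.wav")` would write the WAV file.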

Merging Video and Audio into One File

Since we end up with one video file and one audio file, we need to merge them into a single multimedia file for convenience. Fortunately, FFmpeg does this easily, and there is a .NET Standard FFmpeg/FFprobe wrapper, FFMpegCore, that lets us merge the video and audio files with just one line of code.

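```csharp
using FFMpegCore;

// Combine the video of video.mp4 with the audio of sound.wav into output.mp4
FFMpeg.ReplaceAudio("video.mp4", "sound.wav", "output.mp4", true);
```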

The last parameter is set to true so that the output stops when the shorter of the two inputs ends: if the video file is shorter than the audio file, the output uses the video length; otherwise, it uses the audio length.
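For reference, a similar merge can be expressed as a plain FFmpeg command, where stopping at the shorter input corresponds to FFmpeg’s -shortest option. The sketch below builds such an argument list and runs ffmpeg through System.Diagnostics.Process. It is an alternative to FFMpegCore, not a copy of the arguments that library generates; FfmpegMerge is a hypothetical name, and it assumes ffmpeg is on the PATH.

```csharp
#nullable enable
using System.Collections.Generic;
using System.Diagnostics;

public static class FfmpegMerge
{
    // Builds ffmpeg arguments that take the video stream from videoPath, the audio stream
    // from audioPath, and stop writing when the shorter of the two inputs ends.
    public static IReadOnlyList<string> BuildArguments(string videoPath, string audioPath, string outputPath) =>
        new[]
        {
            "-y",               // overwrite outputPath if it already exists
            "-i", videoPath,    // input 0
            "-i", audioPath,    // input 1
            "-map", "0:v:0",    // first video stream of input 0
            "-map", "1:a:0",    // first audio stream of input 1
            "-c:v", "copy",     // keep the HEVC video as-is, no re-encoding
            "-c:a", "aac",      // encode the WAV (PCM) audio as AAC for the MP4 container
            "-shortest",        // end the output with the shorter input
            outputPath,
        };

    // Runs ffmpeg and returns true if it exits with code 0.
    // Process.Start throws a Win32Exception if ffmpeg cannot be found.
    public static bool Run(string videoPath, string audioPath, string outputPath)
    {
        var startInfo = new ProcessStartInfo("ffmpeg") { UseShellExecute = false };
        foreach (string argument in BuildArguments(videoPath, audioPath, outputPath))
        {
            startInfo.ArgumentList.Add(argument);
        }

        using Process? process = Process.Start(startInfo);
        if (process is null)
        {
            return false;
        }
        process.WaitForExit();
        return process.ExitCode == 0;
    }
}
```

Calling `FfmpegMerge.Run("video.mp4", "sound.wav", "output.mp4")` would then produce output.mp4, provided FFmpeg is installed.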

However, for this line of code to work, FFmpeg must first be installed on the machine, as shown in the screenshot below.

Uploading the Multimedia File to Azure Blob Storage

This is just a bonus feature of the project. The previous version of the project also had it, but it used the WindowsAzure.Storage library, which has been deprecated since 2018. I have now upgraded the project to use Azure.Storage.Blobs.
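With Azure.Storage.Blobs v12, the upload itself is short. The sketch below is not the project’s code: RecordingUploader, the timestamp-based blob name and the container name passed in are assumptions, and the connection string would normally come from configuration rather than source code.

```csharp
using System;
using System.Globalization;
using System.Threading.Tasks;
using Azure.Storage.Blobs;

public static class RecordingUploader
{
    // Hypothetical naming scheme: "recording-yyyyMMdd-HHmmss.mp4" based on a UTC timestamp.
    public static string BuildBlobName(DateTime utcTimestamp) =>
        "recording-" + utcTimestamp.ToString("yyyyMMdd-HHmmss", CultureInfo.InvariantCulture) + ".mp4";

    // Uploads the file at filePath to the given container (creating it if needed)
    // and returns the URI of the new blob.
    public static async Task<Uri> UploadAsync(string connectionString, string containerName, string filePath)
    {
        var container = new BlobContainerClient(connectionString, containerName);
        await container.CreateIfNotExistsAsync();

        BlobClient blob = container.GetBlobClient(BuildBlobName(DateTime.UtcNow));
        await blob.UploadAsync(filePath, overwrite: true);
        return blob.Uri;
    }
}
```

For example, `Uri uri = await RecordingUploader.UploadAsync(connectionString, "recordings", "output.mp4");` would upload the merged file to a container named recordings.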

With all this done, we finally have a webcam recording feature in our WinForms application, as shown in the screenshot below.

References

- How to Use a Webcam in C# with .NET Framework 4.0 and Microsoft Expression Encoder 4;

- Visual Studio C# VideoCapture;

- Memory Leak while converting Opencv Mat to .net BitMap;

- memory leak with videocapture, memory goes up with the function of read(image);

- openCV video saving in python;

- EMGU CV videowriter is unable to make video;

- WebCam Video Recording in MPEG using C# Desktop Application;

- GitHub: rosenbjerg/FFMpegCore;

- Look at me! Windows Image Acquisition;

- Record audio in Windows Form C#;

- Multimedia Command Strings;

- How to Install FFmpeg on Windows;

- GitHub: BtbN/FFmpeg-Builds;

- Quickstart: Azure Blob storage client library v12 for .NET.

The code described in this article can be found in my GitHub repository: https://github.com/goh-chunlin/WebcamWinForm.