Objective

In November 2023, Azure announced by email that, from 31 October 2024, all interactions with its services must be secured with Transport Layer Security (TLS) 1.2 or later. After this date, support for TLS 1.0 and 1.1 will be discontinued.

By default, Azure Storage already supports TLS 1.2 on public HTTPS endpoints. However, some companies still use TLS 1.0 or 1.1. To maintain their connections to Azure Storage, they have to update their operating systems and apps to support TLS 1.2.
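As a quick first check on a client machine, we can ask the local runtime which TLS versions it is able to negotiate. The snippet below is a minimal sketch in Python, chosen only for illustration; the helper name `tls_support_report` is our own, and it reports what the local Python and OpenSSL build supports, not what any particular app actually uses.

```python
import ssl


def tls_support_report() -> dict:
    """Summarise which TLS versions the local Python/OpenSSL build supports."""
    return {
        "OpenSSL": ssl.OPENSSL_VERSION,   # version string of the linked OpenSSL
        "TLS 1.2": ssl.HAS_TLSv1_2,       # True if TLS 1.2 is available
        "TLS 1.3": ssl.HAS_TLSv1_3,       # True if TLS 1.3 is available
    }


for name, value in tls_support_report().items():
    print(f"{name}: {value}")
```

If TLS 1.2 is reported as unavailable, the runtime or the operating system underneath it most likely needs to be updated first.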

About TLS

The history of TLS can be traced back to SSL.

SSL stands for “Secure Sockets Layer,” and it was developed by Netscape in the 1990s. SSL was one of the earliest cryptographic protocols developed to provide secure communication over a computer network.

SSL was found to have several vulnerabilities over time, which led to its deprecation in favor of more secure protocols such as TLS. In 1999, TLS 1.0 was introduced as an improvement over SSL. Nowadays, the term “SSL” is still commonly used colloquially for this broader category of secure protocols, but it typically means TLS.

While TLS addressed some SSL vulnerabilities, it still had weaknesses, and over time, security researchers identified new threats and attacks. Subsequent versions of TLS, i.e. TLS 1.1, TLS 1.2, and TLS 1.3, were developed to further enhance security and address vulnerabilities.

Why TLS 1.2?

By the mid-2010s, it became increasingly clear that TLS 1.2 was a more secure choice, and we were encouraged to upgrade our systems to support it. TLS 1.2 introduced new and stronger cipher suites, including authenticated encryption suites based on the Advanced Encryption Standard in Galois/Counter Mode (AES-GCM), providing better security than the older algorithms.

In 2018, ten years after TLS 1.2 was officially released as a standardised protocol, TLS 1.3 was published by the Internet Engineering Task Force (IETF).

The coexistence of TLS 1.2 and TLS 1.3 is currently part of a transitional approach, allowing organisations to support older clients that may not yet have adopted TLS 1.3.

For Microsoft Azure, if the services we are using still depend on TLS 1.0 or 1.1, we are advised to migrate them to TLS 1.2 or 1.3 by 31 October 2024.

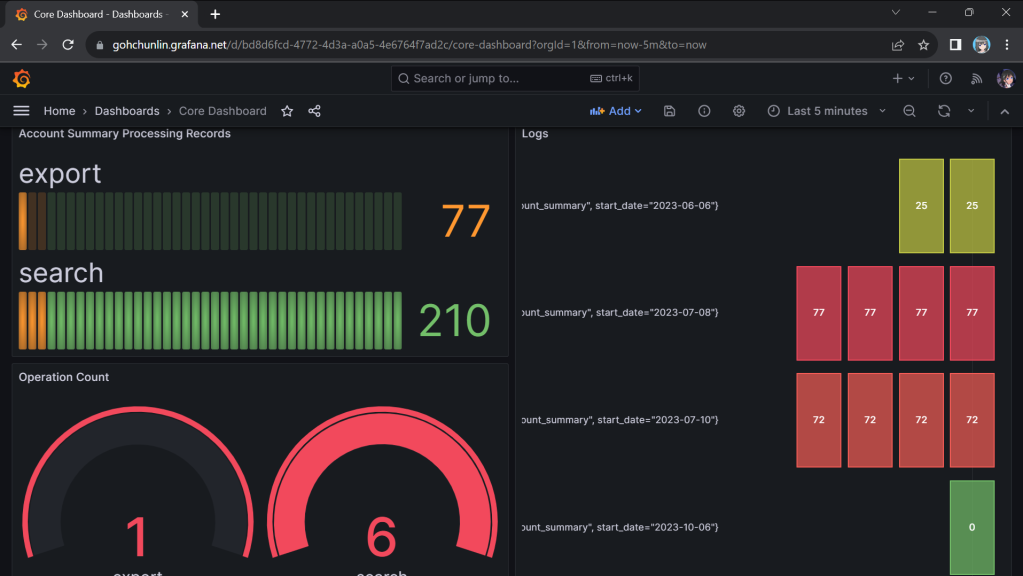

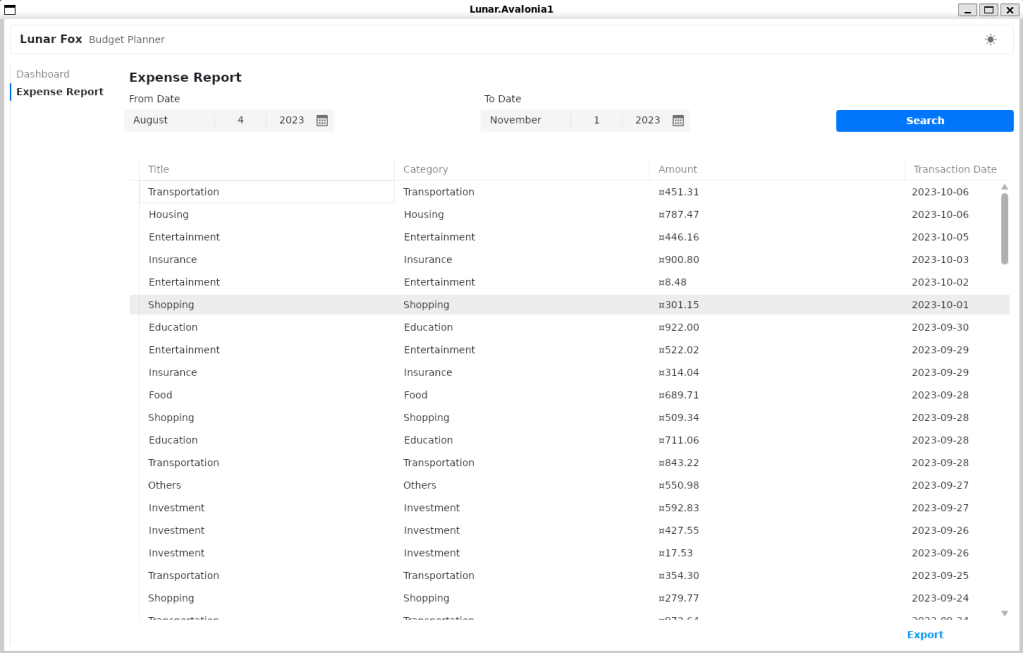

Monitoring TLS Version of Requests

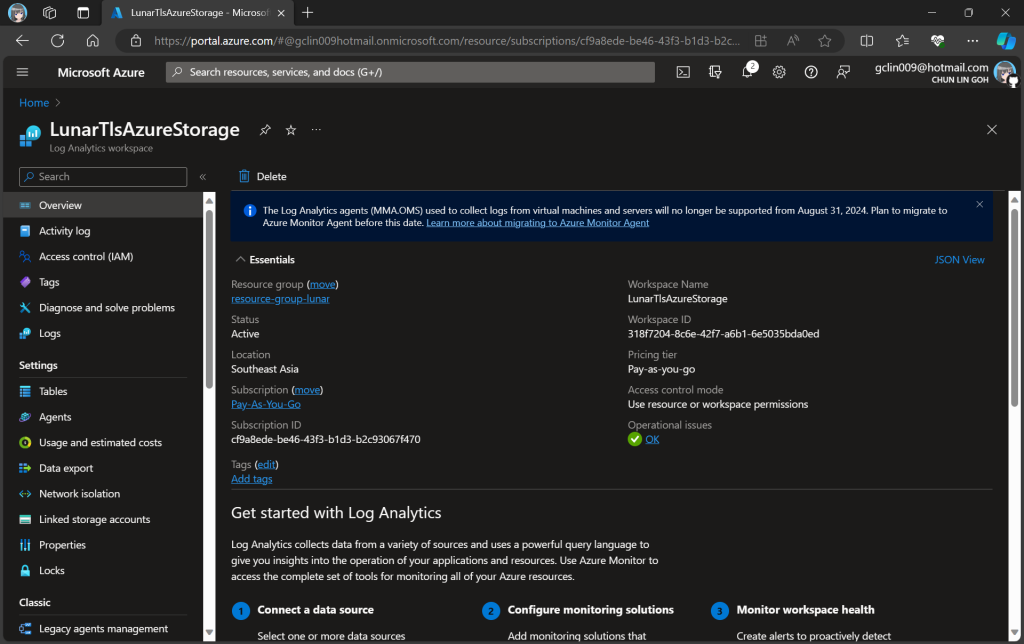

Before we enable that, we should set up logging to make sure that our Azure policy is working as intended. Here, we will be using Azure Monitor.

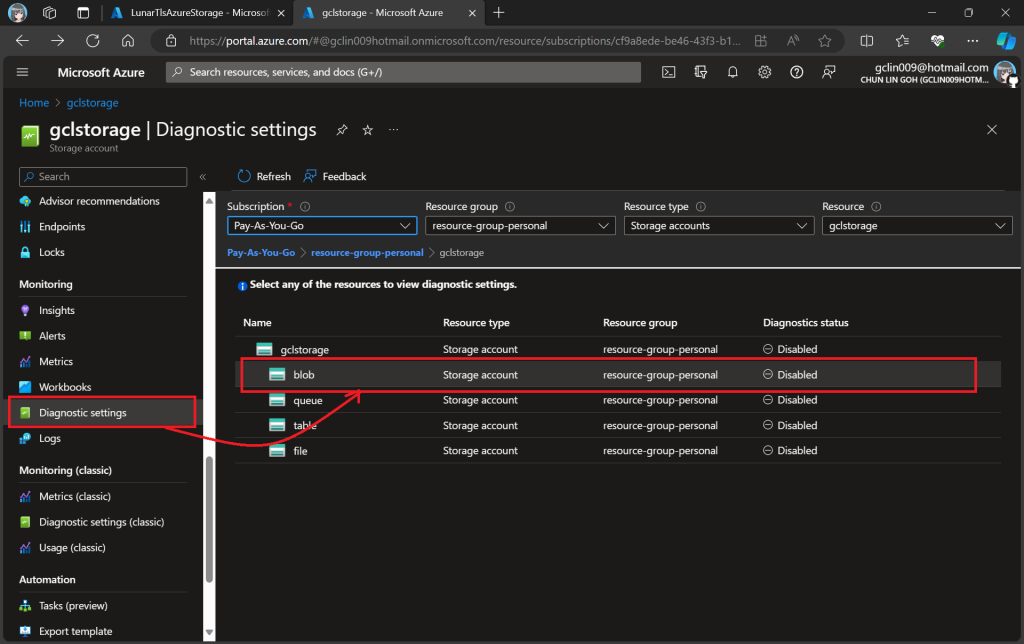

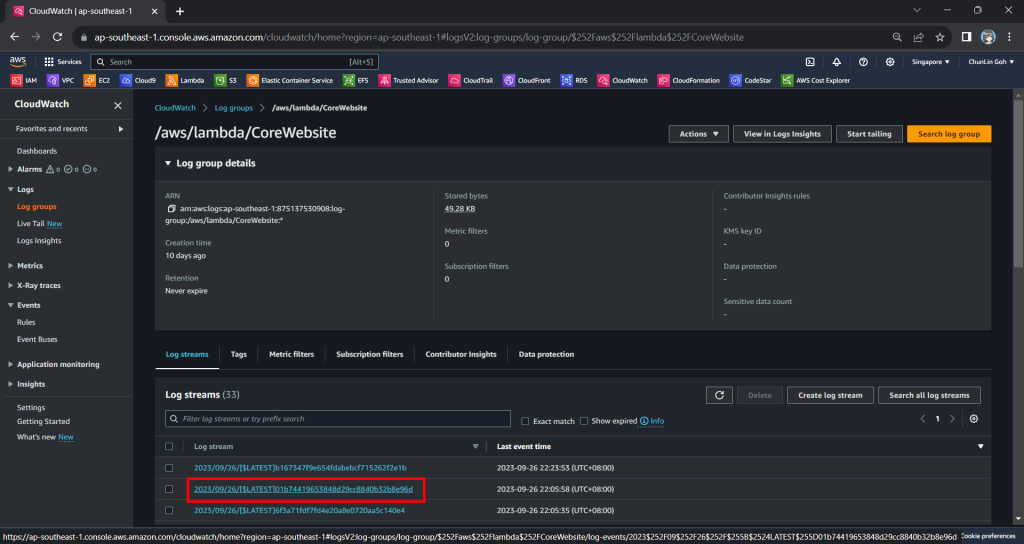

In this article, we will only be logging requests to Blob Storage. Hence, we will set up the diagnostic settings of the storage account as shown in the screenshot below.

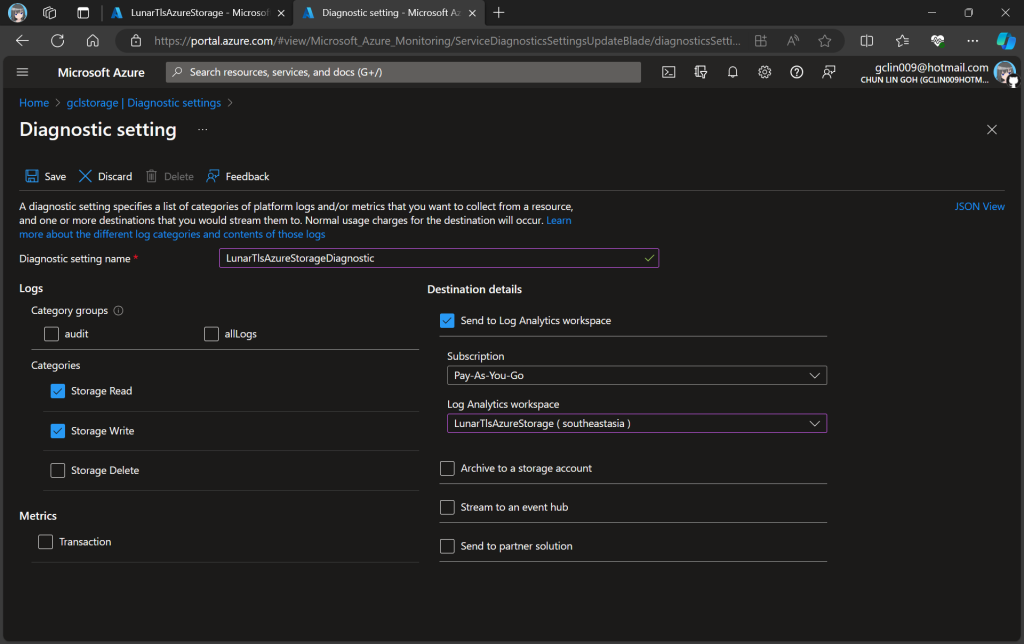

In the next step, we specify that we would like to collect logs of only the read and write requests to Azure Blob Storage. After that, we send the logs to the Log Analytics workspace we have just created above.

After we have created the diagnostic setting, requests to the storage account are subsequently logged according to that setting.

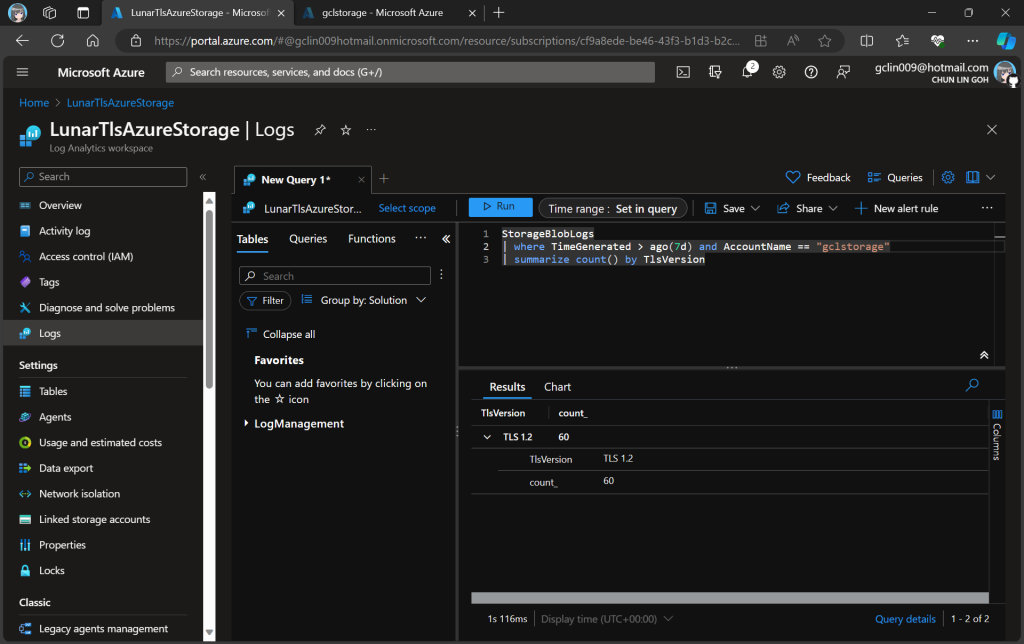

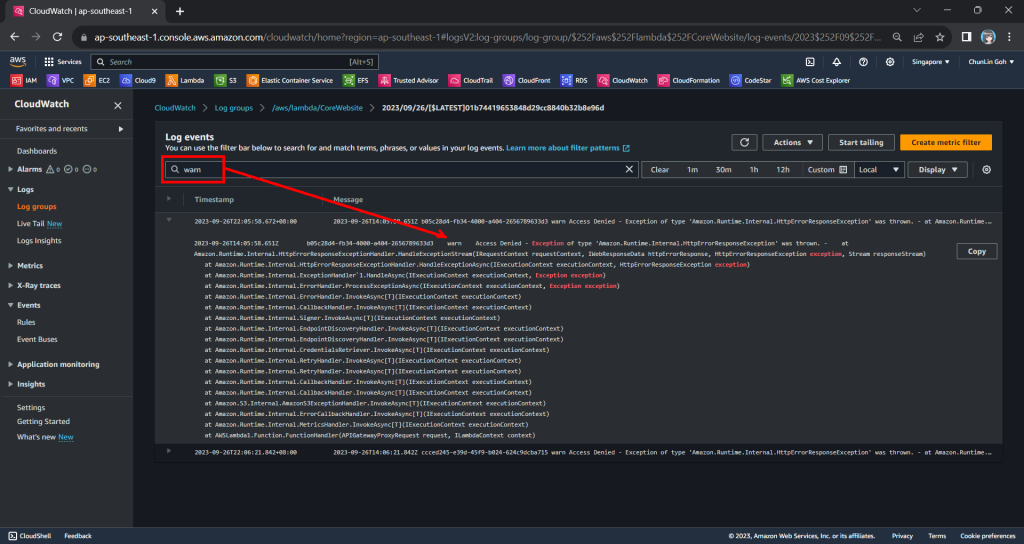

As demonstrated in the following screenshot, we use a query to find out how many requests were made against our blob storage with each version of TLS over the past seven days.

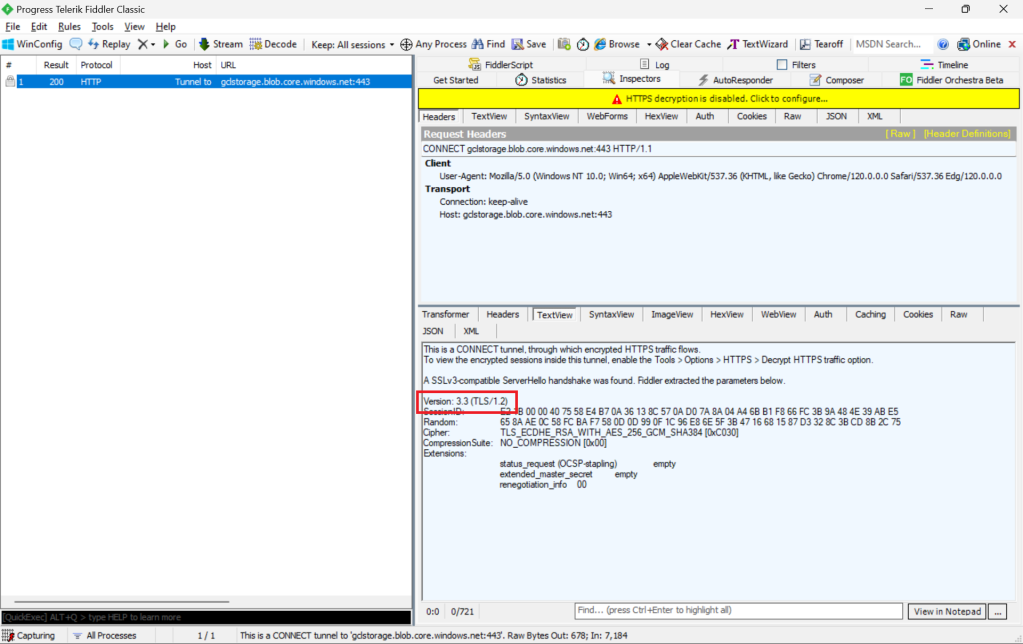

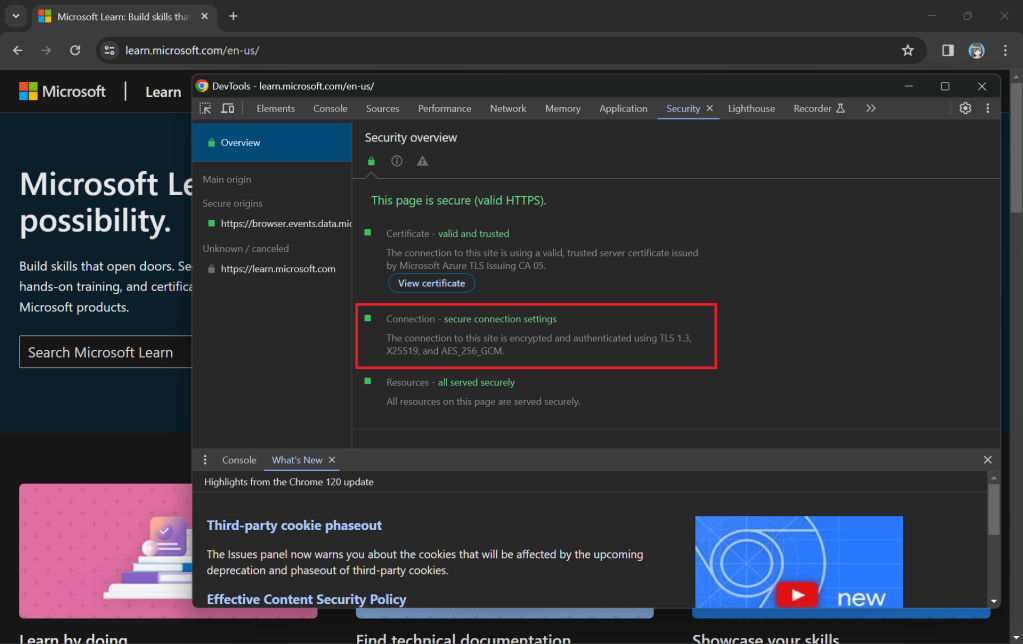
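For readers who cannot see the screenshots, the sketch below shows the kind of query Microsoft documents for this purpose and mirrors its `summarize count() by TlsVersion` step in Python. It is an assumption that this matches the query in the screenshot exactly. `<account-name>` is a placeholder, the sample rows are hypothetical, and the helper names are our own; only `StorageBlobLogs`, `TlsVersion` and `CallerIpAddress` are names from the Azure Monitor log schema.

```python
from collections import Counter

# Log Analytics query in the form documented by Microsoft.
# "<account-name>" is a placeholder for the storage account name.
TLS_VERSION_QUERY = """
StorageBlobLogs
| where TimeGenerated > ago(7d) and AccountName == "<account-name>"
| summarize count() by TlsVersion
"""


def count_by_tls_version(rows):
    """Count logged requests per TLS version, like 'summarize count() by TlsVersion'."""
    return Counter(row["TlsVersion"] for row in rows)


def legacy_callers(rows, allowed=("TLS 1.2", "TLS 1.3")):
    """Return the sorted caller addresses that used a TLS version outside 'allowed'."""
    return sorted({row["CallerIpAddress"] for row in rows
                   if row["TlsVersion"] not in allowed})


# Hypothetical rows, shaped like an export of the log table.
sample_rows = [
    {"TlsVersion": "TLS 1.2", "CallerIpAddress": "203.0.113.10"},
    {"TlsVersion": "TLS 1.0", "CallerIpAddress": "203.0.113.25"},
    {"TlsVersion": "TLS 1.2", "CallerIpAddress": "203.0.113.10"},
]

print(count_by_tls_version(sample_rows))
print(legacy_callers(sample_rows))
```

Listing the callers that still use an old version tells us which clients to upgrade before a stricter minimum is enforced.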

Verify with Telerik Fiddler

Fiddler is a popular web debugging proxy tool that allows us to monitor, inspect, and debug HTTP traffic between our machine and the Internet. Fiddler can thus be used to inspect and analyze both TLS and SSL requests.

We can refer to the Fiddler trace to confirm that TLS 1.2 was used to send the request to the blob storage “gclstorage”, as shown in the following screenshot.

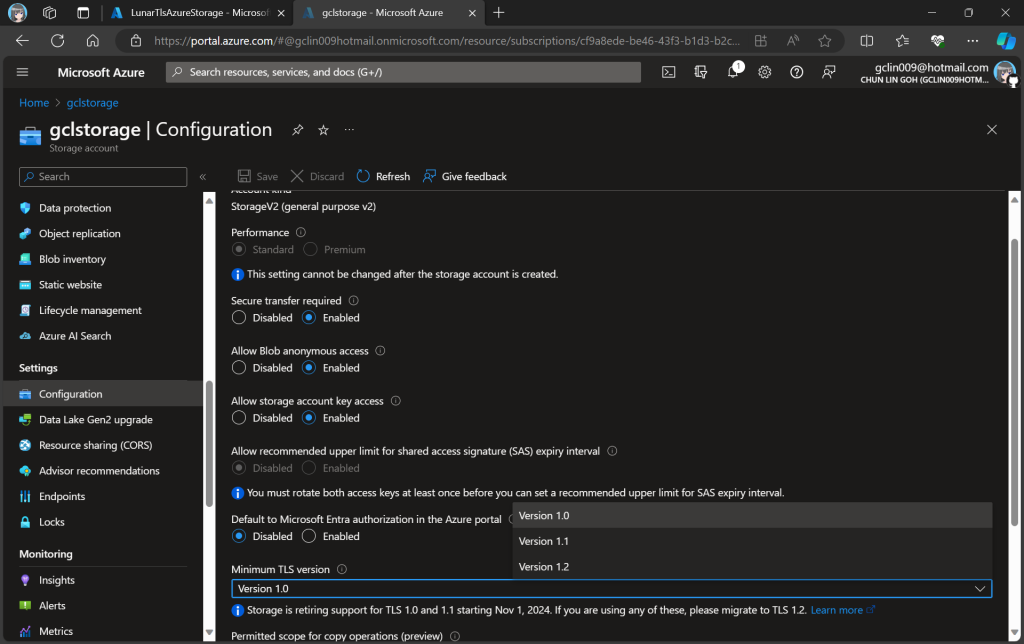

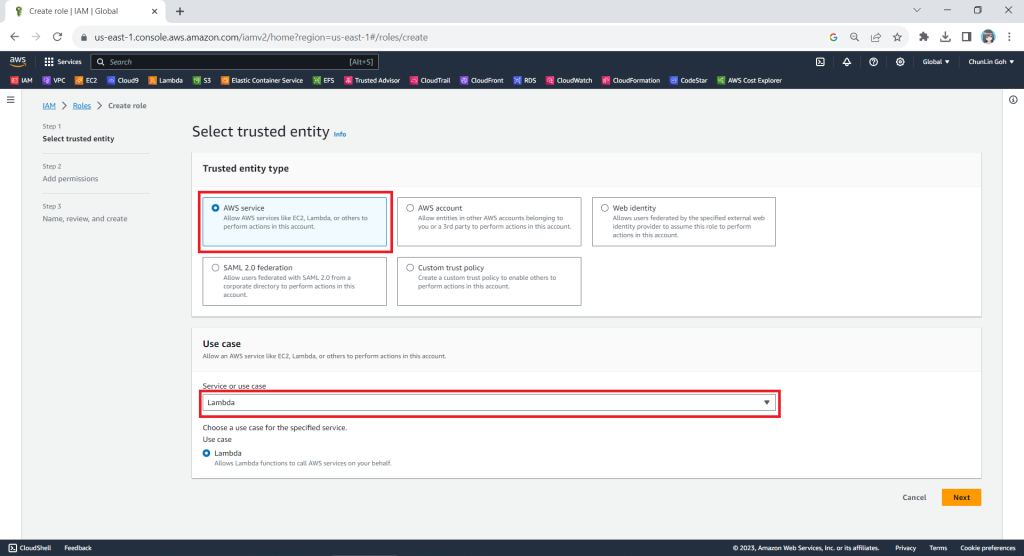

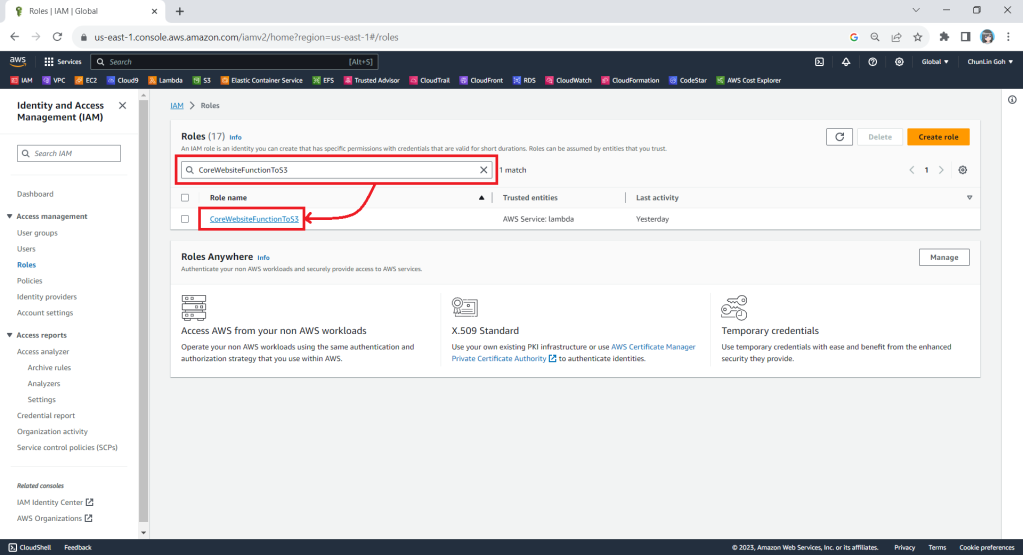
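If Fiddler is not at hand, a similar check can be scripted. The following is a minimal sketch in Python, not part of the Fiddler workflow above: it opens a TLS connection to the blob endpoint and reports the protocol version that was negotiated. The helper names are our own, and the host is assumed to follow the usual `<account>.blob.core.windows.net` pattern.

```python
import socket
import ssl
from typing import Optional


def blob_endpoint(account_name: str) -> str:
    """Build the public Blob Storage host name for a storage account."""
    return f"{account_name}.blob.core.windows.net"


def negotiated_tls_version(host: str, port: int = 443,
                           timeout: float = 5.0) -> Optional[str]:
    """Connect to host:port and return the negotiated TLS version string."""
    context = ssl.create_default_context()  # system trust store, default versions
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls_sock:
            return tls_sock.version()
```

Calling `negotiated_tls_version(blob_endpoint("gclstorage"))` requires network access. The result reflects what this Python client negotiates, which may differ from what another app on the same machine would use.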

Enforce the Minimum Accepted TLS Version

Currently, the minimum TLS version accepted by the storage account is set to TLS 1.0, which is the default.

In advance of the deprecation date, we can enable an Azure policy to enforce a minimum TLS version of 1.2. Hence, we can now update the value to 1.2 so that the storage account rejects all requests from clients that send data to it using TLS 1.0 or 1.1.
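Behind the portal setting is the storage account property `minimumTlsVersion`, whose values include `TLS1_0`, `TLS1_1` and `TLS1_2`. The sketch below, with helper names of our own, builds the small JSON body that such an update would carry and models the effect of the setting. It is a toy model for reasoning about which clients get rejected, not a call to Azure.

```python
import json

# Values of the minimumTlsVersion property, oldest first.
TLS_ORDER = ["TLS1_0", "TLS1_1", "TLS1_2"]


def minimum_tls_patch(version: str = "TLS1_2") -> str:
    """Build the JSON body that sets minimumTlsVersion on a storage account."""
    if version not in TLS_ORDER:
        raise ValueError(f"unknown TLS version: {version}")
    return json.dumps({"properties": {"minimumTlsVersion": version}})


def is_accepted(client_version: str, minimum_version: str) -> bool:
    """Toy model: a request passes only if its TLS version meets the minimum."""
    return TLS_ORDER.index(client_version) >= TLS_ORDER.index(minimum_version)


print(minimum_tls_patch())
for client in TLS_ORDER:
    print(client, "accepted" if is_accepted(client, "TLS1_2") else "rejected")
```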

Change in Kestrel for ASP.NET Core

Meanwhile, Kestrel, the cross-platform web server for ASP.NET Core, now also uses the system default TLS protocol versions rather than restricting connections to the TLS 1.1 and TLS 1.2 protocols like it did previously.

Thus, if we are running our apps on the latest Windows servers, then the latest TLS should be automatically used by our apps without any configuration from our side.

In fact, according to the TLS best practices guide from Microsoft, we should not specify the TLS version in our code. Instead, we should configure our code to let the OS decide on the TLS version for us.
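The guidance above is written for .NET, but the same idea can be illustrated in other runtimes. The sketch below uses Python's standard `ssl` module as an analogy, with helper names of our own: the first function takes the platform defaults untouched, while the second sets only a floor and leaves the upper bound open, so newer versions are picked up automatically instead of being pinned.

```python
import ssl


def default_context() -> ssl.SSLContext:
    """Use the platform defaults; no TLS version is hard-coded."""
    return ssl.create_default_context()


def context_with_floor(
    minimum: ssl.TLSVersion = ssl.TLSVersion.TLSv1_2,
) -> ssl.SSLContext:
    """Set only a minimum version; newer versions are still negotiated if available."""
    context = ssl.create_default_context()
    context.minimum_version = minimum
    return context


ctx = context_with_floor()
print(ctx.minimum_version, ctx.maximum_version)
```

Pinning a single version would instead have to be revisited every time a new TLS version is released, which is the maintenance burden the best practices guide warns against.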

Wrap-Up

To strengthen security for Windows users, the default configuration of the operating system disables TLS 1.0 and 1.1 as of September 2023.

As developers, we should ensure that all apps and services running on Windows are using up-to-date versions that support TLS 1.2 or higher. Hence, prior to the enforcement of TLS updates, we must test our apps in a controlled environment to verify compatibility with TLS 1.2 or later.

While TLS 1.0 and 1.1 will be disabled by default, it is still good practice to confirm these settings and ensure they align with our security requirements.

By taking these proactive measures, we should be able to have a seamless transition to updated TLS versions, maintaining a secure computing environment while minimising any potential disruptions to applications or services.

References

- Azure support for TLS 1.0 and TLS 1.1 will end by 31 October 2024;

- TLS 1.0 and TLS 1.1 soon to be disabled in Windows;

- Migrate to TLS 1.2 for Azure Blob Storage;

- Enforce a minimum required version of Transport Layer Security (TLS) for requests to a storage account;

- Configure Transport Layer Security (TLS) for a client application;

- Breaking change: Kestrel: Default supported TLS protocol versions changed – .NET;

- Transport Layer Security (TLS) best practices with the .NET Framework;

- ServicePointManager.SecurityProtocol Property;

- Don’t manually change the TLS version using ServicePointManager.SecurityProtocol.