I don’t know why people like to call software developers hackers. Even though “hacker” is way better than other names like “ninja” or “code monkey”, I still don’t think it’s an appropriate name for developers. So I find it quite weird when I meet someone who is a Growth Hacker. Err… Hack what?

Growth Hacker

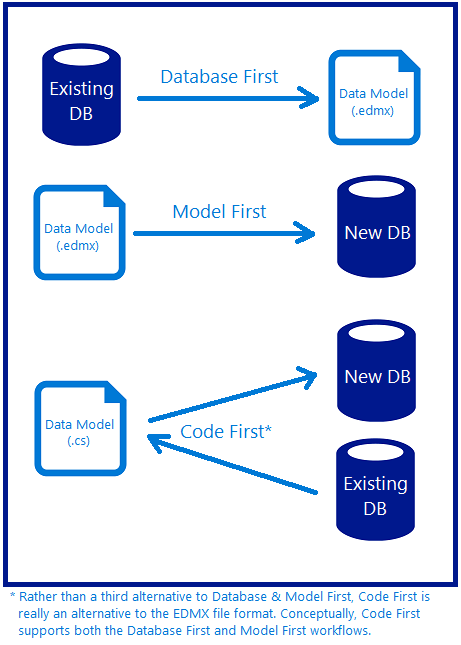

Andrew Chen, an investor in tech startups, describes a growth hacker as

a hybrid of marketer and coder, one who looks at the traditional question of “How do I get customers for my product?” and answers with A/B tests, landing pages, viral factor, email deliverability, and Open Graph.

A growth hacker is someone who has thrown out the playbook of traditional marketing and replaced it with only what is testable, trackable, and scalable. Their tools are e-mails, pay-per-click ads, blogs, and platform APIs instead of commercials, publicity, and money.

(Holiday, Ryan. 2013. Growth Hacker Marketing. New York : Penguin Group, 2013)

Hence, a growth hacker plays an important role in a startup or SME that has little to no resources. Growth hackers use their programming skills to provide a more scientific way of understanding who the customers are and where they are.

Growth hacking highlights the importance of Product Market Fit. Instead of expecting marketers to promote a product that nobody wants, a company with a growth hacking mindset spends time trying different ways of improving the product based on customer feedback.

A growth hacker tests ideas by letting customers try out different versions of the product and then asking them what they like about it. Feedback can also be measured, for example by looking at the conversion rate or the number of Facebook likes received. This helps the company to release a product that is worth marketing and that the majority of its customers love to use.
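To make the conversion rate idea a bit more concrete, here is a minimal sketch in Python that compares two versions of a product. The `Variant` class, the `better_variant` helper and all the numbers are made up for illustration; they do not come from any real test or library.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """One version of the product shown to a group of customers (hypothetical)."""
    name: str
    visitors: int
    conversions: int

    def conversion_rate(self) -> float:
        # Conversion rate = conversions / visitors; 0.0 when nobody has visited yet.
        if self.visitors == 0:
            return 0.0
        return self.conversions / self.visitors


def better_variant(a: Variant, b: Variant) -> Variant:
    """Return the variant with the higher conversion rate (ties go to `a`)."""
    return a if a.conversion_rate() >= b.conversion_rate() else b


# Made-up numbers, purely for illustration.
version_a = Variant("A", visitors=1000, conversions=30)
version_b = Variant("B", visitors=1000, conversions=45)
winner = better_variant(version_a, version_b)
```

This only compares the raw rates. A real test would probably also need to check whether the difference is large enough, given the number of visitors, to be more than noise.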

Different Stages in Growth Hacking

I’m glad to have talked to people who are experienced in growth hacking. They recommended two books to me. One is Growth Hacker Marketing by Ryan Holiday.

In Ryan Holiday’s book, growth hacking has three stages, as follows.

- Finding your growth hack;

- Going viral;

- Closing the loop: Retention and optimization.

Growth Hacking Tactics

The other book recommended to me is Growth Hacking Handbook by Jon Yongfook. The book suggests 100 growth tactics which many startups have successfully applied over the last two decades.

I am not going to list all 100 tactics here. I will just highlight some of them which I find interesting.

Tactic 01: The We Can’t Go Back Jack Hack

On the signup page or checkout page, disable or remove all navigational elements that would enable a user to go back to the previous page. This includes disabling your site logo, which is often linked to the homepage.

The reasoning behind this tactic is that preventing users from leaving a process is sometimes enough to push them to complete the entire signup/booking process.

I don’t really like this idea because it’s like locking customers in your shop and telling them that they can’t leave until they have paid. It creates a bad user experience.

Tactic 02: The Pre-filled Form Hack

Instead of forcing customers to buy our goods before leaving our shop, why don’t we just create a fast signup/checkout process?

One way of doing that is to keep the form short and to pre-fill form fields with information we already have, such as the customer’s email address.
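A minimal sketch of the pre-filling idea in Python could look like this. The field names in `CHECKOUT_FIELDS` and the `prefill_form` helper are my own assumptions for illustration, not something taken from the book.

```python
from typing import Dict, Optional

# Hypothetical short checkout form: only the fields we really need.
CHECKOUT_FIELDS = ("email", "name", "shipping_address")


def prefill_form(known_customer: Optional[Dict[str, str]]) -> Dict[str, str]:
    """Build the initial form values, using stored data where we have it."""
    known = known_customer or {}
    # Fields we know nothing about start empty, so the customer only
    # types what is missing. Stored data outside the form is ignored.
    return {field: known.get(field, "") for field in CHECKOUT_FIELDS}


# We already know the email address, so that field arrives pre-filled.
form = prefill_form({"email": "customer@example.com", "phone": "12345678"})
```

Keeping the list of fields short is part of the tactic: the stored phone number is ignored because the form never asks for it.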

For example, the checkout form below will scare customers away not only because it’s too long but also because it requests too much personal information without explaining why the information is needed.

Tactic 03: The As Seen On TV Hack

Users feel more comfortable when they know that the product they are using has already been used by famous people. Showing the logos of media outlets that have mentioned our product on our homepage is another form of social proof.

Tactic 04: The Multi Post Hack

This tactic is also quite straightforward. It basically means giving users the option to post the website content to other social networks with just one click. That helps to push our product to a wider audience.

Tactic 05: The Timebomb Hack

This is a pressure tactic where a time limit is set when a user is making a critical decision such as a purchase. I don’t like to use this tactic on my ecommerce website because, as a consumer, I prefer to choose what to buy without any pressure. I normally just leave a website when it forces me to finish a task in very little time.

Tactic 06: The Winback Hack and the Negative Follow Up Hack

These two tactics are quite similar. The goal is to get feedback from users who are inactive or have incomplete transactions, and to incentivize them to return to the website.

A simple winback campaign can automatically send a highly personalized email to users who have not logged in for the past 30 days. Normally, a winback campaign is our last chance to communicate with our users before they unsubscribe from us.
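Picking the recipients of such a campaign can be sketched in Python as below. The `users_to_win_back` function, the dictionary of last login times and the email addresses are all hypothetical; a real system would read this from its own user database and hand the result to whatever email service it uses.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def users_to_win_back(
    last_logins: Dict[str, datetime],
    now: datetime,
    inactive_days: int = 30,
) -> List[str]:
    """Return the emails of users whose last login is older than the cutoff."""
    cutoff = now - timedelta(days=inactive_days)
    return sorted(
        email for email, last_login in last_logins.items() if last_login < cutoff
    )


# Made-up data, purely for illustration.
now = datetime(2015, 6, 30)
last_logins = {
    "active@example.com": datetime(2015, 6, 25),
    "inactive@example.com": datetime(2015, 5, 1),
}
recipients = users_to_win_back(last_logins, now)
```

Here only the user who last logged in on 1 May falls outside the 30-day window, so only that user would receive the winback email.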

Sending an email to customers who have signed up but have not bought any of our products is also important. It helps us to better understand why some customers do not want to buy from us (sometimes it could be because of bugs in the payment module).

The following is an email I received after subscribing to an online service.

Hi Chun Lin,

I noticed you recently added our product to your cart but did not submit the order – wondering if you have any questions about our platform or pricing?

We’re always here to help so please do not hesitate to contact me! You can find my contact info, including my direct number, in the email signature below.

Direct number and contact info! Wow!

I didn’t sign up with them in the end because I didn’t really need their product at that moment. However, I’m still very impressed by their friendly email.

Tactic 07: The Intro Video Hack

If a picture is worth a thousand words, then a video is worth a million. Most people find video content more interesting than standard text content. Hence, video content is useful for attracting a significant number of inbound links and social shares for our website.

Recently, the Google Search results page has also started displaying Rich Snippets that contain information about the video embedded on a page. A Rich Snippet helps our web page stand out from the other search results, so users will be more inclined to click on the link pointing to our website.

Tactic 08: The Register to Save Hack

Instead of getting users to sign up first, we can choose to ask users for their email only after they have gone through some steps. This encourages users to try our product first and understand it better, so that the eventual conversion to signup is easy.

Tactic 09: The Widget Hack and The Affiliate Program Hack

Instead of asking users to share our website via a text link, why not give them an embeddable widget for our product which can be easily added to any other website or blog? From there, we can invite those who embed our widget on their websites/blogs to join our affiliate program so that they are motivated to refer customers to our website.

Tactic 10: The Remarketing Tag Hack

Nowadays, Facebook has an option to create Custom Audiences from our website, which enables us to target our Facebook Adverts at only those who have visited our website. Alternatively, it also allows us to exclude existing customers so that we can focus on new customer acquisition campaigns.
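The include/exclude logic behind this tactic can be sketched with plain Python sets. This does not call any Facebook API; the `remarketing_audience` function and the visitor IDs are hypothetical and only illustrate the idea of targeting visitors while leaving out existing customers.

```python
from typing import Set


def remarketing_audience(
    visitors: Set[str],
    customers: Set[str],
    exclude_customers: bool = True,
) -> Set[str]:
    """Return the website visitors to target, optionally minus existing customers."""
    if exclude_customers:
        # Set difference: visitors who have not bought from us yet.
        return visitors - customers
    return set(visitors)


# Made-up IDs, purely for illustration.
visitors = {"u1", "u2", "u3"}
customers = {"u2"}
new_customer_audience = remarketing_audience(visitors, customers)
```

With the exclusion turned on, the advert budget goes only to `u1` and `u3`, the visitors who are not customers yet.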