Before my team lead resigned, he assigned me the task of updating a legacy codebase written in .NET Framework 3.5. The task itself only involved submitting a multipart form request to a Web API, which made it relatively straightforward.

However, despite potential alternatives, my team lead insisted that I continue using .NET Framework 3.5. Furthermore, I was not allowed to incorporate any third-party JSON library into the changes I made.

SHOW ME THE CODE!

The complete source code of this project can be found at https://github.com/goh-chunlin/gcl-boilerplate.csharp/tree/master/console-application/HelloWorld.MultipartForm.

Multipart Form

A multipart form request is a type of form submission used to send data that includes binary or non-textual files, such as images, videos, or documents, along with other form field values. In a multipart form, the data is divided into multiple parts or sections, each representing a different form field or file.

The multipart form data format allows the data to be encoded and organized in a way that can be transmitted over HTTP and processed by web servers. For the challenge given by my team lead, the data consists of two sections: main_message, a field containing a JSON object, and a list of files.

The JSON object is very simple. It has only two fields, message and documentType, and for now documentType can only take the value “PERSONAL”.

{

"message": "...",

"documentType": "PERSONAL"

}

When the API server handles a multipart form request, it parses the data by splitting it into parts at the specified boundary and retrieves the value of each field or file for further processing or storage.

About the Boundaries

In a multipart form, the boundary is a unique string that serves as a delimiter between different parts of the form data. It helps in distinguishing one part from another. The following is an example of what a multipart form data request might look like with the boundary specified.

POST /submit-form HTTP/1.1

Host: example.com

Content-Type: multipart/form-data; boundary=--------------------------8db792beb8632a9

----------------------------8db792beb8632a9

Content-Disposition: form-data; name="main_message"

{

"message": "...",

"documentType": "PERSONAL"

}

----------------------------8db792beb8632a9

Content-Disposition: form-data; name="attachments"; filename="picture.jpg"

Content-Type: application/octet-stream

In the example above, the boundary is declared in the Content-Type header as a run of dashes followed by 8db792beb8632a9. Each part of the form data starts with a delimiter line, which is that boundary prefixed with two extra dashes, so the delimiter lines in the body are two dashes longer than the value in the header. When processing the multipart form data, the server uses the boundary to identify the different parts and extract the value of each form field or file.

The format of the boundary string is defined by the MIME specification (RFC 2046), which the multipart/form-data format (RFC 7578) builds on, rather than by HTTP/1.1 itself. The boundary string must meet the following requirements:

- It must be 1 to 70 characters long, not counting the two leading dashes “--” that are put in front of it on every delimiter line in the body.

- It may contain letters, digits, spaces, and the punctuation characters '()+_,-./:=? but it must not end with a space.

- It must not appear anywhere in the actual data being transmitted.

- On the final delimiter line, it is followed by two more dashes “--” to mark the end of the body.
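To make the rules concrete, here is a minimal sketch of a boundary check. It follows the boundary grammar in RFC 2046 (1 to 70 characters drawn from letters, digits, space, and a small set of punctuation, with no trailing space). The class and method names are hypothetical and are not part of the original project.

```csharp
using System;

static class BoundaryRules
{
    // Punctuation that RFC 2046 allows in a boundary, besides letters, digits, and space.
    const string AllowedPunctuation = "'()+_,-./:=?";

    // Hypothetical helper: returns true when the boundary follows the RFC 2046 grammar.
    public static bool IsValidBoundary(string boundary)
    {
        // 1 to 70 characters, not counting the "--" that prefixes each delimiter line.
        if (string.IsNullOrEmpty(boundary) || boundary.Length > 70)
        {
            return false;
        }

        // The last character must not be a space.
        if (boundary[boundary.Length - 1] == ' ')
        {
            return false;
        }

        foreach (char c in boundary)
        {
            bool isAsciiLetterOrDigit =
                (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') || (c >= '0' && c <= '9');

            if (!isAsciiLetterOrDigit && c != ' ' && AllowedPunctuation.IndexOf(c) < 0)
            {
                return false;
            }
        }

        return true;
    }
}
```

A boundary made of dashes followed by hexadecimal digits passes this check, because both dashes and hexadecimal digits are in the allowed set.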

Apart from the two dashes that prefix each delimiter line, the boundary itself can contain as many dashes as we like, including none. A longer run of dashes simply makes the boundary easier to spot in the request body. To make the boundary unique, we append the current timestamp converted to hexadecimal.

Boundary = "----------------------------" + DateTime.Now.Ticks.ToString("x");
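The server can only split the body if it knows the boundary, and it learns it from the Content-Type header, as in the example request earlier. Below is a minimal sketch of that step using HttpWebRequest, which is available in .NET Framework 3.5. The class name, the method name, and the URL in the usage note are assumptions for illustration only.

```csharp
using System;
using System.Net;

static class RequestSketch
{
    // Hypothetical helper: prepares a POST request whose Content-Type declares the boundary.
    public static HttpWebRequest CreateMultipartRequest(string url, string boundary)
    {
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);
        request.Method = "POST";

        // The header carries the boundary as-is; the extra "--" prefix is
        // only added on the delimiter lines inside the body.
        request.ContentType = "multipart/form-data; boundary=" + boundary;

        return request;
    }
}
```

A call such as RequestSketch.CreateMultipartRequest("http://example.com/submit-form", Boundary) would then be followed by writing the parts to the stream returned by request.GetRequestStream().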

Non-Binary Fields

In the example above, besides sending the files to the server, we also have to send the data in JSON. Hence, we need code that generates the section in the following format.

----------------------------8db792beb8632a9

Content-Disposition: form-data; name="main_message"

{

"message": "...",

"documentType": "PERSONAL"

}

To do so, we have a form data template variable defined as follows.

static readonly string FormDataTemplate = "\r\n--{0}\r\nContent-Disposition: form-data; name=\"{1}\"\r\n\r\n{2}";
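As a rough sketch of how such a template can be used, the block below fills it with string.Format and writes the result to a stream as UTF-8. The template is repeated locally so that the block compiles by itself, and it is written without a trailing semicolon after the name parameter so that the output matches the section shown above. The class and method names are hypothetical.

```csharp
using System;
using System.IO;
using System.Text;

static class FormFieldSketch
{
    // Local copy of the template: {0} = boundary, {1} = field name, {2} = field value.
    static readonly string FormDataTemplate =
        "\r\n--{0}\r\nContent-Disposition: form-data; name=\"{1}\"\r\n\r\n{2}";

    // Hypothetical helper: writes one text field as a part of the multipart body.
    public static void WriteField(Stream stream, string boundary, string name, string value)
    {
        string part = string.Format(FormDataTemplate, boundary, name, value);
        byte[] bytes = Encoding.UTF8.GetBytes(part);
        stream.Write(bytes, 0, bytes.Length);
    }
}
```

The braces of the JSON value are safe here because string.Format only treats braces in the template itself as placeholders, not braces inside the arguments.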

Binary Fields

For the section containing a binary file, we need code that generates something as follows.

----------------------------8db792beb8632a9

Content-Disposition: form-data; name="attachments"; filename="picture.jpg"

Content-Type: application/octet-stream

Thus, we have the following variable defined.

static readonly string FileHeaderTemplate = "Content-Disposition: form-data; name=\"{0}\"; filename=\"{1}\"\r\nContent-Type: application/octet-stream\r\n\r\n";
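The header template does not include the delimiter line or the file bytes, so both have to be written around it. The following is a minimal sketch of one way to do that, assuming the delimiter is written with a leading CRLF in the same way as the form data template. The class name, method name, and buffer size are assumptions and not necessarily what the original project uses.

```csharp
using System;
using System.IO;
using System.Text;

static class FilePartSketch
{
    // Local copy of the template: {0} = field name, {1} = file name.
    static readonly string FileHeaderTemplate =
        "Content-Disposition: form-data; name=\"{0}\"; filename=\"{1}\"\r\n" +
        "Content-Type: application/octet-stream\r\n\r\n";

    // Hypothetical helper: writes the delimiter line, the part headers, and the raw file bytes.
    public static void WriteFilePart(
        Stream output, string boundary, string fieldName, string fileName, Stream fileContent)
    {
        string header = "\r\n--" + boundary + "\r\n" +
                        string.Format(FileHeaderTemplate, fieldName, fileName);
        byte[] headerBytes = Encoding.UTF8.GetBytes(header);
        output.Write(headerBytes, 0, headerBytes.Length);

        // The file content is copied as raw bytes, with no text encoding applied.
        byte[] buffer = new byte[4096];
        int count;
        while ((count = fileContent.Read(buffer, 0, buffer.Length)) != 0)
        {
            output.Write(buffer, 0, count);
        }
    }
}
```

For a list of files, the same method would be called once per file with the same field name, for example "attachments".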

Ending Boundary

The ending boundary is required in a multipart form data request to indicate the completion of the form data transmission. It serves as a signal to the server that there are no more parts to process and that the entire form data has been successfully transmitted.

The ending boundary is constructed in the same way as a regular delimiter line, but with two additional trailing dashes “--” at the end to indicate termination. Hence, we have the following code.

void WriteTrailer(Stream stream)

{

byte[] trailer = Encoding.UTF8.GetBytes("\r\n--" + Boundary + "--\r\n");

stream.Write(trailer, 0, trailer.Length);

}

Stream.CopyTo Method

As you may have noticed in our code, we have a method called CopyTo as shown below.

void CopyTo(Stream source, Stream destination, int bufferSize)

{

byte[] array = new byte[bufferSize];

int count;

while ((count = source.Read(array, 0, array.Length)) != 0)

{

destination.Write(array, 0, count);

}

}

We need this code because the built-in Stream.CopyTo method, which reads the bytes from the current stream and writes them to another stream, was only introduced in .NET Framework 4.0. Hence, we have to write our own CopyTo method.
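As a small usage sketch, the helper can be exercised with in-memory streams. The CopyTo method is repeated here so that the block is self-contained, while the class name and the ReadAll method are hypothetical additions for illustration.

```csharp
using System;
using System.IO;

static class CopySketch
{
    // Same loop as the article's CopyTo: read a chunk, write exactly the bytes that were read.
    public static void CopyTo(Stream source, Stream destination, int bufferSize)
    {
        byte[] array = new byte[bufferSize];
        int count;
        while ((count = source.Read(array, 0, array.Length)) != 0)
        {
            destination.Write(array, 0, count);
        }
    }

    // Hypothetical helper: drains a stream into a byte array.
    public static byte[] ReadAll(Stream source, int bufferSize)
    {
        MemoryStream buffer = new MemoryStream();
        CopyTo(source, buffer, bufferSize);
        return buffer.ToArray();
    }
}
```

Writing only count bytes matters because Read may fill less than the whole buffer, typically on the last chunk. If the project ever moves to .NET Framework 4.0 or later, the call can be replaced with the built-in source.CopyTo(destination, bufferSize).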

JSON Handling

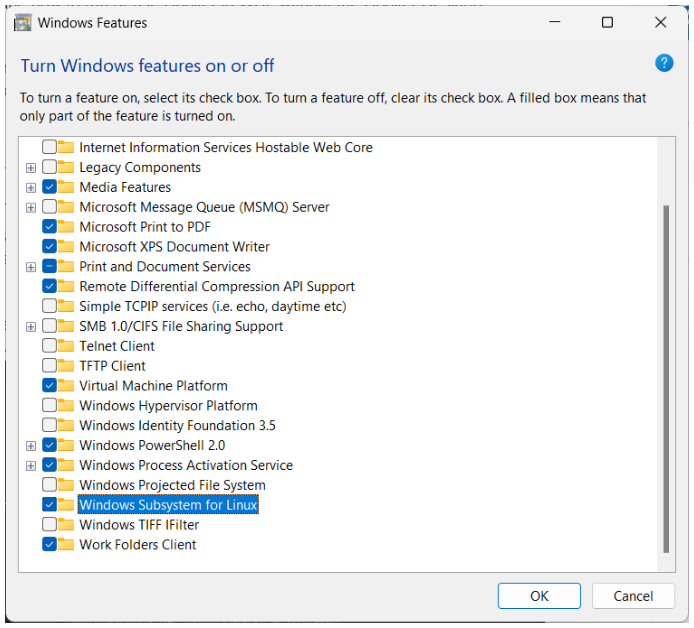

To handle the JSON object, the following items are all not available in a .NET Framework 3.5 project.

Hence, if we are not allowed to use any third-party JSON library such as Json.NET from Newtonsoft, then we have to build the JSON string ourselves in C#.

string mainMessage =

"{ " +

"\"message\": \"" + ConvertToUnicodeString(message) + "\", " +

"\"documentType\": \"PERSONAL\" " +

"}";

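The ConvertToUnicodeString method escapes every character of the message as a \uXXXX sequence. This lets us support Unicode characters in our JSON, and it also prevents quotes, backslashes, and control characters in the message from breaking the JSON string.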

private static string ConvertToUnicodeString(string text)

{

StringBuilder sb = new StringBuilder();

foreach (var c in text)

{

sb.Append("\\u" + ((int)c).ToString("X4"));

}

return sb.ToString();

}
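To see what the escaping produces, here is a small self-contained sketch that repeats the method and wraps the string concatenation in a helper. The class name and BuildMainMessage are hypothetical names used only for this illustration.

```csharp
using System;
using System.Text;

static class JsonEscapeSketch
{
    // Same approach as the article: every UTF-16 code unit becomes a \uXXXX escape.
    public static string ConvertToUnicodeString(string text)
    {
        StringBuilder sb = new StringBuilder();
        foreach (char c in text)
        {
            sb.Append("\\u" + ((int)c).ToString("X4"));
        }
        return sb.ToString();
    }

    // Hypothetical helper: builds the main_message JSON by plain string concatenation.
    public static string BuildMainMessage(string message)
    {
        return "{ " +
               "\"message\": \"" + ConvertToUnicodeString(message) + "\", " +
               "\"documentType\": \"PERSONAL\" " +
               "}";
    }
}
```

For example, BuildMainMessage("Hi") returns { "message": "\u0048\u0069", "documentType": "PERSONAL" }. A double quote in the message becomes \u0022, so it cannot close the JSON string early. Characters outside the Basic Multilingual Plane are emitted as two escapes, one per surrogate, which is how JSON represents them.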

Wrap-Up

This concludes the challenge my team lead presented to me before his resignation.

He did tell me that migrating the project to a modern version of .NET would require significant resources, including development time, training, and potential infrastructure changes. Hence, he foresaw that, with limited budgets, the team would have to prioritise other business needs over framework upgrades.

Quite some time has passed since I completed the challenge and my team lead resigned. With his departure in mind, I have written this blog post in the hope that it may help other developers facing similar difficulties in their careers.

The complete source code of this sample can be found on my GitHub project: https://github.com/goh-chunlin/gcl-boilerplate.csharp/tree/master/console-application/HelloWorld.MultipartForm.

References