We use Amazon S3 to store data for easy sharing among various applications. However, each application has its own requirements and might need a different view of the data. To solve this, we sometimes store additional customised copies of the same data, one for each application. This creates another problem, because we now need to maintain those additional datasets.

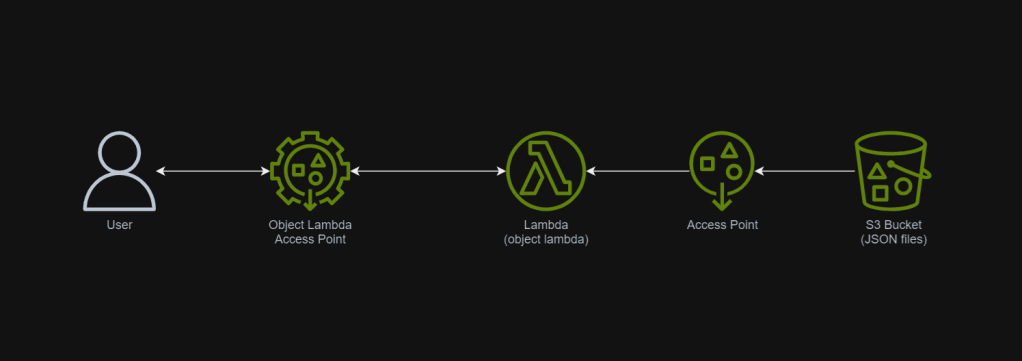

In March 2021, a new feature known as S3 Object Lambda was introduced. Similar to the idea of setting up a proxy layer in front of S3 to intercept and process data as it is requested, Object Lambda uses AWS Lambda functions to automatically process and transform your data as it is being retrieved from S3. With Object Lambda, we only need to change our apps to use the new S3 Object Lambda Access Point instead of the actual bucket name to retrieve data from S3.

Example: Turning JSON to Web Page with S3 Object Lambda

I have been keeping details of my visits to medical centres, as well as the treatments and medicines I received, in a JSON file. I will use this file to show how S3 Object Lambda can process data as it is being retrieved.

The JSON file looks something like the following.

```json
{
  "visits": [
    {
      "medicalCentreName": "Tan Tock Seng Hospital",
      "centreType": "hospital",
      "visitStartDate": {
        "year": 2024,
        "month": 3,
        "day": 24
      },
      "visitEndDate": {
        "year": 2024,
        "month": 4,
        "day": 19
      },
      "purpose": "",
      "treatments": [
        {
          "name": "Antibiotic Meixam(R) 500 Cloxacillin Sodium",
          "type": "medicine",
          "amount": "100ml per dose every 4 hours",
          "startDate": {
            "year": 2024,
            "month": 3,
            "day": 26
          },
          "endDate": {
            "year": 2024,
            "month": 4,
            "day": 19
          }
        },
        ...
      ]
    },
    ...
  ]
}
```

In this article, I will show the steps I took to set up the S3 Object Lambda architecture for this use case.

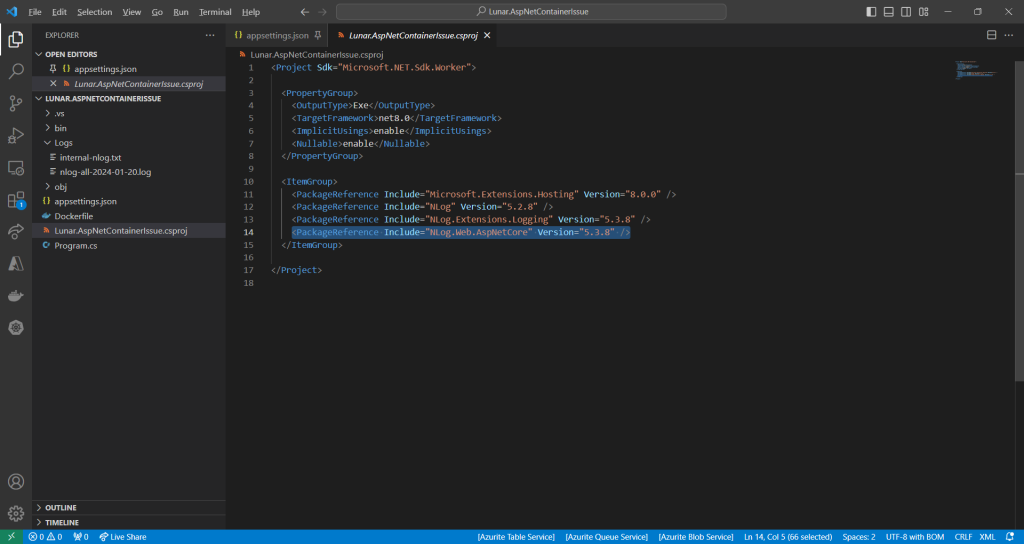

Step 1: Building the Lambda Function

Before we begin, note that the maximum duration of a Lambda function used by S3 Object Lambda is 60 seconds.

We need a Lambda Function to do the data format transformation from JSON to HTML. To keep things simple, we will be developing the Function using Python 3.12.

We can start with the code below. It does three things: it sets up logging, it reads the JSON file from the S3 bucket, and it returns a response (for now, an empty page) through write_get_object_response.

```python
import json
import os
import logging
from types import SimpleNamespace
from urllib import request

import boto3

logger = logging.getLogger()
logger.addHandler(logging.StreamHandler())
logger.setLevel(getattr(logging, os.getenv('LOG_LEVEL', 'INFO')))

s3_client = boto3.client('s3')

def lambda_handler(event, context):
    object_context = event["getObjectContext"]

    # Get the presigned URL to fetch the requested original object from S3
    s3_url = object_context["inputS3Url"]

    # Extract the route and request token from the input context
    request_route = object_context["outputRoute"]
    request_token = object_context["outputToken"]

    # Get the original S3 object using the presigned URL
    req = request.Request(s3_url)
    try:
        response = request.urlopen(req)
        responded_json = response.read().decode()
    except Exception as err:
        logger.error(f'Exception reading S3 content: {err}')
        # Report the failure to the caller through S3 Object Lambda
        # instead of returning without sending any response
        s3_client.write_get_object_response(
            StatusCode = 500,
            ErrorCode = 'OriginalObjectReadFailed',
            ErrorMessage = 'Could not read the original object.',
            RequestRoute = request_route,
            RequestToken = request_token)
        return {'status_code': 500}

    # Parse the JSON so that fields can be read as attributes (e.g. visit.purpose)
    json_object = json.loads(responded_json, object_hook=lambda d: SimpleNamespace(**d))
    visits = json_object.visits

    html = ''

    # Send the transformed content back as the GetObject response
    s3_client.write_get_object_response(
        Body = html,
        ContentType = 'text/html',
        RequestRoute = request_route,
        RequestToken = request_token)

    return {'status_code': 200}
```

Step 1.1: Getting the JSON File with Presigned URL

The event that an Object Lambda function receives has a property called getObjectContext. Its inputS3Url field is a presigned URL of the requested object in S3.

By default, all S3 objects are private, so a Lambda function normally needs S3 read permissions to retrieve them. With the presigned URL, however, the function can get the object without those permissions.

In the code above, we retrieve the JSON file from S3 using its presigned URL. We then parse the content with json.loads(), passing SimpleNamespace as the object_hook so that every JSON object becomes a Python object whose fields can be accessed as attributes. The variable visits therefore holds all the visits from the original JSON file.
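To see what the object_hook does, here is a small standalone sketch using a trimmed-down sample in the same shape as the JSON file above (the values are copied from it; the empty treatments list is a simplification). Because json.loads calls the hook for every decoded JSON object, nested objects such as visitStartDate also become SimpleNamespace instances and can be read with dot notation.

```python
import json
from types import SimpleNamespace

# Trimmed-down sample with the same structure as the medical records file
sample = """
{
  "visits": [
    {
      "medicalCentreName": "Tan Tock Seng Hospital",
      "centreType": "hospital",
      "visitStartDate": {"year": 2024, "month": 3, "day": 24},
      "visitEndDate": {"year": 2024, "month": 4, "day": 19},
      "purpose": "",
      "treatments": []
    }
  ]
}
"""

# object_hook runs on every JSON object (innermost first), so nested
# dictionaries are converted to SimpleNamespace as well
records = json.loads(sample, object_hook=lambda d: SimpleNamespace(**d))

visits = records.visits
first = visits[0]
print(first.medicalCentreName)     # Tan Tock Seng Hospital
print(first.visitStartDate.month)  # 3
```

JSON arrays stay ordinary Python lists, so visits can be iterated or indexed as usual.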

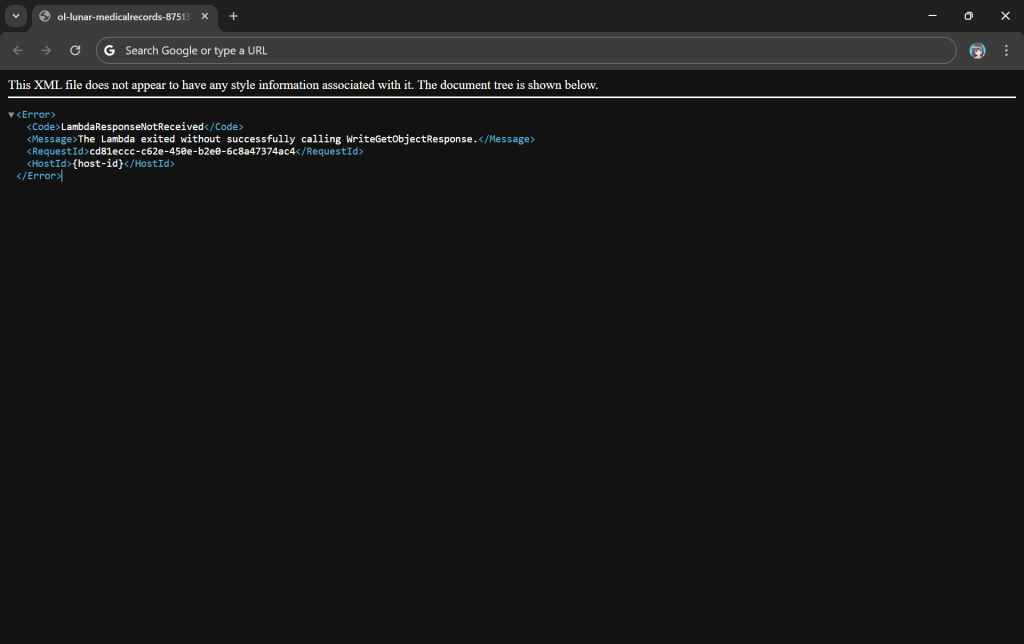

Step 1.2: Call WriteGetObjectResponse

Since the purpose of Object Lambda is to process and transform our data as it is being retrieved from S3, the Function must return the transformed object to the caller of the GetObject operation by calling write_get_object_response. Without this call, the request fails with an error complaining that the response is missing.

The method write_get_object_response requires two parameters, RequestRoute and RequestToken. Both are available in the getObjectContext property, under the names outputRoute and outputToken respectively.

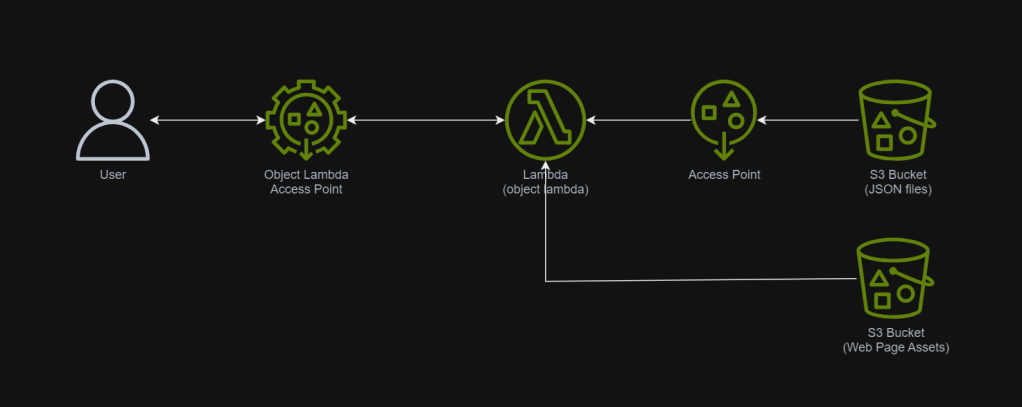

Step 1.3: Get the HTML Template from S3

To keep our Lambda code clean, we will not write the entire HTML in it. Instead, we keep a template of the web page in another S3 bucket.

The architecture above is therefore extended with a second S3 bucket, which provides the web page template and other necessary static assets.

We then replace the line html = '' in the earlier code with the Python code below.

```python
template_response = s3_client.get_object(
    Bucket = 'lunar.medicalrecords.static',
    Key = 'medical-records.html'
)
template_object_data = template_response['Body'].read()
template_content = template_object_data.decode('utf-8')

dynamic_table = f"""
<table class="table accordion">
    <thead>
        <tr>
            <th scope="col">#</th>
            <th scope="col">Medical Centre</th>
            <th scope="col">From</th>
            <th scope="col">To</th>
            <th scope="col">Purpose</th>
        </tr>
    </thead>
    <tbody>
        ...
    </tbody>
</table>"""

html = template_content.replace('{{DYNAMIC_TABLE}}', dynamic_table)
```
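The table rows inside <tbody> are elided above. As one possible way to fill them, the hypothetical helper build_visit_rows below renders one row per visit, matching the five header columns. It assumes dates should be shown as YYYY-MM-DD, and it escapes free-text fields so that characters such as < in a centre name cannot break the page.

```python
from html import escape
from types import SimpleNamespace


def format_date(d):
    # Dates in the source JSON look like {"year": ..., "month": ..., "day": ...}
    return f"{d.year:04d}-{d.month:02d}-{d.day:02d}"


def build_visit_rows(visits):
    """Hypothetical helper: render one <tr> per visit for the <tbody>."""
    rows = []
    for index, visit in enumerate(visits, start=1):
        rows.append(
            "<tr>"
            f'<th scope="row">{index}</th>'
            f"<td>{escape(visit.medicalCentreName)}</td>"
            f"<td>{format_date(visit.visitStartDate)}</td>"
            f"<td>{format_date(visit.visitEndDate)}</td>"
            f"<td>{escape(visit.purpose)}</td>"
            "</tr>"
        )
    return "\n".join(rows)


# Example with a single visit shaped like the JSON file above
visit = SimpleNamespace(
    medicalCentreName="Tan Tock Seng Hospital",
    visitStartDate=SimpleNamespace(year=2024, month=3, day=24),
    visitEndDate=SimpleNamespace(year=2024, month=4, day=19),
    purpose="",
)
print(build_visit_rows([visit]))
```

Importing escape directly, rather than the html module, avoids a clash with the html variable used in the Lambda handler.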

Step 2: Give Lambda Function Necessary Permissions

Based on the setup above, our Lambda function needs the following permissions.

- s3-object-lambda:WriteGetObjectResponse

- s3:GetObject
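As a sketch, assuming an inline policy on the function's execution role, the two permissions could be expressed as the policy document below. Limiting s3:GetObject to the template bucket lunar.medicalrecords.static is an assumption based on Step 1.3; the JSON file itself is read through the presigned URL, so it needs no extra permission.

```python
import json

# Hypothetical inline policy for the Object Lambda function's execution role
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Allows the function to return the transformed object
            "Effect": "Allow",
            "Action": "s3-object-lambda:WriteGetObjectResponse",
            "Resource": "*",
        },
        {
            # Allows the function to read the HTML template (Step 1.3)
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::lunar.medicalrecords.static/*",
        },
    ],
}

print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM console, or passed as PolicyDocument to boto3's iam.put_role_policy together with the role and policy names of your choice.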

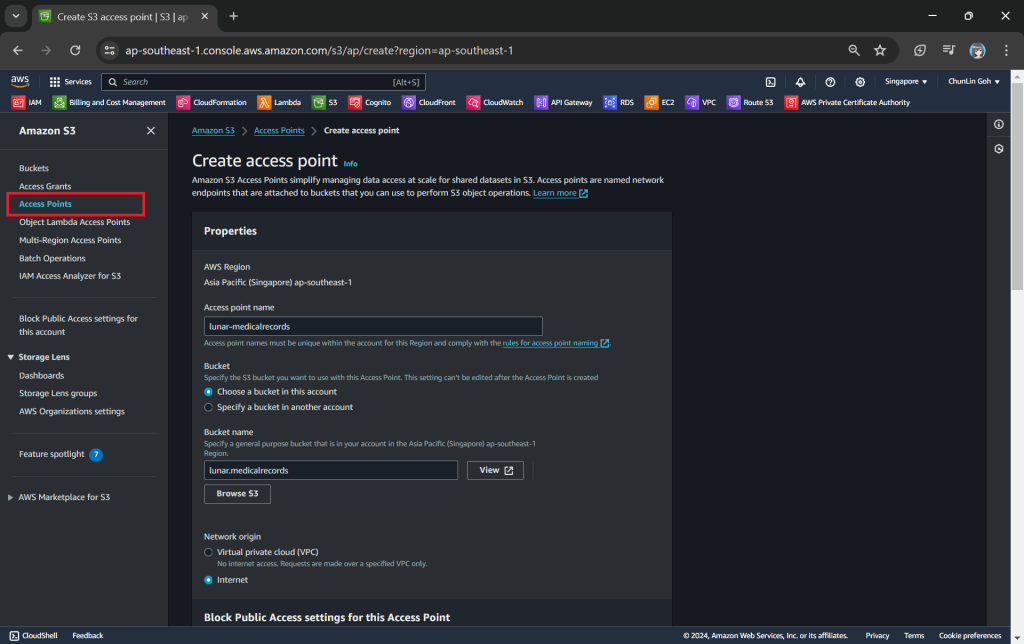

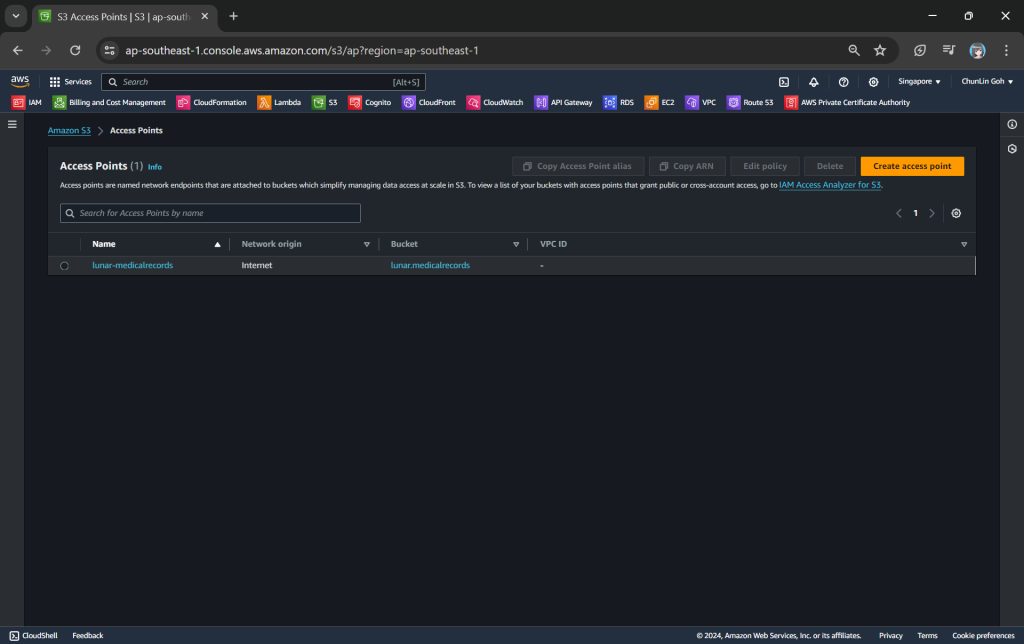

Step 3: Create S3 Access Point

Next, we need to create an S3 Access Point. It is required later to create the S3 Object Lambda Access Point.

One feature of S3 Access Points is that a name only has to be unique within an account and Region. For example, as shown in the screenshot below, we could have a “lunar-medicalrecords” access point in every account and Region.

When creating the access point, we specify the bucket that we want to use with it; the bucket must reside in the same Region as the access point. In addition, since we are not restricting access to a specific VPC in our case, we choose “Internet” for the “Network origin” field.

We keep all other settings at their defaults and choose the “Create access point” button.
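The same access point can also be created programmatically. The sketch below assumes a boto3 S3 Control client is passed in, for example boto3.client('s3control', region_name='ap-southeast-1'); the Region is an assumption taken from the Cognito domain used later. Leaving out VpcConfiguration corresponds to the “Internet” network origin.

```python
def create_supporting_access_point(s3control, account_id, name, bucket):
    """Create an S3 Access Point with an Internet network origin.

    s3control is expected to be a boto3 "s3control" client. No
    VpcConfiguration is passed, so the access point is not limited to a VPC.
    """
    response = s3control.create_access_point(
        AccountId=account_id,
        Name=name,
        Bucket=bucket,
    )
    # The ARN is what the Object Lambda Access Point needs in the next step
    return response["AccessPointArn"]
```

Usage, with a placeholder account ID and a placeholder bucket name (the article does not name the bucket that stores the JSON files): create_supporting_access_point(boto3.client('s3control'), '123456789012', 'lunar-medicalrecords', 'example-medical-records-bucket').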

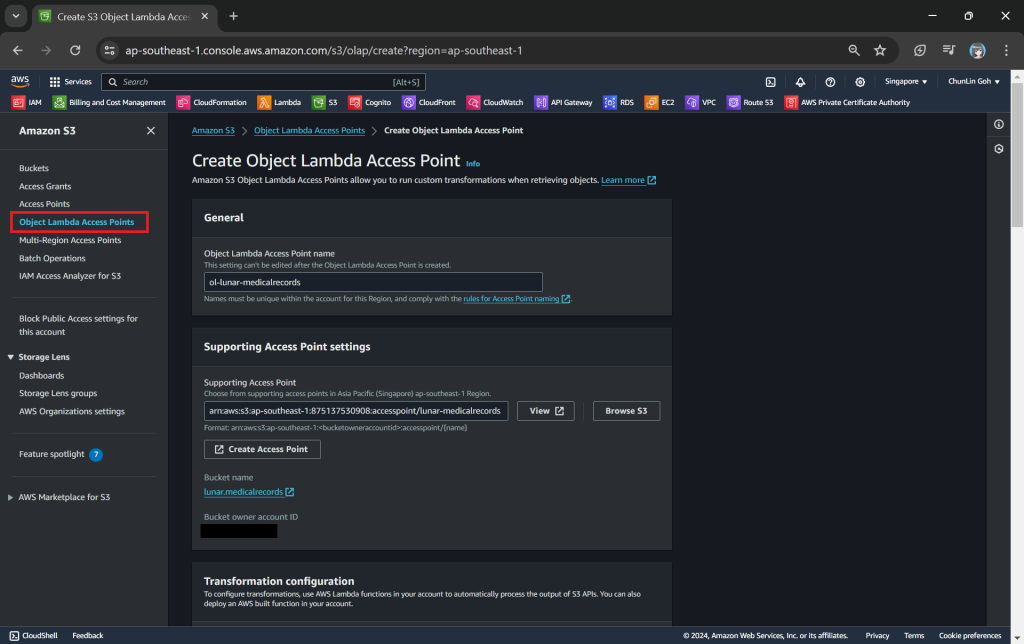

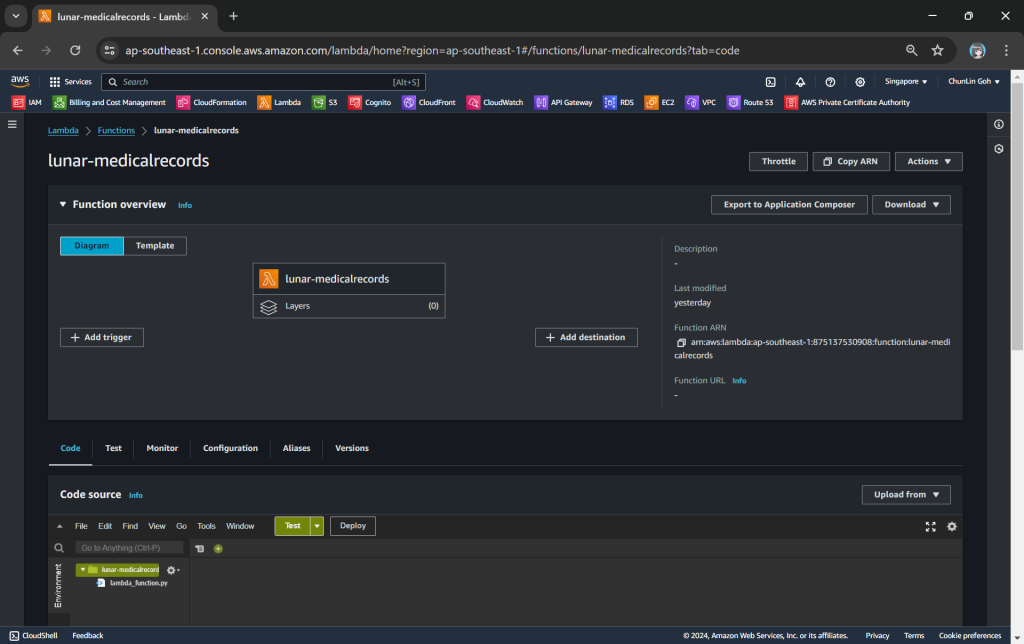

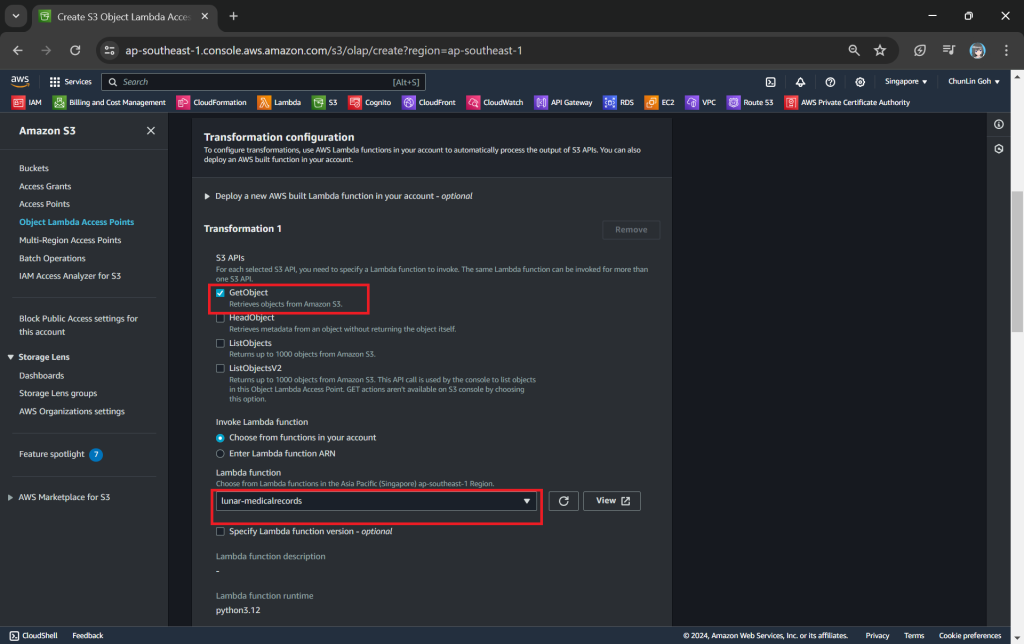

Step 4: Create S3 Object Lambda Access Point

With the S3 Access Point set up, we can move on to create our S3 Object Lambda Access Point. This is the access point that our app will actually use to access the JSON file in our S3 bucket, and it should return an HTML document generated by the Object Lambda function that we built in Step 1.

On the Object Lambda Access Point creation page, after giving it a name, we need to provide the Supporting Access Point, specified as the Amazon Resource Name (ARN) of the S3 Access Point that we created in Step 3. Note that the Object Lambda Access Point and the Supporting Access Point must be in the same Region.

Next, we set up the transformation configuration. Since our Lambda function transforms the JSON file as it is retrieved from the S3 bucket, we choose GetObject as the S3 API, as shown in the screenshot below.

Once all these fields are keyed in, we can proceed to create the Object Lambda Access Point.
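For reference, a programmatic sketch of the same configuration is below, assuming a boto3 S3 Control client is passed in. It mirrors the console choices: the supporting access point ARN from Step 3, GetObject as the transformed API, and the Lambda function from Step 1.

```python
def create_object_lambda_access_point(s3control, account_id, name,
                                      supporting_access_point_arn,
                                      function_arn):
    """Create an Object Lambda Access Point that transforms GetObject calls.

    s3control is expected to be a boto3 "s3control" client.
    """
    response = s3control.create_access_point_for_object_lambda(
        AccountId=account_id,
        Name=name,
        Configuration={
            # ARN of the S3 Access Point from Step 3 (same Region)
            "SupportingAccessPoint": supporting_access_point_arn,
            "TransformationConfigurations": [
                {
                    # Only GetObject requests go through the function
                    "Actions": ["GetObject"],
                    "ContentTransformation": {
                        "AwsLambda": {"FunctionArn": function_arn}
                    },
                }
            ],
        },
    )
    return response["ObjectLambdaAccessPointArn"]
```

The access point name and Lambda function ARN you pass in are your own; the article does not show the exact name of the transformation function.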

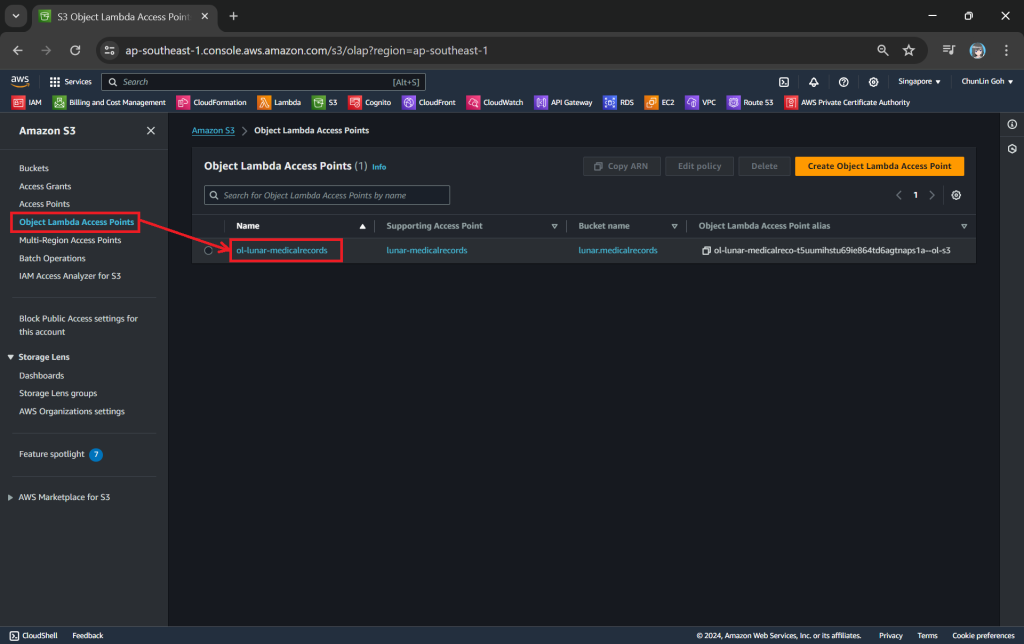

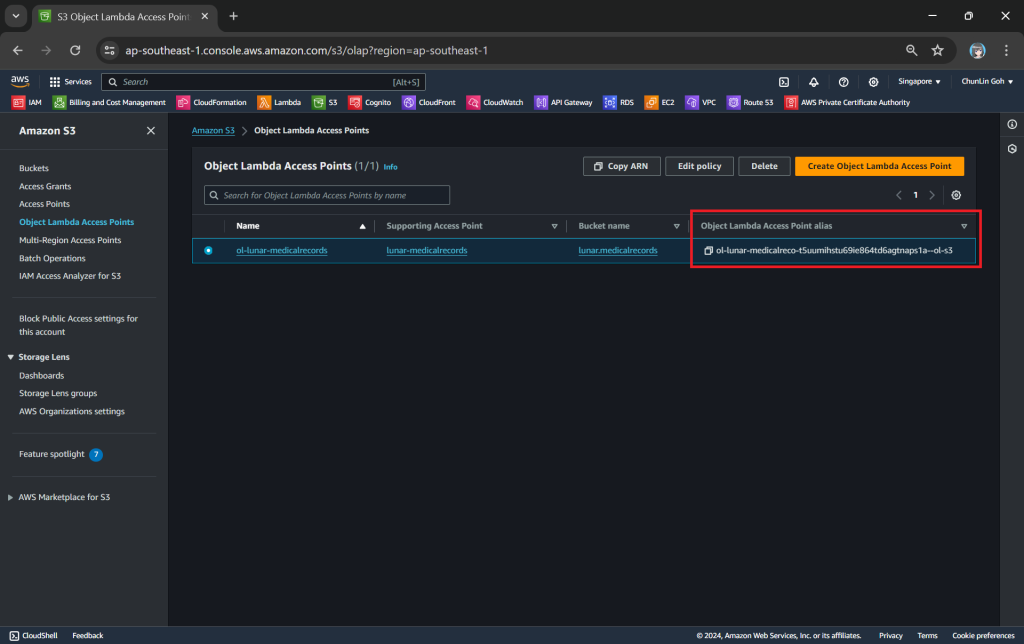

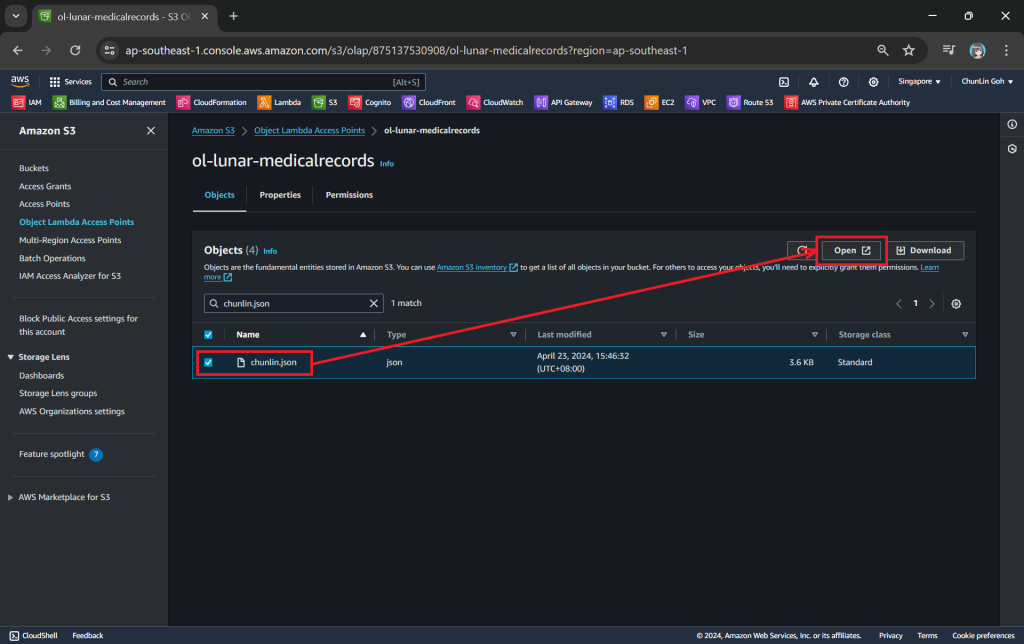

Now, we will access the JSON file via the Object Lambda Access Point to verify that the file is really transformed into a web page during the request. First, we select the newly created Object Lambda Access Point, as shown in the following screenshot.

Second, we search for our JSON file, for example chunlin.json in my case, and click the “Open” button to view it. I named the JSON file containing my medical records after my username because later I will add authentication and authorisation, so that users can only retrieve their own JSON file based on their login username.

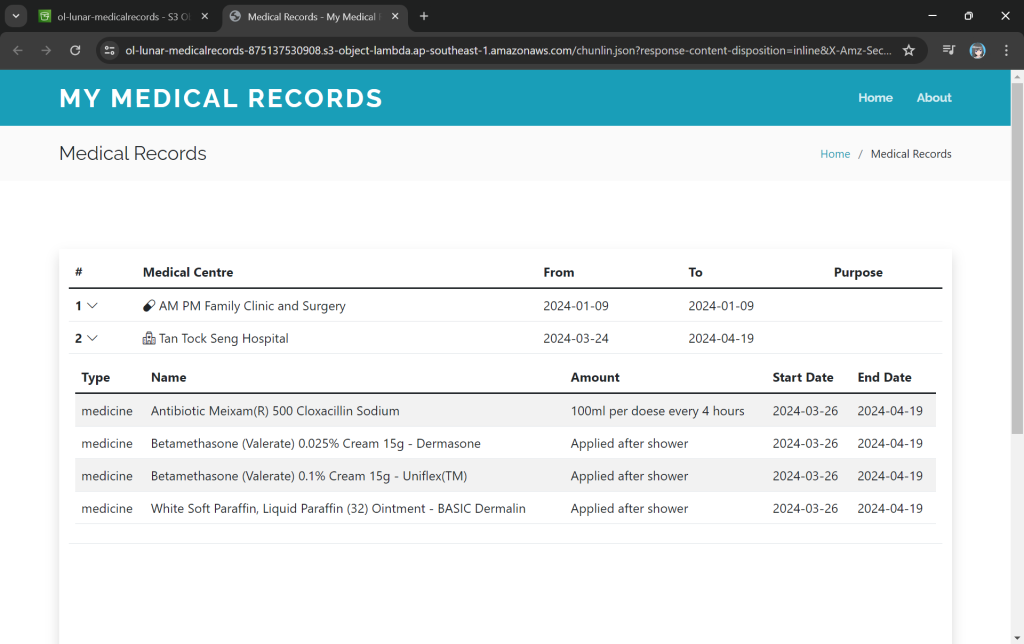

A new tab opens showing the web page, as demonstrated in the screenshot below. Notice that the URL still points to the JSON file, but the returned content is an HTML web page.

Using the Object Lambda Access Point from Our App

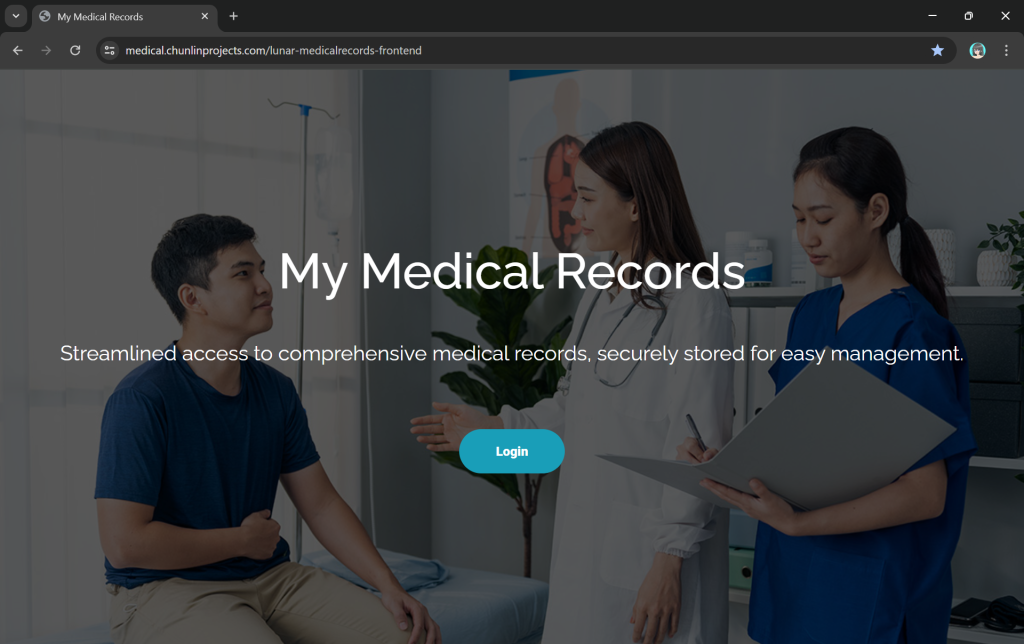

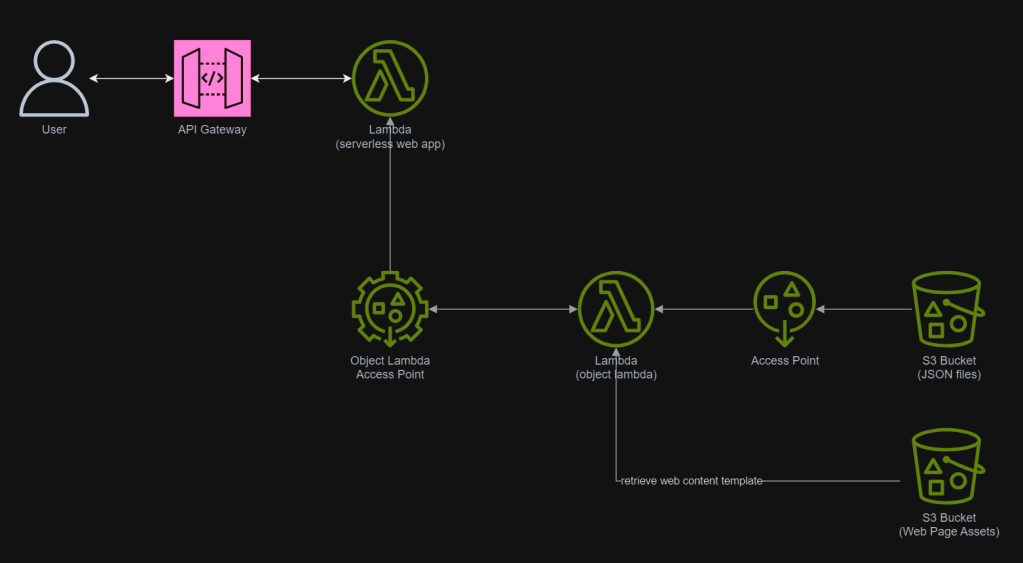

With the Object Lambda Access Point successfully set up, we will now show how to use it. To keep things simple for the purposes of this article, I will host a serverless web app on Lambda to serve the medical records website above.

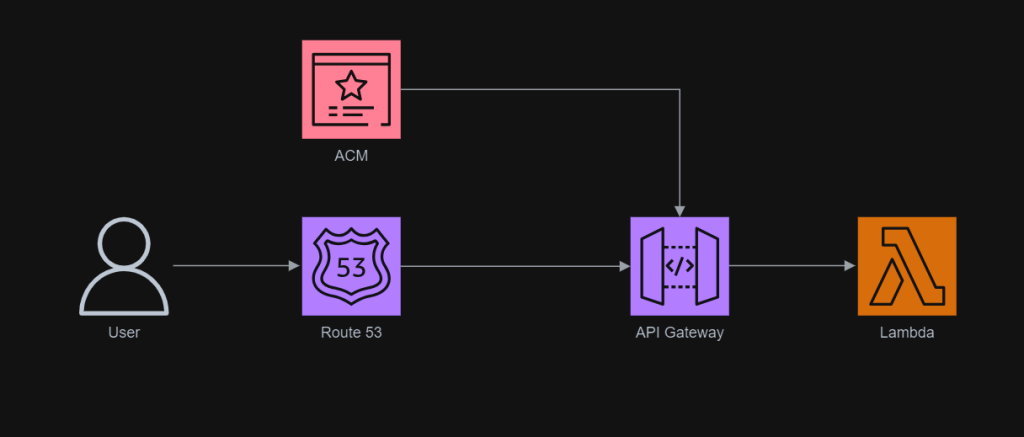

In addition, since Lambda functions are not accessible from the Internet by default, we will use API Gateway to get a custom HTTP endpoint that invokes our Lambda function. The architecture diagram now looks as follows.

We develop this new Lambda function, named lunar-medicalrecords-frontend, in Python 3.12 as well. It uses the following code to retrieve the HTML content from the Object Lambda Access Point.

```python
import json
import os
import logging

import boto3

logger = logging.getLogger()
logger.addHandler(logging.StreamHandler())
logger.setLevel(getattr(logging, os.getenv('LOG_LEVEL', 'INFO')))

s3_client = boto3.client('s3')

def lambda_handler(event, context):
    try:
        # Object Lambda Access Point alias, used in place of a bucket name
        bucket_name = 'ol-lunar-medicalreco-t5uumihstu69ie864td6agtnaps1a--ol-s3'
        object_key = 'chunlin.json'

        # The request goes through S3 Object Lambda, so the body is the generated HTML
        response = s3_client.get_object(
            Bucket=bucket_name,
            Key=object_key
        )
        object_data = response['Body'].read()
        object_string = object_data.decode('utf-8')

        return {
            'statusCode': 200,
            'body': object_string,
            'headers': {
                'Content-Type': 'text/html'
            }
        }
    except Exception as err:
        return {
            'statusCode': 500,
            'body': json.dumps(str(err))
        }
```

As shown in the code above, we still use the same S3 client function, get_object, to retrieve the JSON file chunlin.json. However, instead of a bucket name, we pass the Object Lambda Access Point alias, which can be found on the S3 Object Lambda Access Points listing page.

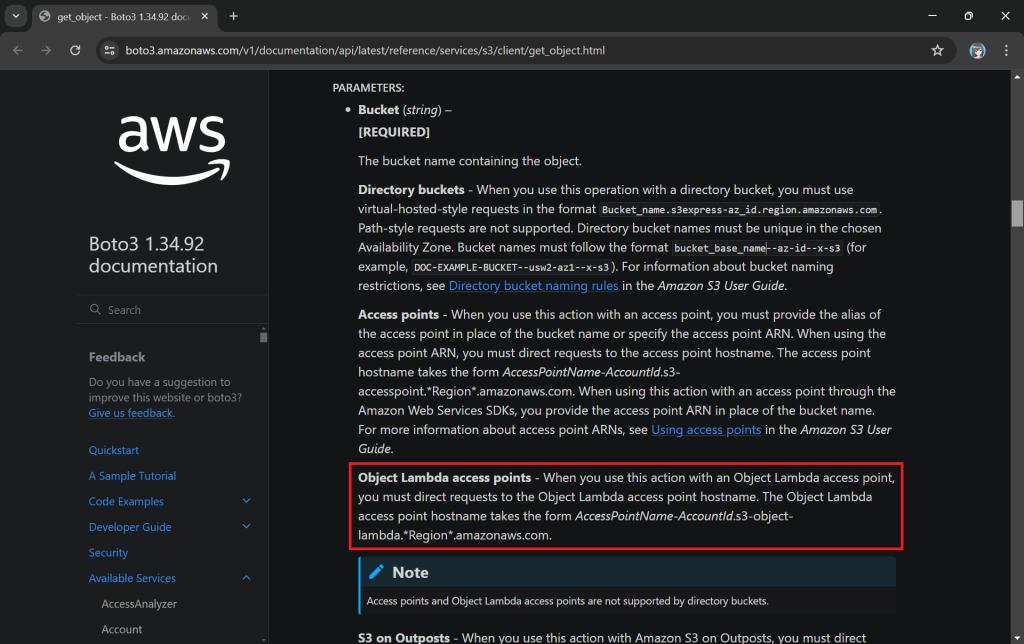

Refer to the Boto3 get_object documentation for more details about its Bucket parameter.

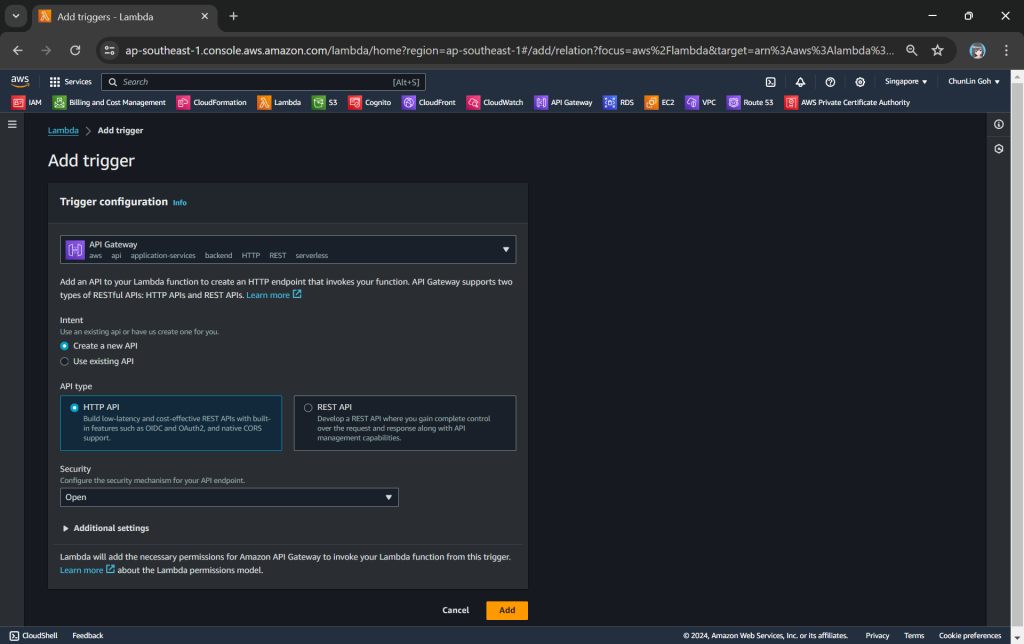

The API Gateway for the Lambda function is created as an HTTP API through the “Add Trigger” option on the function overview page. For the Security field, we choose “Open” for now; we will add the login functionality later.

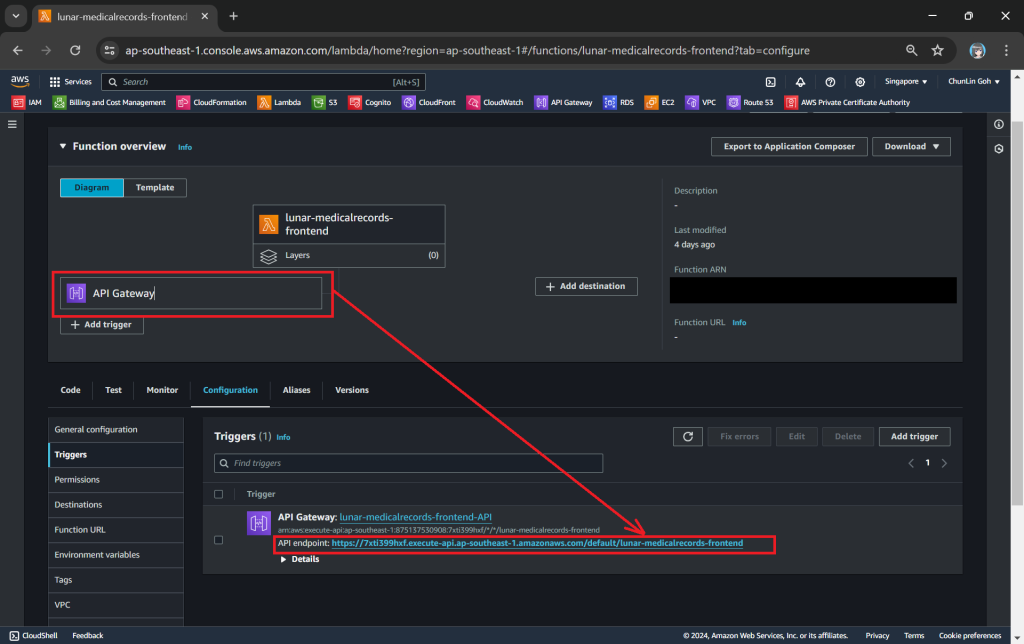

Once this is done, we are given an API Gateway endpoint, as shown in the screenshot below. Visiting the endpoint should render the same web page listing the medical records that we saw above.

Finally, for the Lambda function permissions, we only need to grant it the following.

- s3:GetObject.

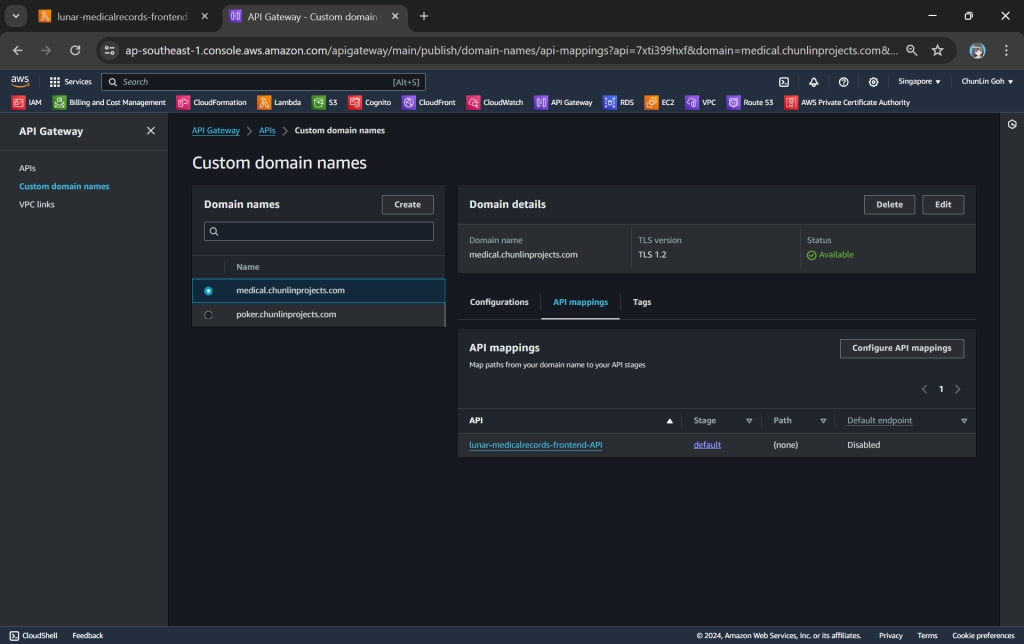

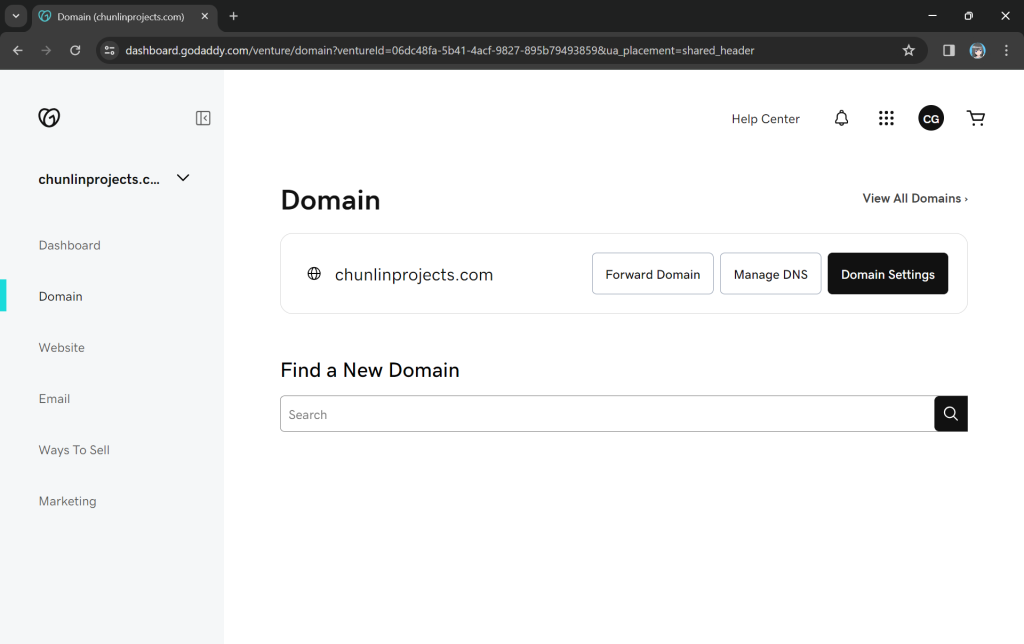

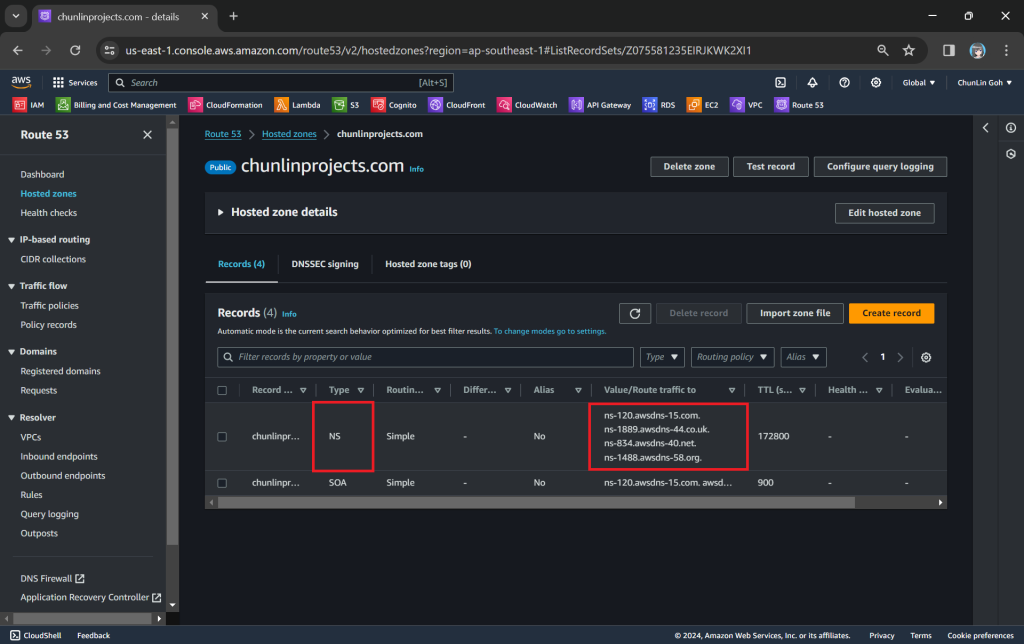

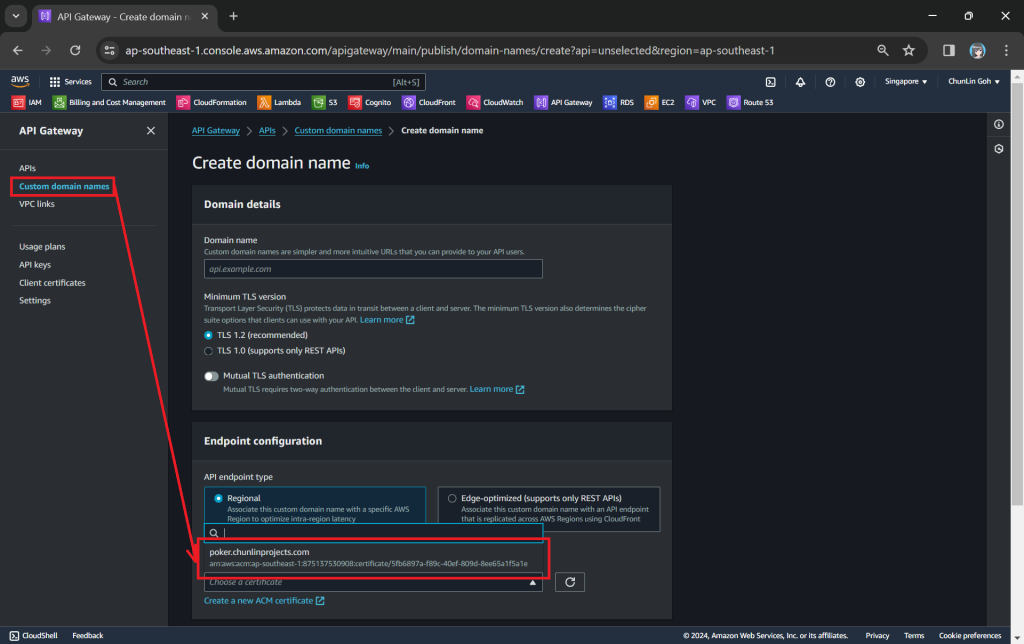

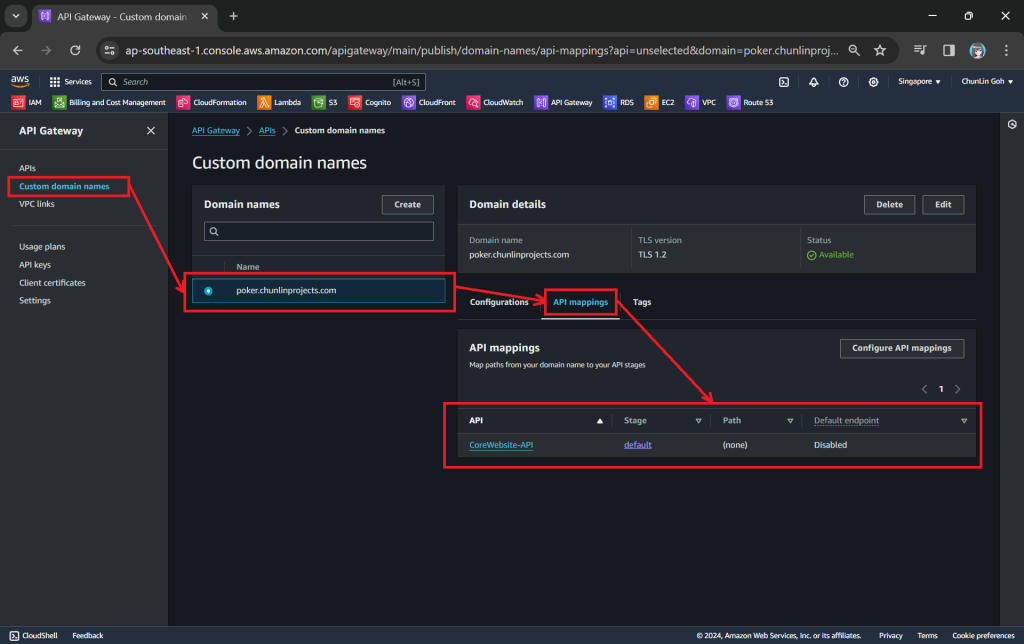

To make the API Gateway endpoint look more user-friendly, we can also add a custom domain to the API Gateway, following the guide in one of our earlier posts.

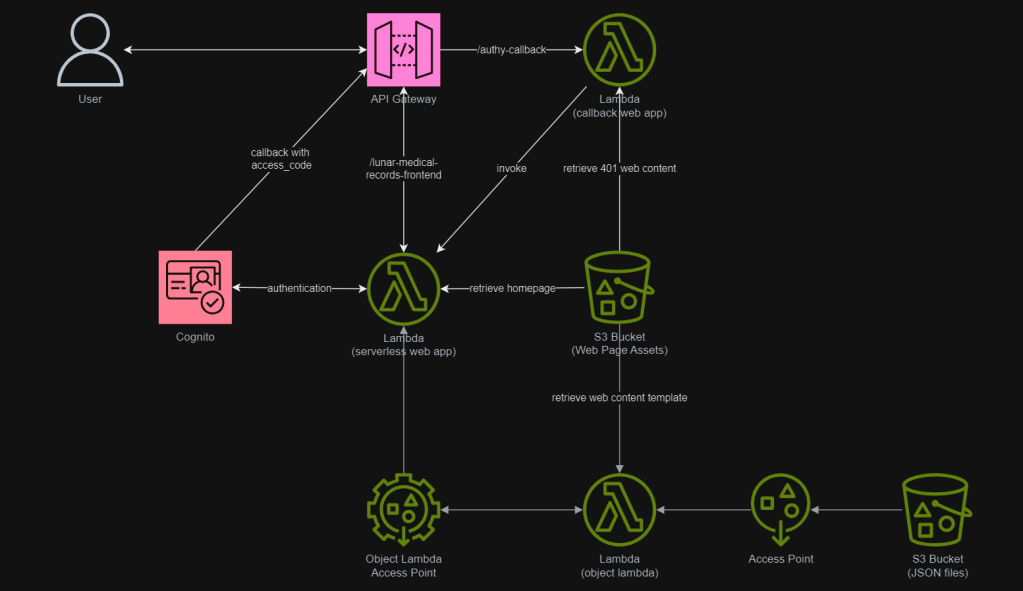

Protecting Data with Cognito

To ensure that only authenticated and authorised users can access their own medical records, we need to securely control access to our app with the help of Amazon Cognito. Cognito is a service that lets us add user sign-in and access control to our apps quickly and easily, so it can authenticate and authorise users before they access the medical records.

Step 1: Set Up Amazon Cognito

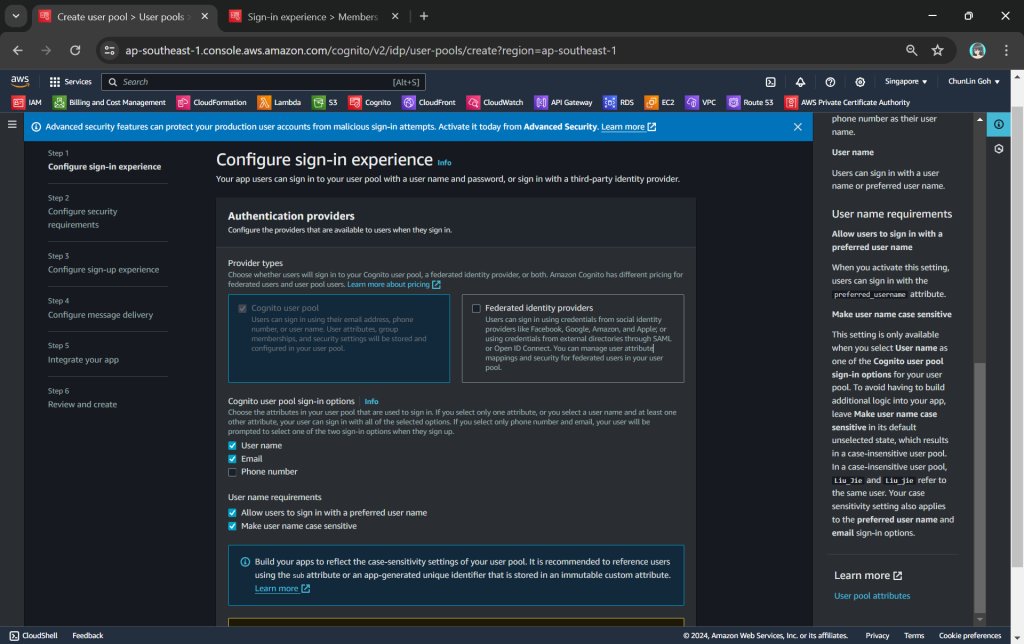

To set up Cognito, we first configure the user pool by specifying the sign-in options. A user pool is a managed user directory that provides authentication and user management capabilities for our apps, allowing us to offload the complexity of user authentication and management to AWS.

Note that the sign-in options of a Cognito user pool cannot be changed after the user pool has been created, so choose them carefully.

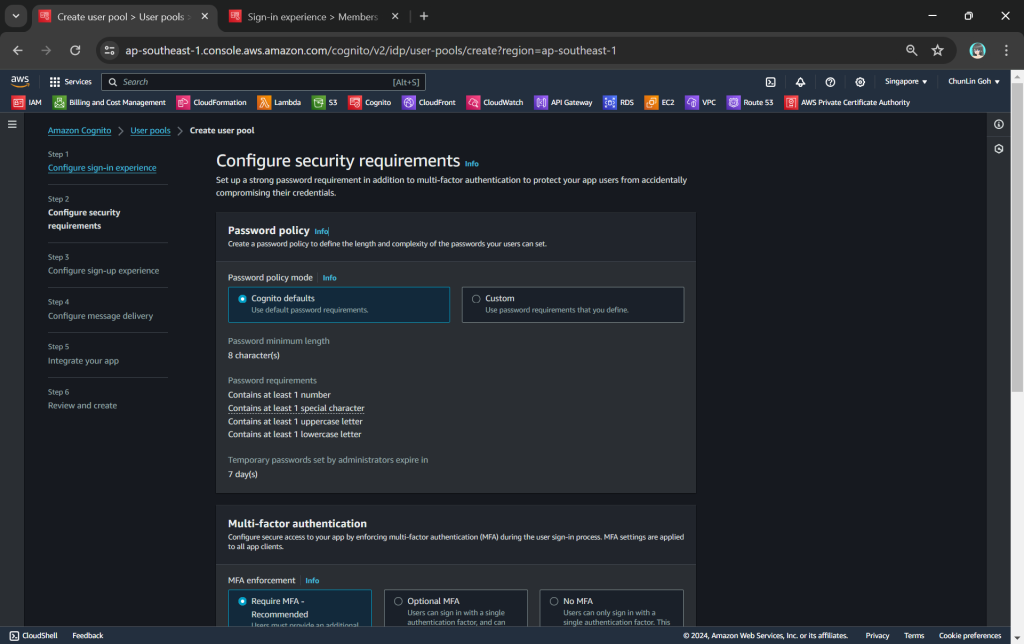

Secondly, we need to configure password policy and choose whether to enable Multi-Factor Authentication (MFA).

By default, Cognito comes with a password policy that requires passwords of a minimum length and complexity. For users created by an administrator, it also generates a temporary password that, by default, expires in 7 days.

MFA adds an extra layer of security to the authentication process by requiring users to provide additional verification factors to gain access to their accounts. This reduces the risk of unauthorised access due to compromised passwords.

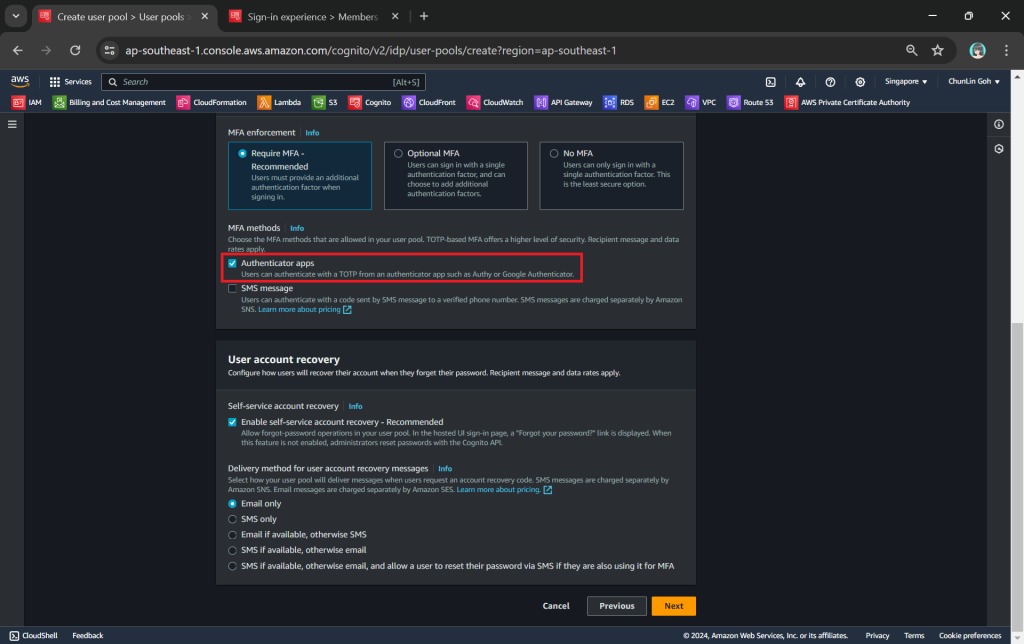

As shown in the screenshot above, one of the methods is called TOTP. TOTP stands for Time-Based One-Time Password. It is a form of multi-factor authentication (MFA) where a temporary passcode is generated by the authenticator app, adding a layer of security beyond the typical username and password.
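Both the authenticator app and the server derive the passcode from a shared secret and the current time. The standard algorithm (RFC 6238, built on the HOTP algorithm of RFC 4226) can be sketched in Python as below. This is a generic illustration of TOTP, not Cognito's internal code, and it assumes the common defaults of HMAC-SHA1, 30-second time steps, and 6 digits. Authenticator apps usually receive the shared secret as Base32 text, hence the decode helper.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Return an RFC 6238 time-based one-time password (HMAC-SHA1)."""
    if for_time is None:
        for_time = time.time()
    # Counter = number of whole time steps since the Unix epoch
    counter = int(for_time // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): read 4 bytes at an offset given by
    # the low 4 bits of the last byte, then drop the sign bit
    offset = mac[-1] & 0x0F
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def decode_base32_secret(secret_code: str) -> bytes:
    """Decode a Base32 shared secret, adding any missing '=' padding."""
    cleaned = secret_code.replace(" ", "").upper()
    return base64.b32decode(cleaned + "=" * (-len(cleaned) % 8))


# RFC 6238 test key (ASCII "12345678901234567890") at T = 59 seconds
key = decode_base32_secret("GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ")
print(totp(key, for_time=59, digits=8))  # 94287082
```

Because the code only changes every 30 seconds, verifiers commonly also accept the code for the adjacent time step to tolerate clock drift.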

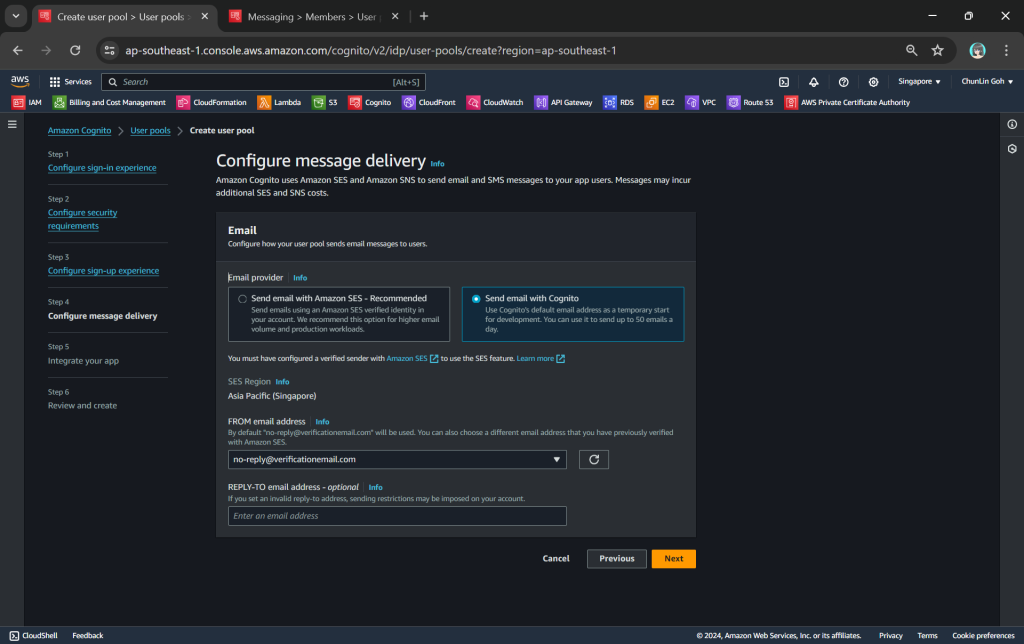

Thirdly, we will be configuring Cognito to allow user account recovery as well as new user registration. Both of these by default require email delivery. For example, when users request an account recovery code, an email with the code should be sent to the user. Also, when there is a new user signing up, there should be emails sent to verify and confirm the new account of the user. So, how do we handle the email delivery?

Ideally, we should set up Amazon SES (Simple Email Service), the email sending service provided by AWS, to deliver these emails. For testing purposes, however, we can use the Cognito default email address instead. This approach is normally only suitable for development, because it can send only up to 50 emails a day.

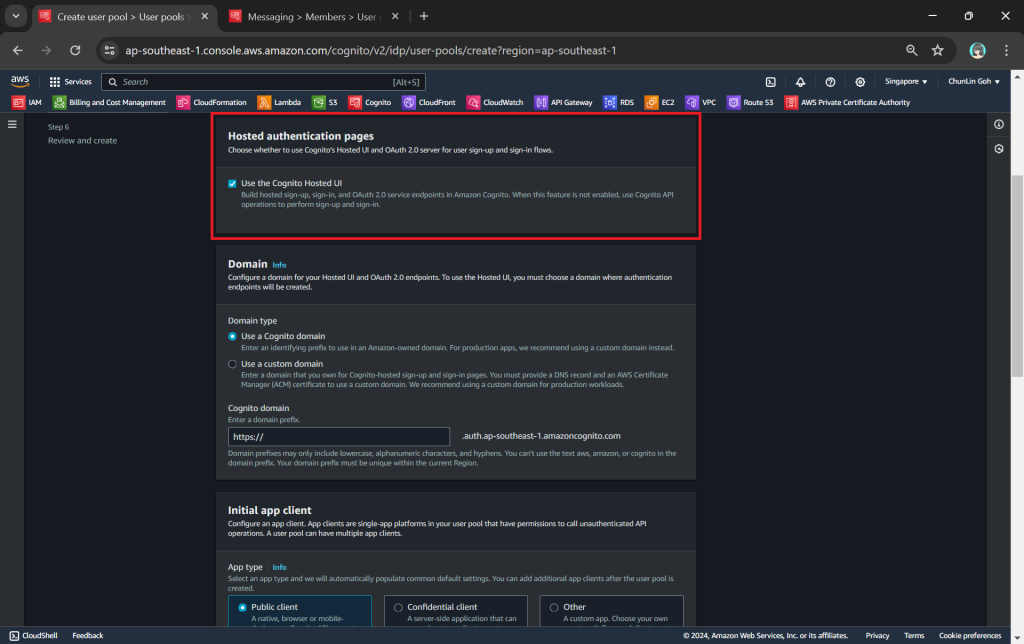

Finally, we will be using the hosted authentication pages for user sign-in and sign-up, as demonstrated below.

Step 2: Register Our Web App in Cognito

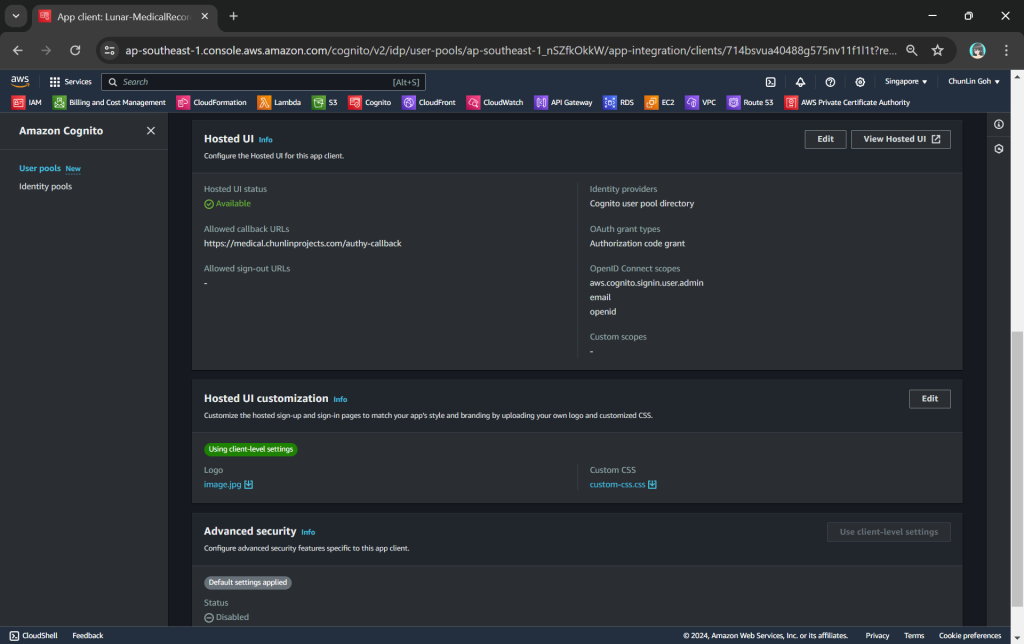

To integrate our app with Cognito, we still need to set up an app client. An app client is a configuration entity that allows our app to interact with the user pool; it defines how users of that specific app authenticate and interact with the user pool. For example, we have set up a new app client for our medical records app, as shown in the following screenshot.

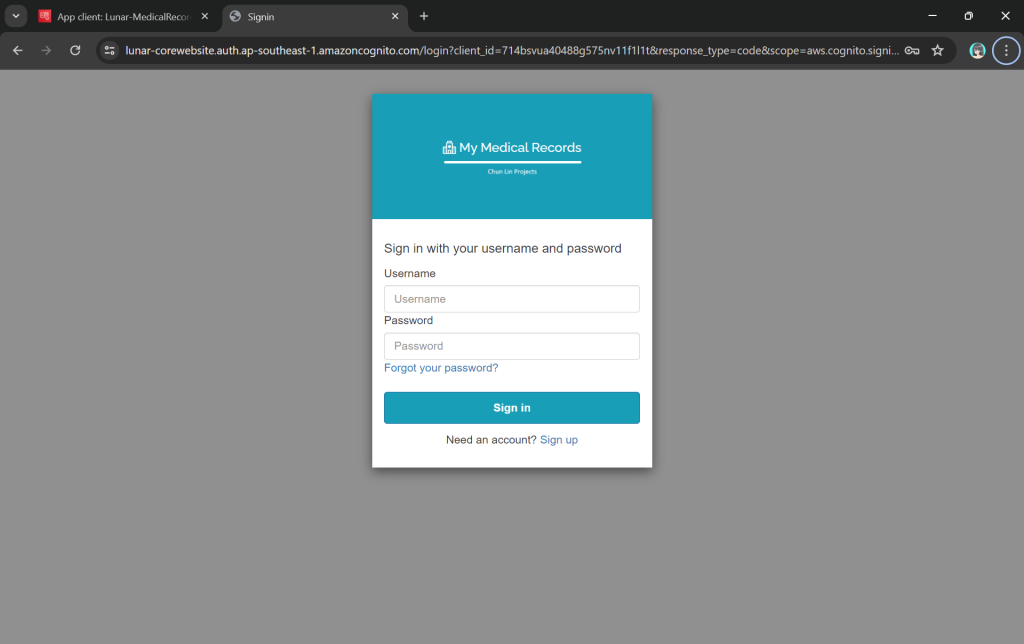

As shown in the screenshot above, we are able to specify customisation settings for the built-in hosted UI. Note that we can only customise the look and feel of the default “login box”; we cannot modify the layout of the entire hosted UI web page, as demonstrated below.

In the app client setup above, we configured the callback URL as /authy-callback. So where does this lead? It points to a new Lambda function that is in charge of authentication.

Step 3: Retrieve Access Token from Cognito Token Endpoint

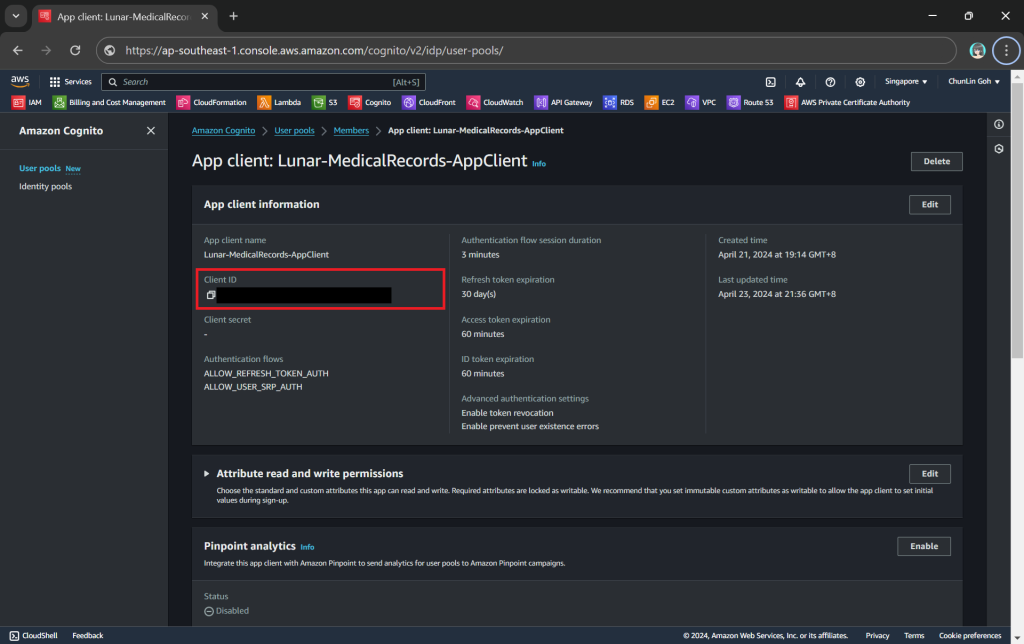

Here, Cognito uses the OAuth 2.0 authorisation code grant flow. After successful authentication, Cognito redirects the user back to the specified callback URL with an authorisation code in the query string parameter named code. Our authentication Lambda function then needs to make a back-end request to the Cognito token endpoint, including the authorisation code, client ID, and redirect URI, to exchange the authorisation code for an access token, a refresh token, and an ID token.

```python
import json
import urllib3

# Part of lambda_handler(event, context) in the authentication Lambda function.
# Cognito appends the authorisation code to the callback URL as ?code=...
auth_code = event['queryStringParameters']['code']

token_url = "https://lunar-corewebsite.auth.ap-southeast-1.amazoncognito.com/oauth2/token"
client_id = "<client ID to be found in AWS Console>"
# Must match the callback URL configured in the app client
callback_url = "https://medical.chunlinprojects.com/authy-callback"

params = {
    "grant_type": "authorization_code",
    "client_id": client_id,
    "code": auth_code,
    "redirect_uri": callback_url
}

# Exchange the authorisation code for tokens with a form-encoded POST
http = urllib3.PoolManager()
tokens_response = http.request_encode_body(
    "POST",
    token_url,
    encode_multipart = False,
    fields = params,
    headers = {'Content-Type': 'application/x-www-form-urlencoded'})

token_data = tokens_response.data
tokens = json.loads(token_data)
```

As shown in the code above, the token endpoint URL for a Cognito user pool domain follows this structure:

https://<your-domain>.auth.<region>.amazoncognito.com/oauth2/token
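The same request can be assembled with the standard library alone. The hypothetical helper below builds the token URL from the structure above and the form-encoded body. It also covers a case the article's app client does not need: when an app client is created with a client secret, the token endpoint expects the client ID and secret in an HTTP Basic Authorization header.

```python
import base64
from urllib.parse import urlencode


def build_token_request(domain, region, client_id, auth_code, redirect_uri,
                        client_secret=None):
    """Hypothetical helper: return (url, headers, body) for the code exchange."""
    url = f"https://{domain}.auth.{region}.amazoncognito.com/oauth2/token"
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    body = urlencode({
        "grant_type": "authorization_code",
        "client_id": client_id,
        "code": auth_code,
        "redirect_uri": redirect_uri,
    })
    if client_secret is not None:
        # Clients with a secret authenticate as "Basic base64(id:secret)"
        credentials = f"{client_id}:{client_secret}".encode("utf-8")
        headers["Authorization"] = "Basic " + base64.b64encode(credentials).decode("ascii")
    return url, headers, body
```

The returned values can be sent with any HTTP client, for example urllib3's request method with body=body and headers=headers.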

A successful response from the token endpoint is typically a JSON object that includes:

- access_token: Used to access protected resources;

- id_token: Contains identity information about the user;

- refresh_token: Used to obtain new access tokens;

- expires_in: Lifetime of the access token in seconds.

We can therefore retrieve the medical records when an access_token is returned, and return an “HTTP 401 Unauthorized” response otherwise.

```python
if 'access_token' not in tokens:
    return {
        'statusCode': 401,
        'body': get_401_web_content(),
        'headers': {
            'Content-Type': 'text/html'
        }
    }
else:
    access_token = tokens['access_token']
    return {
        'statusCode': 200,
        'body': get_web_content(access_token),
        'headers': {
            'Content-Type': 'text/html'
        }
    }
```

The function get_401_web_content is responsible for retrieving a static web page with a 401 error message from the S3 bucket and returning it to the frontend, as shown in the code below.

```python
def get_401_web_content():
    bucket_name = 'lunar.medicalrecords.static'
    object_key = '401.html'

    response = s3_client.get_object(
        Bucket=bucket_name,
        Key=object_key
    )
    object_data = response['Body'].read()
    content = object_data.decode('utf-8')

    return content
```

Step 4: Retrieve Content Based on Username

For the get_web_content function, we will be passing the access token to the Lambda that we developed earlier to retrieve the HTML content from the Object Lambda Access Point. As shown in the following code, we invoke the Lambda function synchronously and wait for the response.

```python
import json

import boto3

lambda_client = boto3.client('lambda')

def get_web_content(access_token):
    useful_tokens = {
        'access_token': access_token
    }

    # Invoke lunar-medicalrecords-frontend synchronously and wait for its response
    lambda_response = lambda_client.invoke(
        FunctionName='lunar-medicalrecords-frontend',
        InvocationType='RequestResponse',
        Payload=json.dumps(useful_tokens)
    )

    lambda_response_payload = lambda_response['Payload'].read().decode('utf-8')
    web_content = (json.loads(lambda_response_payload))['body']

    return web_content
```

In the Lambda function lunar-medicalrecords-frontend, we no longer need to hardcode the object key as chunlin.json. Instead, we retrieve the username from Cognito using the access token, through the get_user call in the code below.

```python
...
import boto3

cognito_idp_client = boto3.client('cognito-idp')

def lambda_handler(event, context):
    if 'access_token' not in event:
        return {
            'statusCode': 200,
            'body': get_homepage_web_content(),
            'headers': {
                'Content-Type': 'text/html'
            }
        }
    else:
        try:
            # Look up the signed-in user from the access token
            cognito_response = cognito_idp_client.get_user(AccessToken = event['access_token'])
            username = cognito_response['Username']

            bucket_name = 'ol-lunar-medicalreco-t5uumihstu69ie864td6agtnaps1a--ol-s3'
            # Each user's records are stored as <username>.json
            object_key = f'{username}.json'
            ...
        except Exception as err:
            return {
                'statusCode': 500,
                'body': json.dumps(str(err))
            }
```

The get_homepage_web_content function above simply retrieves a static homepage from the S3 bucket, in the same way as the get_401_web_content function.

Step 5: Store Access Token in Cookies

Note that the auth_code above, the authorisation code in the OAuth 2.0 authorisation code grant flow, can only be used once. Making the code single-use prevents replay attacks, in which an attacker who intercepts the authorisation code tries to use it multiple times to obtain tokens. Hence, our implementation above breaks if we refresh the web page after logging in, because the refresh sends the already-used code again.

To solve this issue, we will be saving the access token in a cookie when the user first signs in. After that, as long as we detect that there is a valid access token in the cookie, we will not use the auth_code.

In order to save an access token in a cookie, there are several important considerations to ensure security and proper functionality:

- Set the Secure attribute to ensure the cookie is only sent over HTTPS connections. This helps protect the token from being intercepted during transmission;

- Use the HttpOnly attribute to prevent client-side scripts from accessing the cookie. This helps mitigate the risk of cross-site scripting (XSS) attacks;

- Set an appropriate expiration time for the cookie. Since access tokens typically have a short lifespan, ensure the cookie does not outlive the token’s validity.
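Python's http.cookies module can produce a Set-Cookie value with these attributes and parse the cookies that API Gateway forwards. The sketch below is an alternative to building the header string by hand; the helper names are hypothetical, and SameSite=Lax is an extra attribute not discussed above.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime
from http.cookies import SimpleCookie


def build_access_token_cookie(access_token, expires_in, now=None):
    """Hypothetical helper: return a Set-Cookie value for the access token."""
    if now is None:
        now = datetime.now(timezone.utc)
    cookie = SimpleCookie()
    cookie["access_token"] = access_token
    morsel = cookie["access_token"]
    morsel["path"] = "/"
    morsel["secure"] = True      # only sent over HTTPS
    morsel["httponly"] = True    # hidden from client-side JavaScript
    morsel["samesite"] = "Lax"   # extra hardening, an assumption
    # Expire the cookie together with the token (expires_in is in seconds)
    morsel["max-age"] = expires_in
    morsel["expires"] = format_datetime(now + timedelta(seconds=expires_in), usegmt=True)
    return morsel.OutputString()


def read_access_token(cookie_headers):
    """Hypothetical helper: find access_token in event['cookies'] (a list)."""
    cookie = SimpleCookie()
    for header in cookie_headers:
        cookie.load(header)
    morsel = cookie.get("access_token")
    return morsel.value if morsel else None
```

The now parameter must be a UTC datetime, because format_datetime with usegmt=True rejects other time zones; it also makes the helper easy to test with a fixed time.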

Thus the code at Step 3 above can be improved as follows.

```python
from datetime import datetime, timedelta, timezone

def lambda_handler(event, context):
    now = datetime.now(timezone.utc)

    # Reuse an access token saved in a cookie by an earlier sign-in.
    # HTTP API (payload format 2.0) passes cookies as a list in event['cookies'].
    access_token = None
    for cookie in event.get('cookies', []):
        if cookie.startswith('access_token='):
            access_token = cookie[len('access_token='):]
            break

    if access_token:
        return {
            'statusCode': 200,
            'headers': {
                'Content-Type': 'text/html'
            },
            'body': get_web_content(access_token)
        }

    # No saved token: exchange the single-use auth_code as in Step 3
    ...

    if 'access_token' not in tokens:
        ...
    else:
        access_token = tokens['access_token']
        # Expire the cookie together with the access token
        cookies_expiry = now + timedelta(seconds=tokens['expires_in'])

        return {
            'statusCode': 200,
            'headers': {
                'Content-Type': 'text/html',
                'Set-Cookie': f'access_token={access_token}; path=/; secure; httponly; expires={cookies_expiry.strftime("%a, %d %b %Y %H:%M:%S")} GMT'
            },
            'body': get_web_content(access_token)
        }
```

With this change, we can safely refresh the web page without reusing the same auth_code.

Wrap-Up

In summary, the following diagram shows the infrastructure that we have gone through above.

References

- Introducing Amazon S3 Object Lambda – Use Your Code to Process Data as It Is Being Retrieved from S3;

- Amazon S3 Object Lambda;

- Using Amazon S3 Object Lambda to Dynamically Watermark Images as They Are Retrieved;

- Boto3: S3 Client get_object;

- Boto3: S3 Client write_get_object_response;

- AWS Lambda: How To Remove Environmental Variables from Configuration;

- How to use the code returned from Cognito to get AWS credentials?;

- Cognito AssociateSoftwareToken: token does not have required scopes.