There is a popular yet simple checklist for judging how good a software team is. It comes from Joel Spolsky, who was the CEO of Stack Overflow until 2019, and it is called the Joel Test. The test has only 12 items, but 7 of them are related to DevOps, debugging, and testing.

Software testing checks that the software does what it is supposed to do and surfaces the problems and errors found in it. Hence, testing as early and as frequently as possible is key to building quality software that will be accepted by customers or clients.

There are many topics I’d love to cover about testing. However, in this article, I will only focus on my recent learning about setting up automated GUI testing for my UWP program on Windows 10.

Appium

One of the key challenges in testing a UWP app is GUI testing. In the early stages, it’s possible to do that manually by clicking around the app. However, as the app grows larger, testing it manually becomes very time-consuming. After I shared my thoughts with my senior Riza Marhaban, he introduced me to a test automation framework called Appium.

What is Appium? Appium is an open source test automation framework for iOS, Android, and Windows apps. It says Windows apps rather than UWP apps because, besides UWP, it can be used to test WPF apps as well.

Together with Windows Application Driver (WinAppDriver), which supports Appium by using new APIs added in the Windows 10 Anniversary Update, we can automate GUI tests for Windows apps. The following video demonstrates the results of GUI testing with Appium in my demo project Lunar.Paint.Uwp.

Here are some great tutorials about automated GUI testing of UWP apps using Appium that rank at the top of Google Search:

- Automated UI Testing of a UWP App using Appium by Yassine Benabbas (May 2016);

- UI Testing for Windows Apps with WinAppDriver and Appium by Matteo Pagani (August 2019);

- WinAppDriver – Test Any App with Appium’s Selenium-like Tests on Windows by Scott Hanselman (November 2016);

- Testing the UI of Windows Apps with Appium by Bruno Sossino (March 2017);

- Testing Your Windows App with Appium in Windows 10 and Visual Studio 2015 by Jeremy Lindsay (November 2016).

Some of them were written about four years ago when Barack Obama was still the President of the USA. In addition, none of them continues the story with DevOps. Hence, my article here will talk about GUI testing with Appium from the beginning of a new UWP project until it gets built on Azure DevOps.

Getting Started with Windows Template Studio for UWP

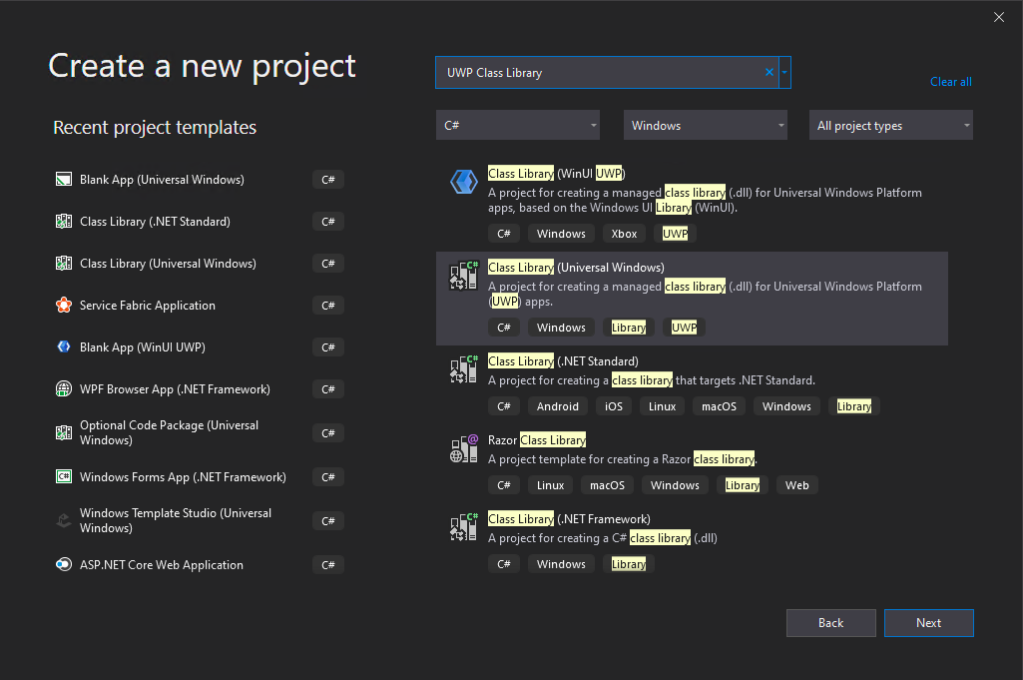

Here, I will start a new UWP project using Windows Template Studio.

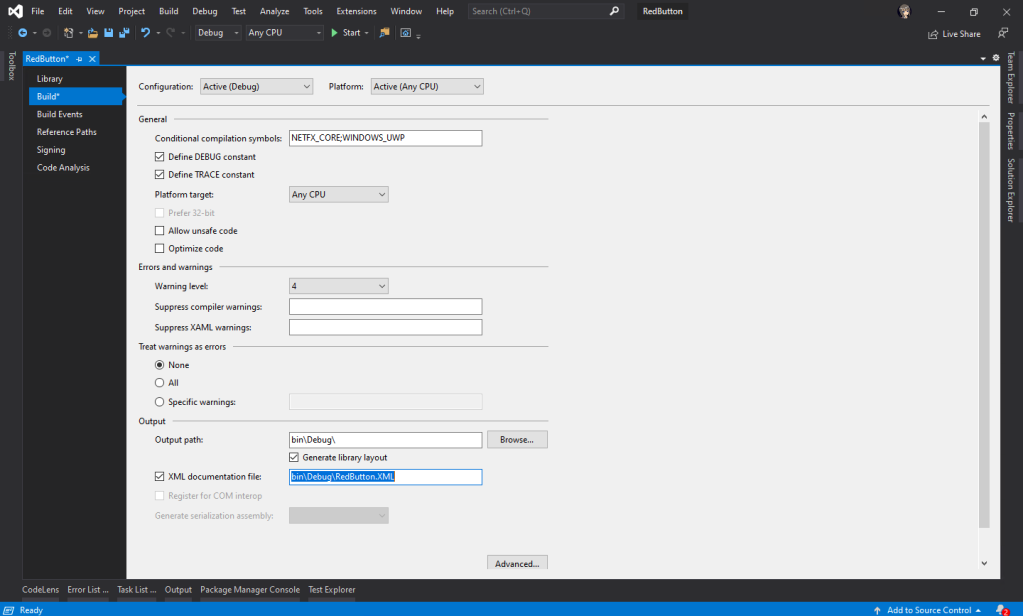

There is one section in the project configuration called Testing, as shown in the screenshot above. In order to use Appium, we need to add the testing with Win App Driver feature. After that, we shall see a Test Project suffixed with “Tests.WinAppDriver” being added.

By default, the test project already comes with the necessary NuGet packages, such as Appium.WebDriver and MSTest.

Writing GUI Test Cases: Setup

The test project comes with a file called BasicTest.cs. In the file, there are two important variables, i.e. WindowsApplicationDriverUrl and AppToLaunch.

The WindowsApplicationDriverUrl points to the WinAppDriver server, which we will install later. Normally we don’t need to change it because the default value is “http://127.0.0.1:4723”.

The AppToLaunch variable is the one we need to change. Here, we need to replace the part before “!App” with the Package Family Name, which can be found in the Packaging tab of the UWP app manifest, as shown in the screenshot below.

Take note that there is a comment right above the AppToLaunch variable. It says, “The app must also be installed (or launched for debugging) for WinAppDriver to be able to launch it.” This is a very important line. It means that when we are testing locally, we need to make sure the latest build of our UWP app is deployed locally. It also means that the UWP app needs to be available on the Build Agent, which we will talk about in a later part of this article.
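To make the two variables concrete, here is a minimal sketch of how a test class might open a session. It assumes MSTest and the Appium.WebDriver 4.x API (AppiumOptions and WindowsDriver<WindowsElement>). The class name SessionSketch and the package family name placeholder are hypothetical, and the BasicTest.cs generated by Windows Template Studio may differ in detail.

```csharp
using System;
using Microsoft.VisualStudio.TestTools.UnitTesting;
using OpenQA.Selenium.Appium;
using OpenQA.Selenium.Appium.Windows;

[TestClass]
public class SessionSketch
{
    // Default address that WinAppDriver listens on.
    private const string WindowsApplicationDriverUrl = "http://127.0.0.1:4723";

    // Placeholder: replace the part before "!App" with the Package Family Name
    // shown in the Packaging tab of the UWP app manifest.
    private const string AppToLaunch = "<PackageFamilyName>!App";

    protected static WindowsDriver<WindowsElement> AppSession;

    [ClassInitialize]
    public static void Setup(TestContext context)
    {
        // WinAppDriver must already be running, and the app must be deployed,
        // otherwise creating the session throws.
        var options = new AppiumOptions();
        options.AddAdditionalCapability("app", AppToLaunch);
        AppSession = new WindowsDriver<WindowsElement>(
            new Uri(WindowsApplicationDriverUrl), options);
    }

    [TestMethod]
    public void SessionIsCreated()
    {
        // A session id is only assigned once the app has been launched.
        Assert.IsNotNull(AppSession.SessionId);
    }

    [ClassCleanup]
    public static void TearDown()
    {
        // Quit closes the app window and ends the WinAppDriver session.
        AppSession?.Quit();
        AppSession = null;
    }
}
```

If the session cannot be created, the first things to check are whether WinAppDriver is running at that URL and whether the latest build of the app has been deployed.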

I will not go through how to write the test cases in detail, as they are available in my GitHub project: https://github.com/goh-chunlin/Lunar.Paint.Uwp/tree/master/Lunar.Paint.Uwp.Tests.WinAppDriver. Instead, I will highlight a few important points here.

Writing GUI Test Cases: AccessibilityId

In the test cases, to identify the GUI element in the program, we need to use

AppSession.FindElementByAccessibilityId(<The AccessibilityId of the GUI Element>);

By default, the AccessibilityId is mapped to the x:Name of the XAML control in our UWP app. For example, we have an “Enter” button as follows.

<Button x:Name="WelcomeScreenEnterButton" Content="Enter"... />

To access this button in the test code, we can write the following.

var welcomeScreenEnterButton = AppSession.FindElementByAccessibilityId("WelcomeScreenEnterButton");

Of course, if we want to have an AccessibilityId which is different from the Name of the XAML control (or the XAML control doesn’t have a Name), then we can specify the AccessibilityId in the XAML directly as follows.

<Button x:Name="WelcomeScreenEnterButton"
        AutomationProperties.AutomationId="EnterButton"
        Content="Enter"... />

Then to access this button, in the test code, we need to use EnterButton instead.

var welcomeScreenEnterButton = AppSession.FindElementByAccessibilityId("EnterButton");
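As a rough illustration of how such a lookup is used, the sketch below wraps it in a small helper that checks the button and clicks it. The class and method names are hypothetical, and the helper assumes it receives an already opened WinAppDriver session (Appium.WebDriver 4.x API).

```csharp
using Microsoft.VisualStudio.TestTools.UnitTesting;
using OpenQA.Selenium.Appium.Windows;

public static class WelcomeScreenSteps
{
    // Hypothetical helper: finds the "Enter" button and clicks it.
    // If the control has no AutomationProperties.AutomationId, pass its
    // x:Name ("WelcomeScreenEnterButton") instead.
    public static void ClickEnter(
        WindowsDriver<WindowsElement> session,
        string accessibilityId = "EnterButton")
    {
        // Throws a WebDriverException if no element has this id.
        var enterButton = session.FindElementByAccessibilityId(accessibilityId);

        // Fail with a clear message before clicking an unusable control.
        Assert.IsTrue(enterButton.Displayed, "The Enter button is not visible.");
        Assert.IsTrue(enterButton.Enabled, "The Enter button is disabled.");

        enterButton.Click();
    }
}
```

A test method could then call WelcomeScreenSteps.ClickEnter(AppSession) and assert on whatever the app shows next.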

Writing GUI Test Cases: AccessibilityName

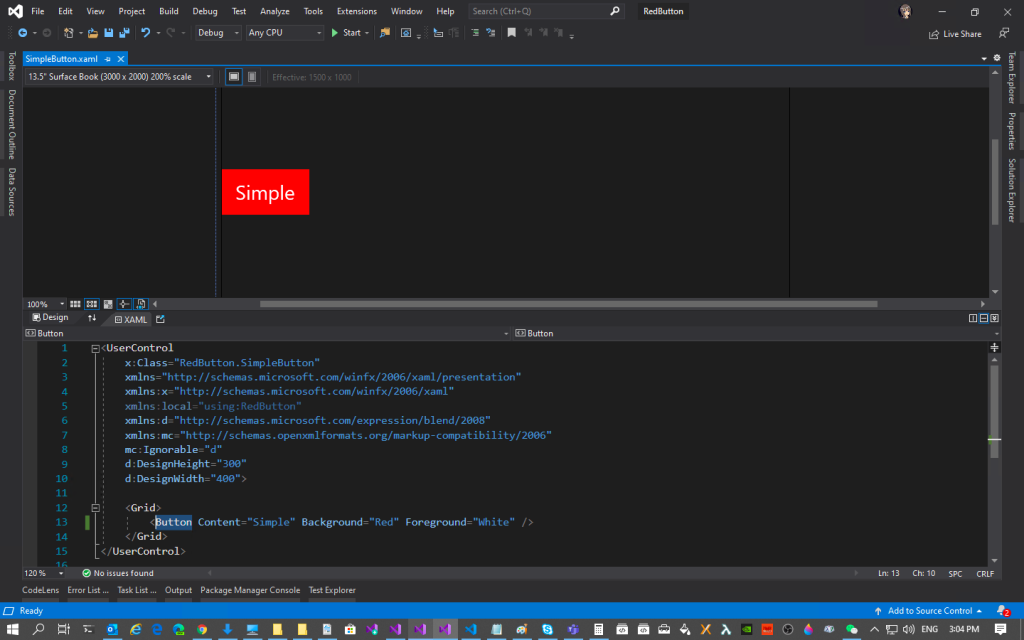

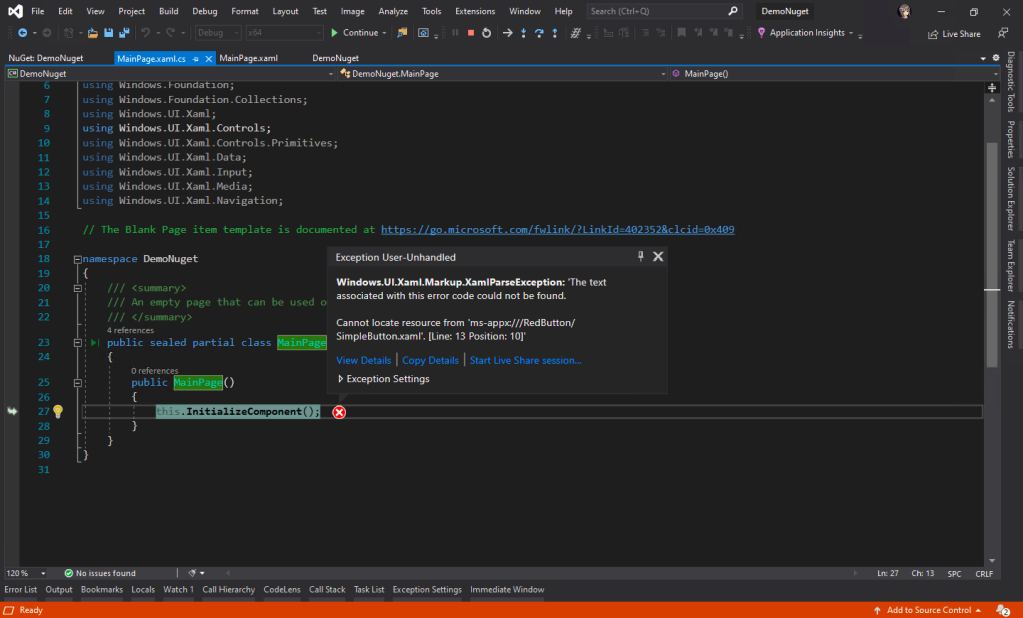

The method above works well with XAML controls that have simple text as their content. If the content property is not a string, for example if the XAML control is a Grid that contains many other XAML controls or is a custom user control, then Appium will fail to find the control by its AccessibilityId and throw the following exception: “OpenQA.Selenium.WebDriverException: An element could not be located on the page using the given search parameters”.

Thanks to GeorgiG from UltraPay, there is a solution to this problem. As GeorgiG pointed out in his post on Stack Overflow, the workaround is to set AutomationProperties.Name to a non-empty string value, such as “-”.

Hence, in my demo project, I have the following code for a Grid.

<Grid x:Name="WelcomeScreen" AutomationProperties.Name="-">
    ...
</Grid>

Then in the test cases, I can easily access the Grid with the following code.

var welcomeScreen = AppSession.FindElementByAccessibilityId("WelcomeScreen");

Writing GUI Test Cases: Inspect Tool

The methods listed above work fine for the XAML controls in our program. What about system prompts? For example, when the user clicks the “Open” button, an “Open” window pops up. How do we instruct Appium to interact with it?

Here, we will need a tool called Inspect.

We first need to access the Developer Command Prompt for Visual Studio. Then we type “Inspect” to launch the Inspect tool.

Next, we can mouse over the Open prompt to find out the AccessibilityId of the GUI element that we need to access. For example, the AccessibilityId of the area where we key in the file name is 1148, as shown in the screenshot below.

This explains why in the test cases, we have the following code to access it.

var openFileText = AppSession.FindElementByAccessibilityId("1148");

There is also a very good tutorial on how to deal with the Save prompt in the WinAppDriver sample on GitHub. The sample shows how to interact with the Save prompt in Notepad via Appium.
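Once the element is found, filling in the prompt is mostly a matter of sending keystrokes. The following is a minimal sketch under a few assumptions: the helper name is hypothetical, the id “1148” is the one Inspect reported on my machine, and pressing Enter in the file name box is assumed to confirm the prompt.

```csharp
using OpenQA.Selenium;
using OpenQA.Selenium.Appium.Windows;

public static class OpenPromptSteps
{
    // Hypothetical helper: types a file path into the Open prompt and confirms it.
    public static void TypeFileNameAndConfirm(
        WindowsDriver<WindowsElement> session,
        string filePath)
    {
        // "1148" is the AccessibilityId of the file name box as shown by Inspect.
        var openFileText = session.FindElementByAccessibilityId("1148");

        // Type the path, then press Enter to confirm the prompt.
        openFileText.SendKeys(filePath);
        openFileText.SendKeys(Keys.Enter);
    }
}
```

The file path passed in must exist on the machine that runs the tests, which matters later when the same test cases run on a build agent.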

Alright, that’s all for how to write GUI test cases for our UWP app with Appium. I have written some simple test cases in my demo project. Its source code is available on my GitHub repo, so please feel free to review it: https://github.com/goh-chunlin/Lunar.Paint.Uwp/tree/master/Lunar.Paint.Uwp.Tests.WinAppDriver.

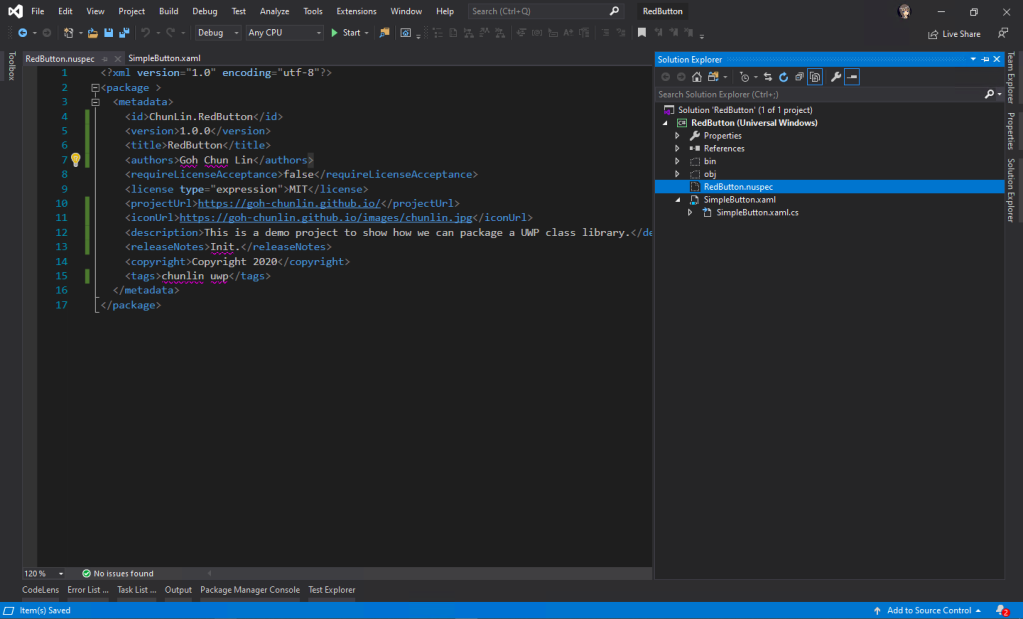

Azure DevOps Build Pipeline Setup

Now, we have run our tests locally. How do we make the tests part of our build pipeline on Azure DevOps?

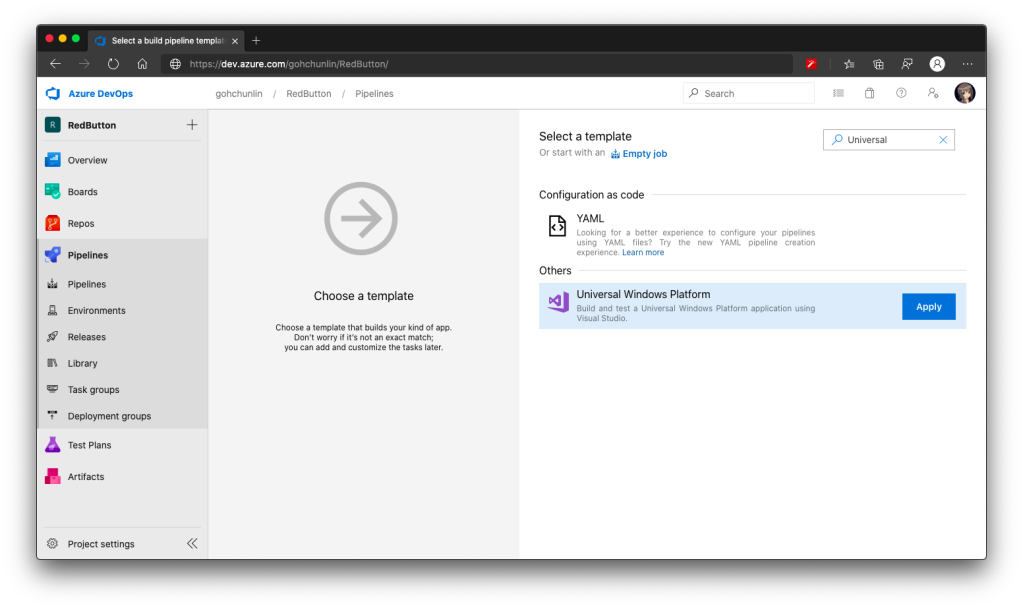

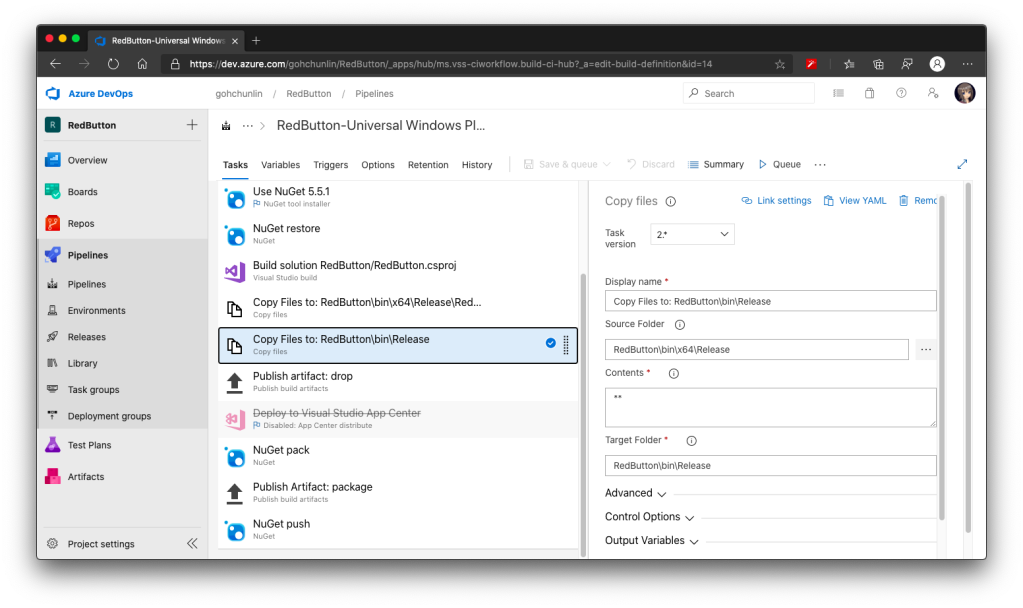

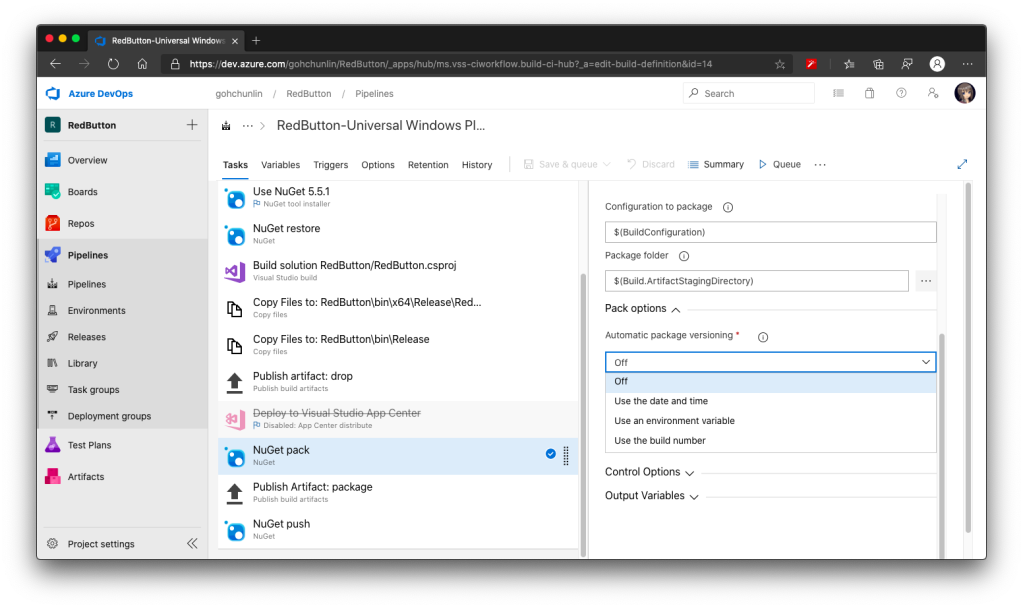

This turns out to be quite a complicated setup. Here, I set up the build pipeline based on the .NET Desktop pipeline template.

Next, we need to make sure the pipeline builds our solution with at least VS2019 on Windows 10. Otherwise, we will receive the error “Error CS0234: The type or namespace name ‘ApplicationModel’ does not exist in the namespace ‘Windows’ (are you missing an assembly reference?)” in the build pipeline.

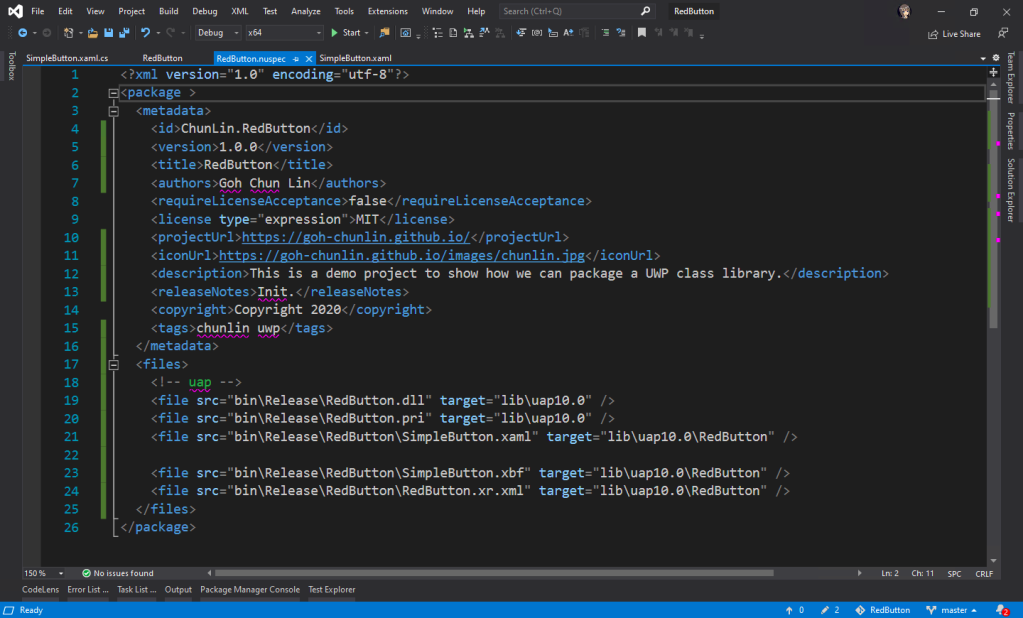

Now, if we queue our pipeline again, we will encounter a new error which states “Error APPX0104: Certificate file ‘xxxxxx.pfx’ not found.” This is because a UWP app needs to be packaged with a cert. However, by default, the cert is not committed to the Git repository. Hence, there is no such cert in the build pipeline.

To solve this problem, we need to first head to the Library of the Pipelines and add the following Variable Group.

Take note that the required cert is still not available on the pipeline at this point. Hence, we need to upload the cert as one of the Secure Files in the Library as well.

So, how do we move this cert from the Library to the build pipeline? We need the following task.

This is not enough because the task will only copy the cert to a temporary storage on the build agent. However, when the agent tries to build, it will still be searching for the cert in the project folder of our UWP app, i.e. Lunar.Paint.Uwp.

Hence, as shown in the screenshot above, we have two more PowerShell script tasks to do a little more work.

The first script is to add the cert to the certificate store in the build agent. The script can be found on Damien Aicheh’s excellent tutorial about installing cert on Azure DevOps pipeline.

The second script after it is to copy the cert from the temporary storage in the build agent to the project folder.

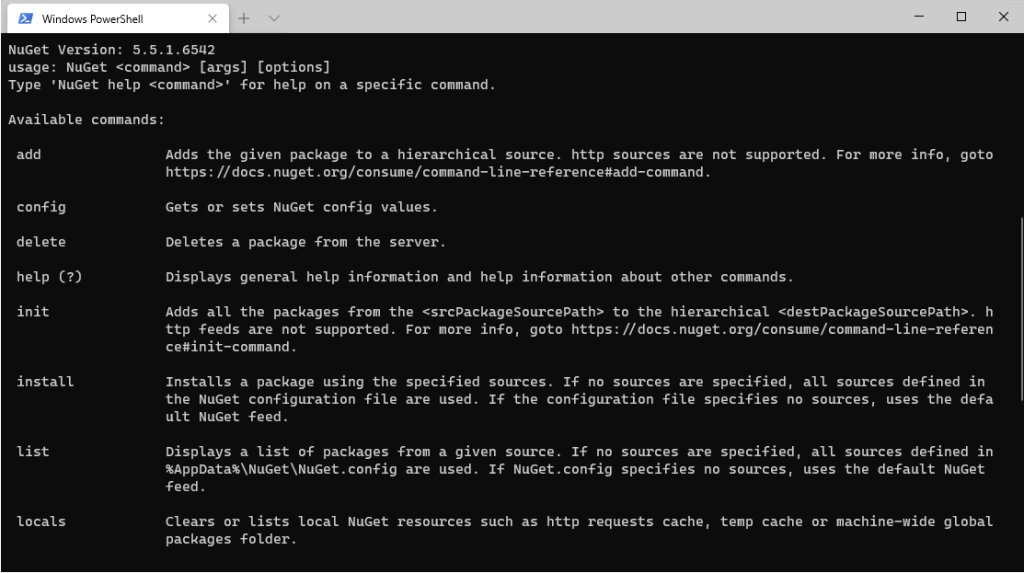

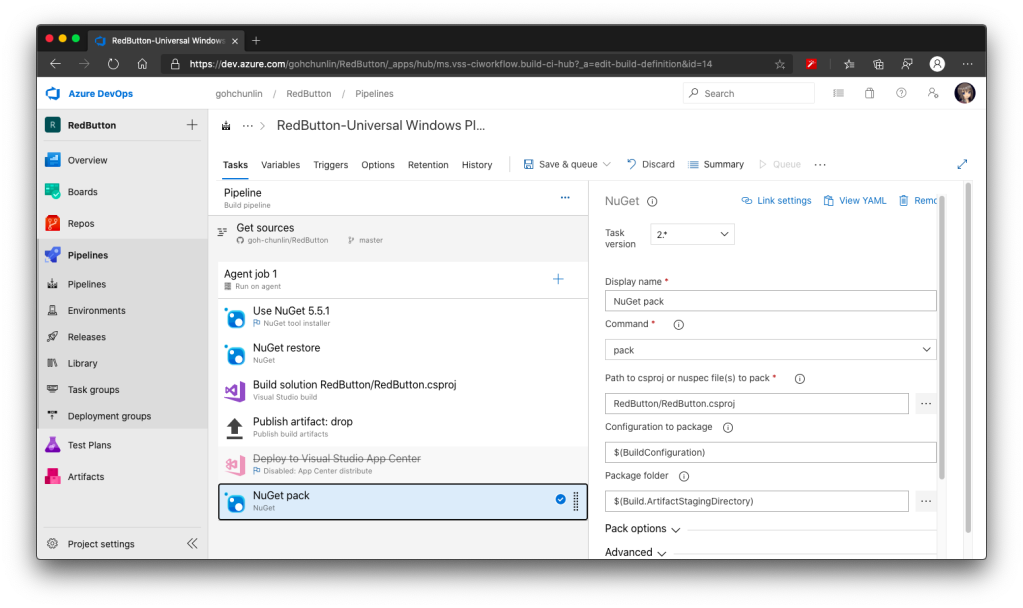

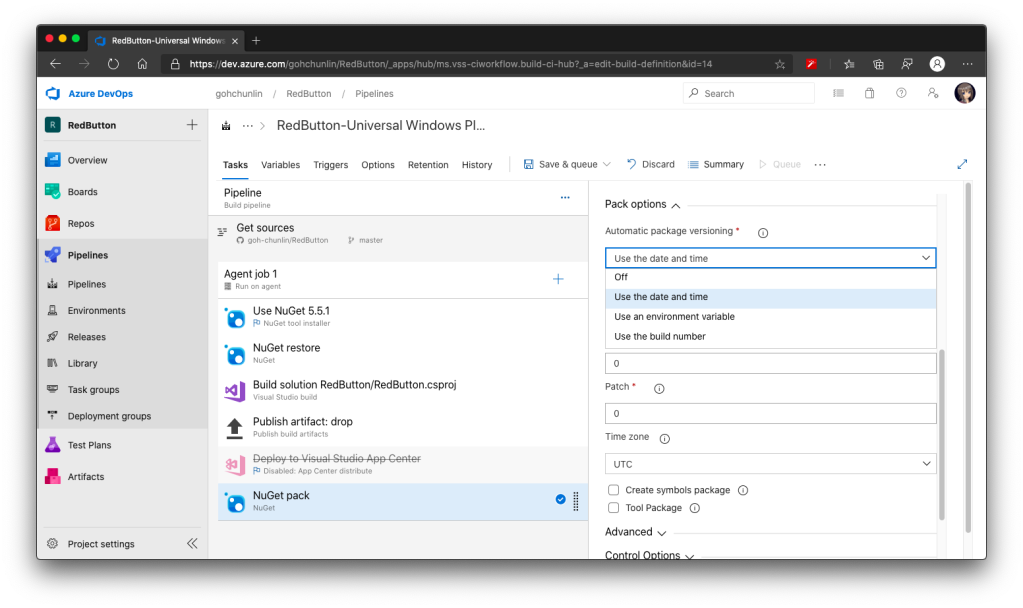

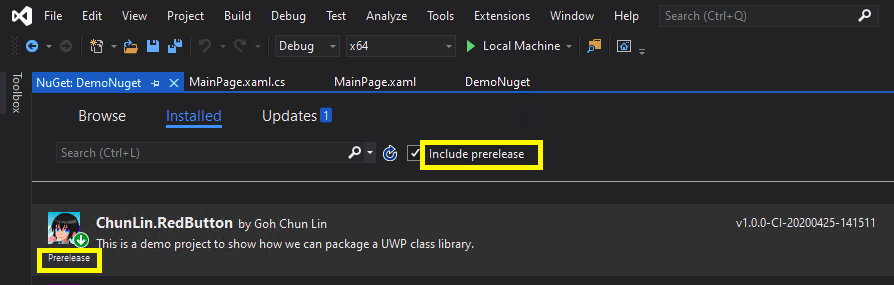

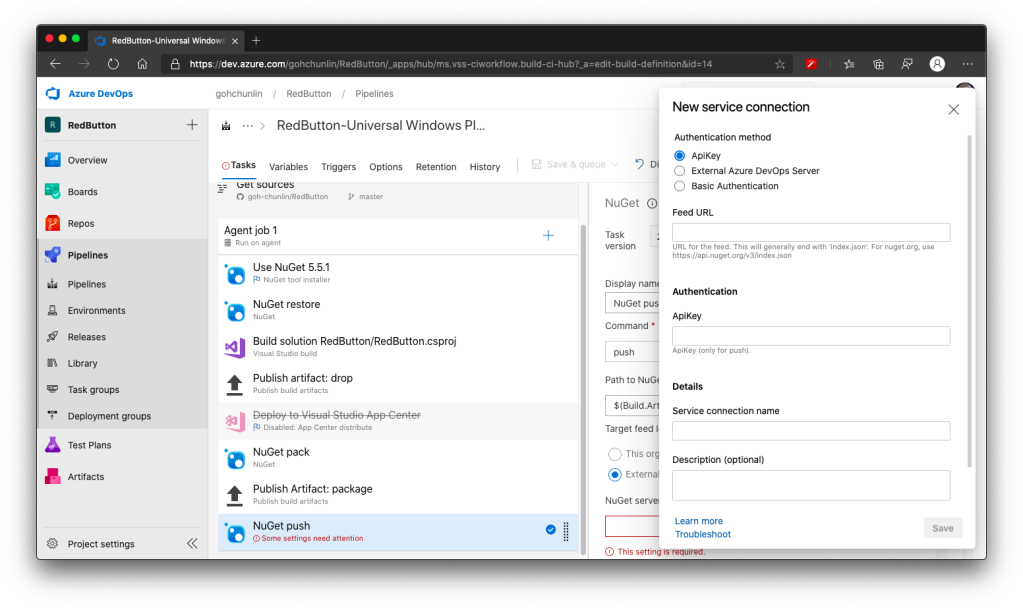

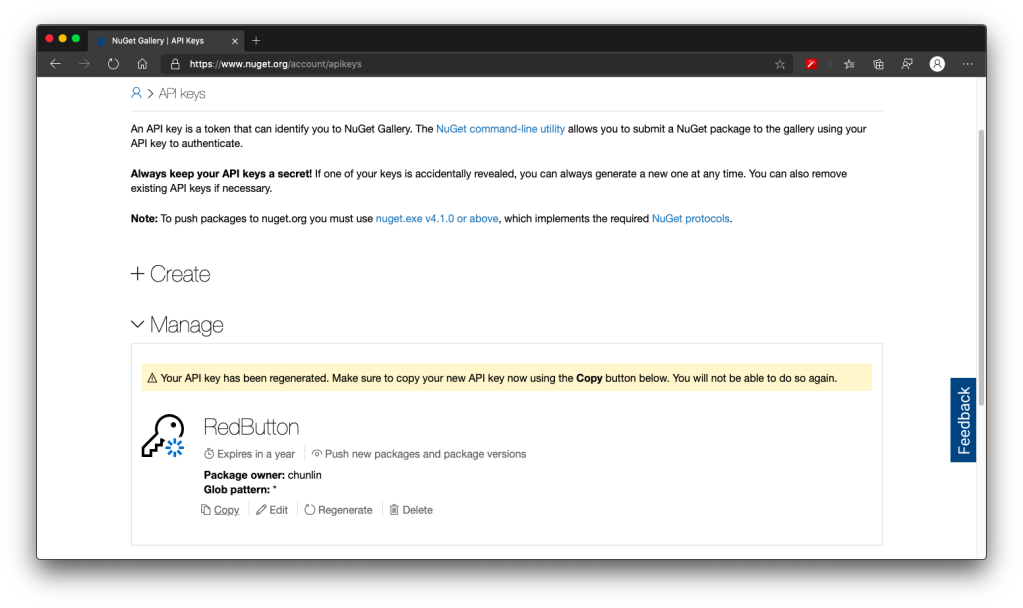

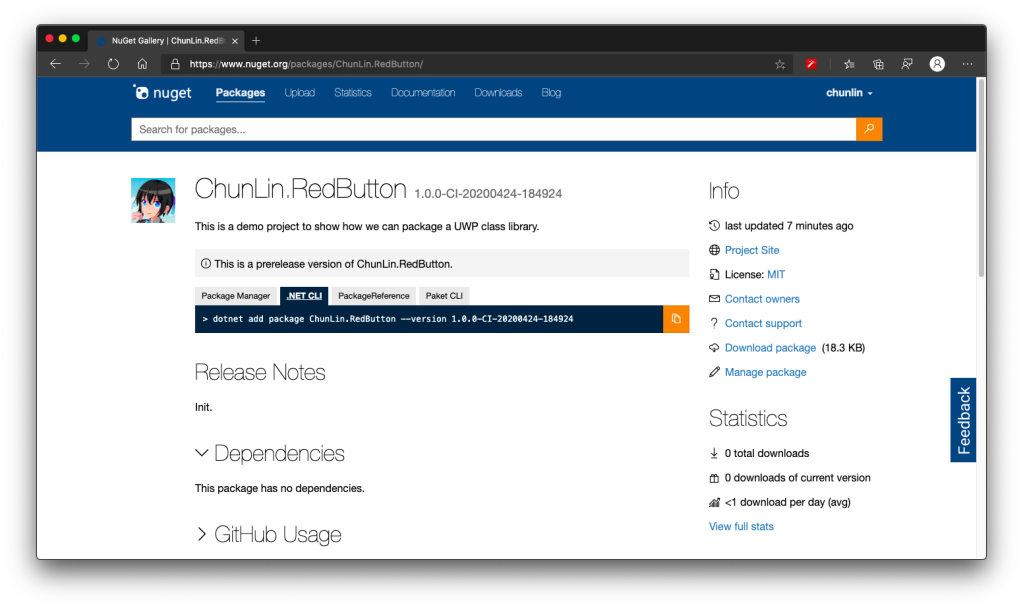

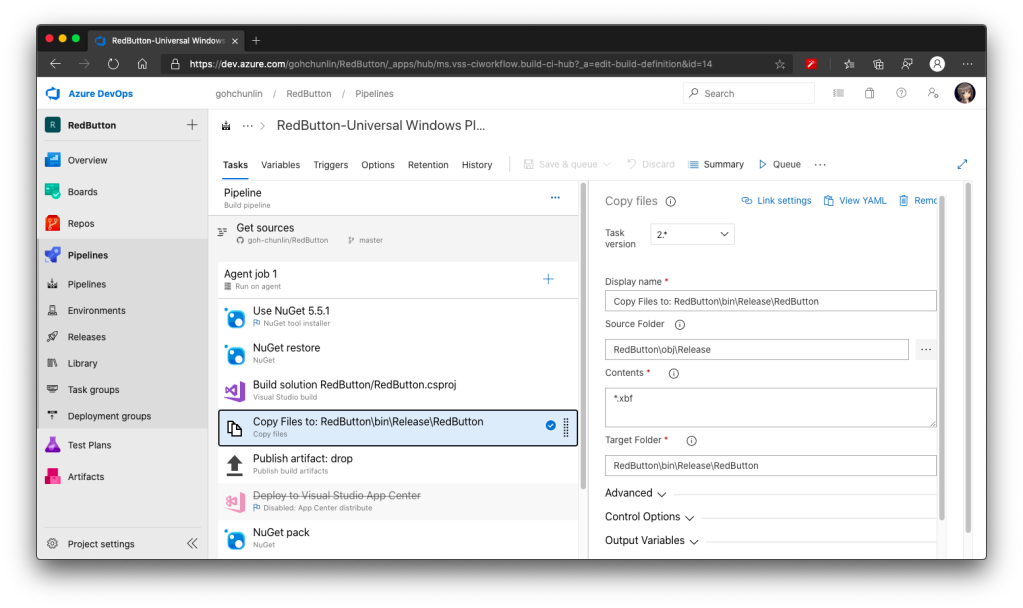

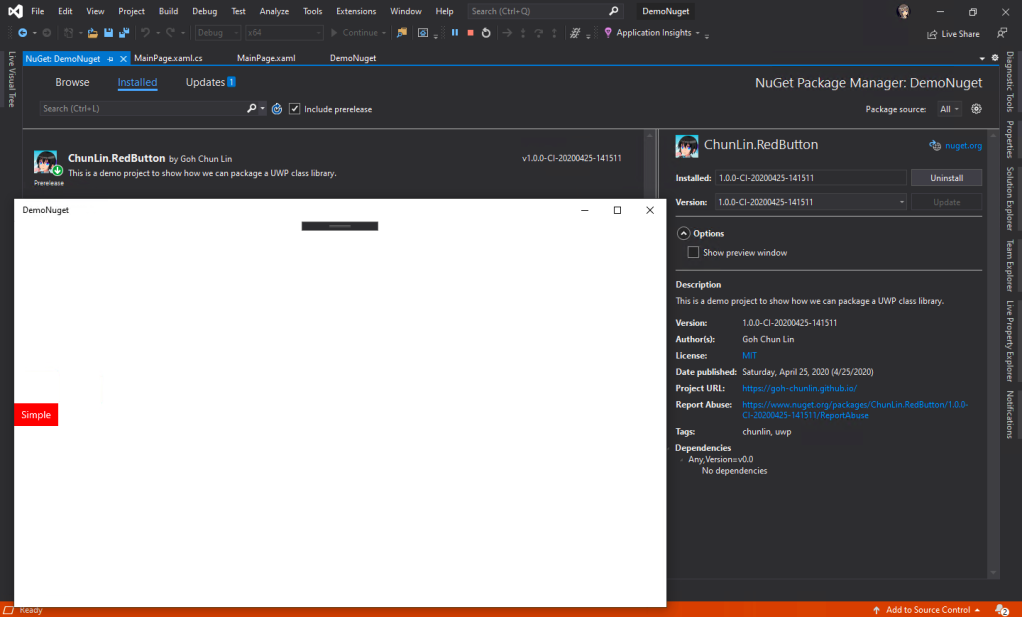

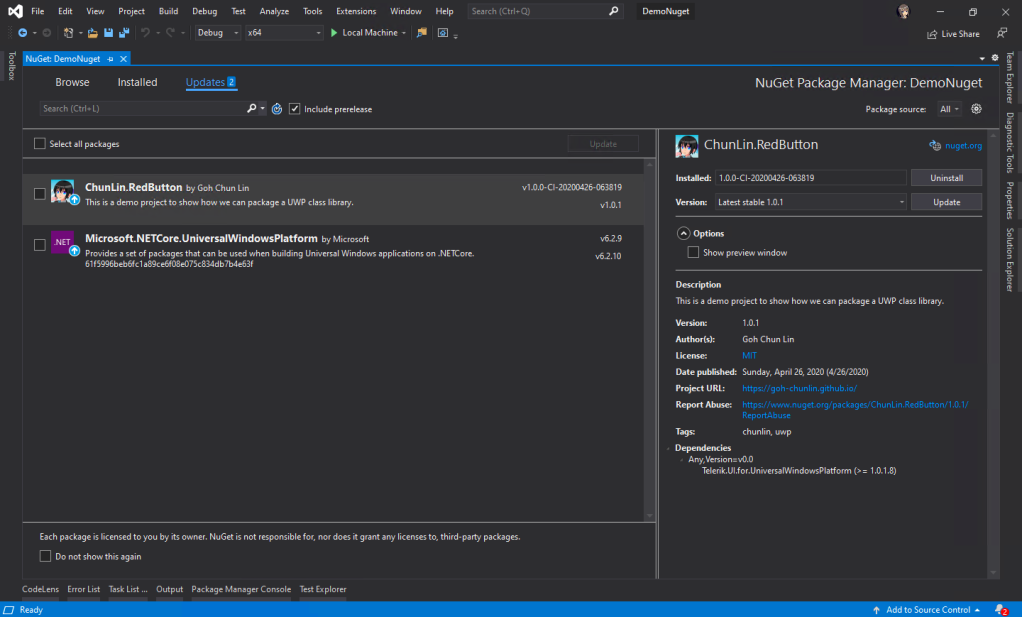

Oh ya, as you can see in the screenshot above, I am using NuGet 5.5.1. By default, the template uses NuGet 4.4.1. I was worried that it might cause problems, as it did when I was building a UWP NuGet library, so I changed it to 5.5.1, which is the latest stable version at the time of writing.

With these three new tasks, the build task should be executed correctly.

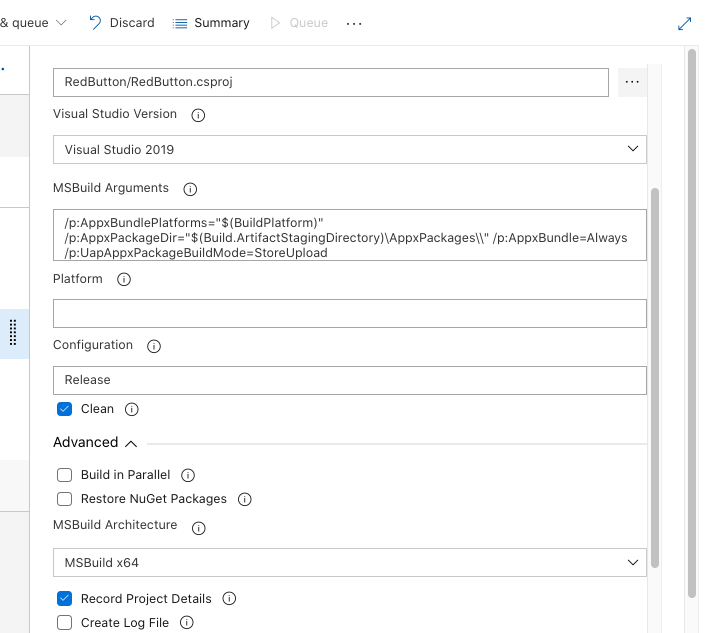

Here, my BuildPlatform is x64 and the BuildConfiguration is set to release. Also in the MSBuild Arguments, I specify the PackageCertificatePassword because otherwise it will throw an error in the build process saying “[error]C:\Program Files (x86)\Microsoft Visual Studio\2019\Enterprise\MSBuild\Microsoft\VisualStudio\v16.0\AppxPackage\Microsoft.AppXPackage.Targets(828,5): Error : Certificate could not be opened: Lunar.Paint.Uwp_TemporaryKey.pfx.”

Introduction of WinAppDriver to the Build Pipeline

Okay, so how do we run the test cases above on Azure DevOps?

Actually, it only requires the five tasks highlighted in the following screenshot.

Firstly, we need to start the WinAppDriver.

Secondly, we need to introduce two tasks after it to execute some PowerShell scripts. Before showing what they are doing, we need to recall one thing.

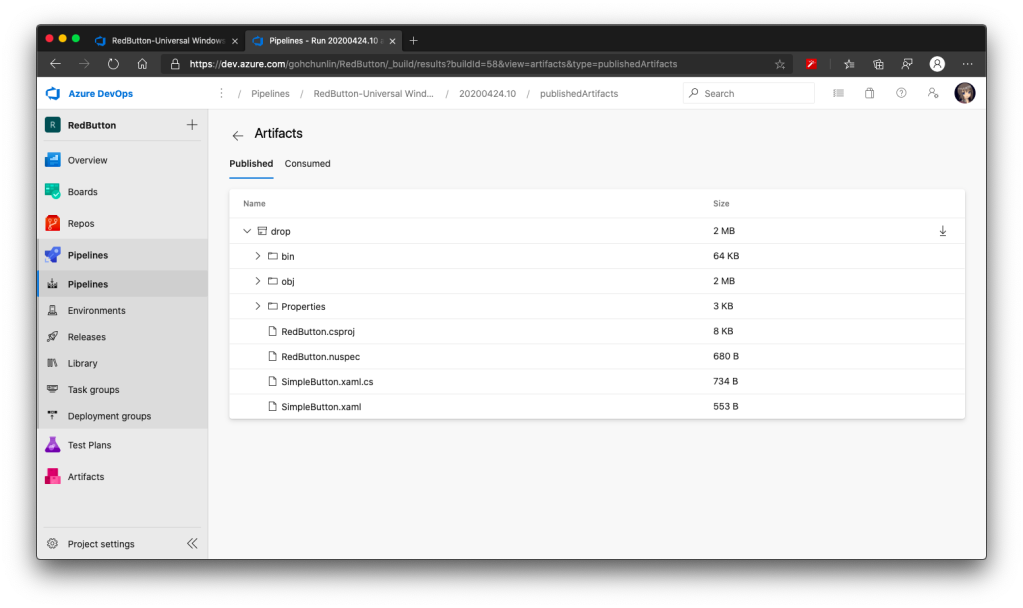

Remember the comment above the AppToLaunch variable in our test project? It says, “The app must also be installed (or launched for debugging) for WinAppDriver to be able to launch it.” Hence, we must install the UWP app using the files in AppxPackages generated by the Build task. This is what the two PowerShell tasks are doing.

The first PowerShell task imports the cert into the certificate store.

Import-Certificate -FilePath $(Build.ArtifactStagingDirectory)\AppxPackages\Lunar.Paint.Uwp_1.0.0.0_Test\Lunar.Paint.Uwp_1.0.0.0_x64.cer -CertStoreLocation 'Cert:\LocalMachine\Root' -Verbose

The second task, as shown in the following screenshot, installs the UWP app using Add-AppDevPackage.ps1. Take note that we need to use SilentContinue here; otherwise, the script will wait for user interaction and the pipeline will get stuck.

At the time of writing, Windows Template Studio automatically sets the target version of the UWP app to “Windows 10, version 2004 (10.0; Build 19041)”. However, the Azure DevOps pipeline has not yet been updated to Windows 10 v2004, so we should lower the Target Version to v1903 and the Minimum Version to v1809 for the project to build successfully on the Azure DevOps pipeline.

Thirdly, we need to run the tests with VsTest. This task exists in the default template and nothing needs to be changed here.

Fourthly, we need to stop the WinAppDriver.

That’s all. Now when the Build Pipeline is triggered, we can see the GUI test cases are being run as well.

In addition, Azure DevOps will also give us a nice test report for each of our builds, as shown in the following screenshot.

Conclusion: To Be Continued

Well, this is actually just the beginning of my testing journey. I will continue to learn more about software testing, especially the DevOps part, and share it with you all in the future.

Feel free to leave a comment here to share with other readers and me about your thoughts. Thank you!

Project Links

- GitHub Repo: https://github.com/goh-chunlin/Lunar.Paint.Uwp

- Azure DevOps: https://dev.azure.com/gohchunlin/Lunar.Paint.Uwp