Two years ago, when I visited the Microsoft Singapore office, their engineers Chun Siong and Say Bin gave me Satya Nadella’s book, Hit Refresh, as well as a Raspberry Pi 3 as a gift.

In fact, I also got another Raspberry Pi from my brother a few years ago, and it was running Windows 10 IoT. It is now in my office together with a monitor, keyboard, and mouse. Due to the COVID-19 lockdown, I am not allowed to go back to the office yet. Hence, I think it’s time to see how we can set up this new Raspberry Pi for headless SSH access from my MacBook.

The method I use here is the headless approach, which is suitable if you don’t have access to a GUI to set up a wireless LAN on the Raspberry Pi. With this approach, you don’t need to connect a monitor, external keyboard, or mouse to the Raspberry Pi at all.

Step 0: Things to Purchase

Besides the Raspberry Pi, we need to get a few other things ready before we can set up the device. I bought most of the items here from Challenger.

Item 1: Toshiba microSDHC UHS-I Card 32GB with SD Adapter

Raspberry Pi uses a microSD card as its hard drive. We can use either a Class 10 microSD card or a UHS (Ultra High Speed) card; here we are using a UHS Speed Class 1 card. We do not choose anything larger than 32GB because, according to the SD specifications, any SD card larger than 32GB is an SDXC card and has to be formatted with the exFAT filesystem, which is not yet supported by the Raspberry Pi bootloader. There are, of course, workarounds if your use case needs more SD space; please read more about them in the Raspberry Pi documentation. The microSD card we choose here is a 32GB SDHC card using the FAT32 file system, which is supported by the Raspberry Pi, so we are safe.
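The capacity rule above can be sketched as a small bash helper. This is only an illustration under stated assumptions: the function name sd_card_class is hypothetical, the size is given as a whole number of gigabytes, and the thresholds are the SD capacity classes (SDSC up to 2GB, SDHC up to 32GB, SDXC above that).

```bash
#!/usr/bin/env bash
# Hypothetical helper: map an SD card capacity (whole GB) to its SD
# capacity class and the filesystem that class is formatted with.
sd_card_class() {
  # Reject anything that is not a whole number of gigabytes.
  if [[ ! $1 =~ ^[0-9]+$ ]]; then
    echo "usage: sd_card_class <size in GB>" >&2
    return 1
  fi
  local size_gb=$((10#$1))   # force base 10 so "08" is not read as octal
  if (( size_gb == 0 )); then
    echo "size must be positive" >&2
    return 1
  elif (( size_gb <= 2 )); then
    echo "SDSC FAT12/FAT16"
  elif (( size_gb <= 32 )); then
    echo "SDHC FAT32"    # FAT32 can be read by the Raspberry Pi bootloader
  else
    echo "SDXC exFAT"    # exFAT cannot; reformat or use a workaround
  fi
}
```

For example, sd_card_class 32 prints “SDHC FAT32”, which matches the card used in this article.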

Item 2: USB Power Cable

All models up to and including the Raspberry Pi 3 require a micro USB power connector (the Raspberry Pi 4 uses a USB-C connector). If your Raspberry Pi doesn’t come with a power supply, you can get the official universal micro USB power supply, which is recommended for the Raspberry Pi 1, 2, and 3, from the official website.

Item 3: Cat6 Ethernet Cable (Optional)

It’s possible to set up the Raspberry Pi over WiFi because the Model 3 B comes with built-in WiFi. However, to play safe, I also prepared an Ethernet cable. Cat6 Ethernet cables are easy to find on the market now, and they are suitable for transferring large files and communicating within a local network.

Item 4: USB 2.0 Ethernet Adapter (Optional)

Again, this item is optional. You only need it if you plan to set up the Raspberry Pi through an Ethernet cable and your machine, like a MacBook, doesn’t have an Ethernet port.

Step 1: Flash Raspbian Image to SD Card

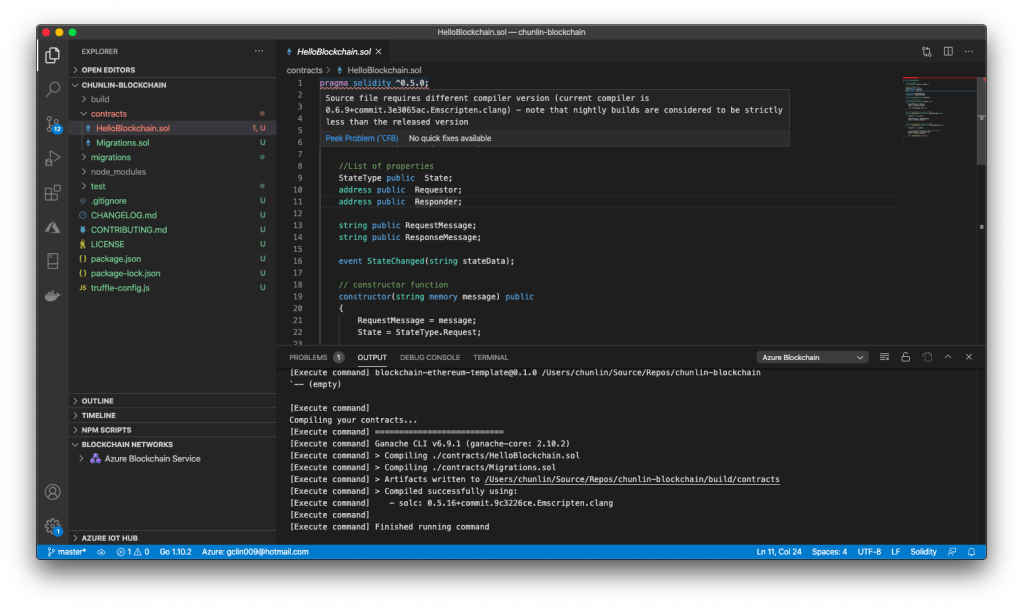

With the SD card ready, we can now proceed to burn the OS image to it. Raspberry Pi OS (previously called Raspbian) is the official operating system for all models of the Raspberry Pi. There are three types of Raspberry Pi OS we can choose from. Since I don’t need the desktop, I go ahead with Raspberry Pi OS Lite, which is also the smallest in size. Feel free to choose the type that best suits your use case.

Take note that this Raspberry Pi OS release is based on Debian Buster, the codename for Debian 10, which was released in July 2019.

After downloading the zip file to the MacBook, we need to burn the image to the SD card.

Since the microSD card we bought above comes with an SD adapter, we can easily slot it into the MacBook (which has an SD card slot). To burn the OS image to the SD card, we can use Etcher for macOS.

The first step in Etcher is to select the Raspberry Pi OS zip file we downloaded earlier. Then we select the microSD card as the target. Finally, we just need to click on the “Flash!” button.

After it’s done, we may need to pull the SD card out of our machine and plug it back in to see the image that we flashed. On a MacBook, it appears as a volume called “boot” in the Finder.

Step 2: Enabling Raspberry Pi SSH

To enable SSH, we need to place an empty file called “ssh” (without extension) in the root of the “boot” volume with the following command.

touch /Volumes/boot/ssh

This will later allow us to log in to the Raspberry Pi over SSH with the username pi and password raspberry.
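If you prefer a slightly more defensive version of the touch command above, here is a minimal bash sketch. It assumes the card is mounted at /Volumes/boot, as on macOS; the function name enable_ssh and its optional directory argument are hypothetical. It checks that the boot volume is mounted first and prints a clearer error if it is not.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create the empty "ssh" marker file in the root of
# the boot volume. The directory defaults to /Volumes/boot (macOS).
enable_ssh() {
  local boot_dir=${1:-/Volumes/boot}
  if [[ ! -d $boot_dir ]]; then
    echo "boot volume not found at $boot_dir; is the SD card mounted?" >&2
    return 1
  fi
  # An empty file with no extension is all that is needed.
  touch "$boot_dir/ssh"
}
```

With the card mounted in the usual place, running enable_ssh with no argument has the same effect as touch /Volumes/boot/ssh.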

Step 3: Adding WiFi Network Info

Similarly, we need to place a file called “wpa_supplicant.conf” in the root of the “boot” volume, with the following content.

network={
    ssid="NETWORK-NAME"
    psk="NETWORK-PASSWORD"
}

The WPA in the file name stands for Wi-Fi Protected Access, a security protocol and security certification program developed by the Wi-Fi Alliance® to secure wireless computer networks.

wpa_supplicant is a free software implementation of an IEEE 802.11i supplicant (a supplicant is the client-side component that requests authentication to join a secured network). Using wpa_supplicant makes configuring the WiFi connection on the Raspberry Pi straightforward.

Hence, to set up the WiFi connection on the Raspberry Pi, we just need to specify our WiFi network name (SSID) and its password in the wpa_supplicant configuration.

Step 3a: Raspberry Pi OS Buster Onwards

However, with the latest Raspberry Pi OS release based on Buster, we must also add a few more lines at the top of wpa_supplicant.conf, as shown below.

ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev
update_config=1

network={
    ssid="NETWORK-NAME"
    psk="NETWORK-PASSWORD"
}

The “ctrl_interface” line configures the control interface. When it’s specified, wpa_supplicant allows external programs to control it. For example, we can use wpa_cli, a WPA command line client, to interact with wpa_supplicant. Here, “/var/run/wpa_supplicant” is the recommended directory for the control sockets, and wpa_cli uses it by default when trying to connect to wpa_supplicant.

In the Troubleshooting section near the end of this article, I will show how we can use wpa_cli to scan and list network names.

In addition, access to the control interface can be restricted so that only members of a particular group can use the sockets in that directory. This way, it is possible to run wpa_supplicant as root (since it needs to change the network configuration and open raw sockets) and still allow GUI/CLI components to run as non-root users. Here we allow only members of the “netdev” group, who can manage network interfaces through NetworkManager and the Wireless Interface Connection Daemon (WICD), to use the sockets.

Finally, we have “update_config”. This option allows wpa_supplicant to overwrite the configuration file whenever the configuration is changed. This is required for wpa_cli to be able to save configuration changes permanently, so we set it to 1.
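Since only members of the netdev group may use the control sockets, it can help to check group membership on the Raspberry Pi before reaching for wpa_cli. Here is a minimal bash sketch; the helper name user_in_group is hypothetical, and only the standard id, tr, and grep tools are assumed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given user belongs to the given group.
# `id -nG USER` prints all group names of USER separated by spaces.
user_in_group() {
  local user=$1 group=$2
  # One group per line, then look for an exact, fixed-string match.
  id -nG "$user" 2>/dev/null | tr ' ' '\n' | grep -qxF "$group"
}
```

On the Raspberry Pi, something like user_in_group pi netdev && echo "pi can use wpa_cli" would confirm whether the pi user is allowed to talk to wpa_supplicant.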

Step 3b: Raspberry Pi 3 B+ and 4 B

According to the Raspberry Pi documentation, if you are using a Raspberry Pi 3 B+ or Raspberry Pi 4 B, you also need to set the country code so that 5GHz networking can choose the correct frequency bands. With the country code, the file looks like this:

country=SG
ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev
update_config=1

network={
    ssid="NETWORK-NAME"
    psk="NETWORK-PASSWORD"
}

The first line sets the country in which the device is used. Its value is an ISO 3166-1 alpha-2 country code. Here I put SG because I am now in Singapore.
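Putting Steps 3, 3a, and 3b together, the whole file can be generated with a short bash sketch. Assumptions: the function name write_wpa_conf is hypothetical, the SSID and password contain no double quotes (they are written into the file verbatim), and the card is mounted at the directory passed in, such as /Volumes/boot. It also rejects a country value that is not two uppercase letters and a passphrase outside the 8 to 63 characters that WPA-PSK allows.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write wpa_supplicant.conf for Raspberry Pi OS (Buster).
# Arguments: boot directory, ISO 3166-1 alpha-2 country code, SSID, passphrase.
write_wpa_conf() {
  local boot_dir=$1 country=$2 ssid=$3 psk=$4
  if [[ ! -d $boot_dir ]]; then
    echo "boot volume not found at $boot_dir" >&2
    return 1
  fi
  # The country code must be two uppercase letters, e.g. SG.
  if [[ ! $country =~ ^[A-Z]{2}$ ]]; then
    echo "invalid country code: $country" >&2
    return 1
  fi
  # A WPA-PSK passphrase must be 8 to 63 characters long.
  if (( ${#psk} < 8 || ${#psk} > 63 )); then
    echo "passphrase must be 8 to 63 characters" >&2
    return 1
  fi
  {
    printf 'country=%s\n' "$country"
    printf '%s\n' 'ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=netdev'
    printf '%s\n' 'update_config=1' ''
    printf '%s\n' 'network={'
    printf '    ssid="%s"\n' "$ssid"
    printf '    psk="%s"\n' "$psk"
    printf '%s\n' '}'
  } > "$boot_dir/wpa_supplicant.conf"
}
```

For example, write_wpa_conf /Volumes/boot SG "NETWORK-NAME" "NETWORK-PASSWORD" produces the same file as the one shown above.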

Take note that the Raspberry Pi 3 B doesn’t support 5GHz WiFi networks. It can only work with 2.4GHz WiFi networks.

Where I am staying, we have a dual-band router that broadcasts on two bands, 2.4GHz and 5GHz. Since my Raspberry Pi 3 B doesn’t support 5GHz, it can only connect to the 2.4GHz WiFi network.

By the way, 5GHz WiFi or 5G WiFi has nothing to do with the 5G mobile network. =)

Step 4: Boot the Raspberry Pi

Now we can eject the SD card from our MacBook and plug it into the Raspberry Pi. After that, we plug the USB power cable into the Raspberry Pi to power it on.

By default, the hostname of a new Raspberry Pi is raspberrypi. Hence, before we proceed, we should remove any old host keys belonging to raspberrypi.local from our known_hosts file with the following command, so that we have a clean start.

ssh-keygen -R raspberrypi.local

Don’t worry if you get a host not found error for this command.

Of course, if you know the Raspberry Pi’s IP address, you can use it instead of “raspberrypi.local”.

Next, let’s log in to the Raspberry Pi with the following command, using the username and password from Step 2 above.

ssh pi@raspberrypi.local

Step 5: Access Raspberry Pi Successfully

Now we should be able to access the Raspberry Pi (if not, please refer to the Troubleshooting section near the end of this article).

Next, we need to do some additional configuration of our Raspberry Pi using the following command.

sudo raspi-config

Firstly, we need to change the password by selecting the “1 Change User Password” item, as shown in the screenshot above. For security reasons, don’t keep the default password.

Secondly, we should change the hostname of the device, which is under the “2 Network Options” item. Don’t keep the default hostname, or we will end up with many Raspberry Pis sharing the same “raspberrypi” hostname.

Thirdly, under “4 Localisation Options”, please make sure the Locale (a UTF-8 locale should be chosen by default), Time Zone, and WLAN Country (which should be the same as the one we set in Step 3b) are correct.

Finally, if you are on a new image, it is recommended to expand the file system under the “7 Advanced Options” item.
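Before typing a new hostname into raspi-config, it may be worth checking that it is a valid hostname label. Here is a minimal bash sketch, assuming the usual RFC 1123 rules (1 to 63 letters, digits, or hyphens, not starting or ending with a hyphen); the function name valid_hostname is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the argument is a valid single hostname
# label: 1-63 characters, ASCII letters, digits and hyphens only, and no
# hyphen at the start or end.
valid_hostname() {
  local name=$1
  local re='^[A-Za-z0-9]([A-Za-z0-9-]*[A-Za-z0-9])?$'
  (( ${#name} >= 1 && ${#name} <= 63 )) || return 1
  [[ $name =~ $re ]]
}
```

For example, valid_hostname pi-office-01 succeeds, while valid_hostname pi_office fails because underscores are not allowed.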

Now we can proceed to reboot our Raspberry Pi with the following command.

sudo reboot

Step 6: Get Updates

After the Raspberry Pi has successfully rebooted, please log in to the device again to install updates with the following commands.

sudo apt-get update -y
sudo apt-get upgrade -y

After this, if you would like to switch off the Raspberry Pi, please shut it down properly with the following command; otherwise, the SD card may get corrupted.

sudo shutdown -h now

Troubleshooting WiFi Connection

When I first tried connecting to the Raspberry Pi over WiFi, the connection always failed. That’s why, in the end, I connected the Raspberry Pi to my laptop through an Ethernet cable first so that I could do some troubleshooting.

Connecting with Ethernet Cable

To connect to the Raspberry Pi via an Ethernet cable, we just need to make sure that the Ethernet connection status in the Network settings of our MacBook is “Connected”, as shown in the following screenshot.

We also have to make sure that “Using DHCP” is selected for the “Configure IPv4” option. Finally, we need to check that the “Location” at the top of the dialog box has “Automatic” selected for this Ethernet network configuration.

That’s all it takes to set up the Ethernet connection between the Raspberry Pi and our MacBook.

Troubleshooting the WiFi Connection

If you also have problems with the WiFi connection even after rebooting the Raspberry Pi, you can try the following commands once you can access the Raspberry Pi through the Ethernet cable.

- To get the network interfaces:

ip link show

- To list network names (SSID):

iwlist wlan0 scan | grep ESSID

- To edit or review the WiFi settings on the Raspberry Pi:

sudo nano /etc/wpa_supplicant/wpa_supplicant.conf

- To show wireless devices and their configuration:

iw list

In the output of the iwlist wlan0 frequency command, we can see that all the channels available to the WiFi interface have frequencies in the 2.4GHz range, as shown in the following screenshot. Hence, we know that the Raspberry Pi 3 Model B can only use 2.4GHz.

This is crucial to take note of, because I had mistakenly put the SSID of a 5GHz WiFi network in the wpa_supplicant configuration file, and the Raspberry Pi could not connect to the WiFi network at all.
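To catch the same mistake quickly, the iwlist output can be reduced to one band per channel. This is a sketch that assumes channel lines of the form “Channel 06 : 2.437 GHz”; the function name channel_bands is hypothetical, and the band boundaries (below 3GHz is 2.4GHz, below 5.925GHz is 5GHz, otherwise 6GHz) are my own simplification.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `iwlist wlan0 frequency` output on stdin and
# print "<channel> <frequency in GHz> <band>" for each listed channel.
# Other lines, such as the header and "Current Frequency", are ignored.
channel_bands() {
  awk '$1 == "Channel" && $3 == ":" && $5 == "GHz" {
    ghz = $4 + 0
    band = (ghz < 3) ? "2.4GHz" : ((ghz < 5.925) ? "5GHz" : "6GHz")
    print $2, $4, band
  }'
}
```

On the Raspberry Pi, it would be used as iwlist wlan0 frequency | channel_bands; on a Raspberry Pi 3 Model B, every line should report 2.4GHz.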

We can also use wpa_cli to scan and list the network names, as shown in the following screenshot.

In the scan results above, we can see that the Raspberry Pi picks up 12 networks, along with the frequency each one broadcasts on (again, all in the 2.4GHz range), the signal strength, the security type, and the network name (SSID).

You may ask why I need to specify the interface in the wpa_cli command. This is because the interface wpa_cli uses by default is not the Raspberry Pi’s actual WiFi interface. As the output of “ip link show” shows, we are using wlan0 for the WiFi connection. However, if we don’t specify the interface in wpa_cli, we will get the following issue.

This is the problem discussed in the Arch Linux forum and solved in February 2018.
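The wpa_cli steps can be sketched in bash as follows, passing the interface explicitly with -i wlan0 as explained above. This assumes the tab-separated scan_results format (bssid, frequency in MHz, signal level, flags, SSID); the helper name list_networks and the five-second wait between scan and scan_results are hypothetical choices of mine.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `wpa_cli -i wlan0 scan_results` output on stdin
# and print SSID, frequency (MHz), signal level and band for each network.
# Lines that are not tab-separated results (such as the header) are skipped.
list_networks() {
  awk -F '\t' '$2 ~ /^[0-9]+$/ {
    band = ($2 < 3000) ? "2.4GHz" : (($2 < 5925) ? "5GHz" : "6GHz")
    printf "%s\t%s\t%s\t%s\n", $5, $2, $3, band
  }'
}

# Usage on the Raspberry Pi:
#   wpa_cli -i wlan0 scan            # ask wpa_supplicant to start a scan
#   sleep 5                          # give the scan time to finish
#   wpa_cli -i wlan0 scan_results | list_networks
```

Printing the band next to each SSID makes it easy to confirm that the network named in wpa_supplicant.conf is one the Raspberry Pi can actually see on 2.4GHz.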

Conclusion

That’s all it takes to get my Raspberry Pi up and running. Please let me know in the comment section if you have any questions about the steps listed above.

Here, I’d like to thank Mitch Allen for writing a great tutorial on how to set up a Raspberry Pi over WiFi. His tutorial also includes instructions for Windows users, so if you are on Windows, please refer to his tutorial as well.

References

- Headless Raspberry Pi 3 B+ SSH WiFi Setup (Mac + Windows)

- Raspberry Pi Connect to Wifi Using wpa_supplicant

- Example wpa_supplicant Configuration File

- How To : Use wpa_cli To Connect To A Wireless Network

- GitHub Issues: Network Manager vs wpa_supplicant

- Connect to the Pi Robot from a Mac

- Does Pi3 Wi-Fi Support 5 GHz and Does it Need an Extra Antenna?