It has been almost half a year since the first COVID-19 case was confirmed in Singapore, the country where I now work. Two days after that first case was confirmed, eight travellers entering Malaysia, my home country, from Singapore were confirmed to be infected as well.

Since then, we have been asked to work from home, as travel restrictions apply in both countries. While the situation is not getting better, it’s quite disappointing to know that there are still people who believe that COVID-19 is a hoax.

Fortunately, there are still a lot more people working hard in this tough period. Earlier on, my friend who is doing research in Colorado told me that she’s working hard with a group of scientists to educate the public about the virus.

In addition, to aid researchers and data scientists in their effort to combat the pandemic, Google BigQuery decided to host a repository of public datasets from JHU CSSE (Johns Hopkins Center for Systems Science and Engineering). With public datasets in general, we can query up to 1TB for free each month, and queries over the COVID-19 datasets are free altogether (until 15th of September 2020).

In my previous article, I talked about how Google BigQuery could work together with Google Data Studio to render beautiful reports without any coding. In this article, I will show how we can write a simple web client in Golang to fetch data from BigQuery via its API.

BigQuery Public Datasets Programme

Google hosts a huge number of datasets that we can access and integrate into our applications, and Google pays for the storage. With the public datasets, we only need to pay for the queries we perform on the data.

In order to access the public datasets, we first need to enable them through the Google BigQuery documentation (I find this quite funny because Google has hidden the enabling link so well). In the “Using the Web UI” page, as shown in the screenshot below, we can find a URL which lets us open the public datasets project manually in the browser (remember to update the &page=project to your project in GCP).

There are also detailed steps written in the documentation of Data Analytics Products (Yes, the same info is spread all over different places).

The COVID-19 Dataset

Once we have done the steps above, we shall see the public datasets, including the COVID-19 datasets, available in our Google BigQuery. The dataset that I will be using in this article is the covid19_jhu_csse, a daily updated data repository for COVID-19 from JHU CSSE.

There are four tables in the dataset. The first three record the number of confirmed cases, the number of reported deaths, and the number of recovered cases, respectively, in each country or region.

The interesting thing about the first three tables is that they record the numbers of each day in a separate column. Hence, every day, one new column is added to each of the three tables. I’m not sure why they do so, but this actually requires us to write our own client in order to get the data, because Google Data Studio cannot work well with dynamic column names.

Luckily, there is a fourth table called summary which actually has just one date column and every record for each day is one row instead of one column. This is a more SQL-friendly table and can be integrated with Google Data Studio easily.

In this article, I will use the 1st, 2nd, and 4th tables to demonstrate how we can programmatically get the data through the BigQuery API.

BigQuery Client Library for Golang

There are many client libraries of Google BigQuery for different types of programming languages, including C#. In this article, we choose to use Golang.

Before we proceed, we need to make sure that we have already enabled the BigQuery API for our project in GCP. From the GCP Cloud Console, we will get the credential file which allows us to connect to Google BigQuery, so we must keep this credential file in a safe and secret place.

Now we can proceed to build our Golang client.

Firstly, we need to install the client library using the go get command.

go get -u cloud.google.com/go/bigquery

Secondly, we need to initialise a Google BigQuery client.

    ctx := context.Background()

    client, err := bigquery.NewClient(ctx, projectID)
    if err != nil {
        log.Fatalf("bigquery.NewClient: %v", err)
    }
    defer client.Close()

Querying the Tables

Next, we can start to query the data in BigQuery.

    rows, err := queryData1(ctx, client)
    if err != nil {
        log.Fatal(err)
    }

    queryResult := processQueryResult1(rows)

If we have other different queries for different tables or even datasets, we can continue to query in the same way as above.

So what does queryData1 look like? It is basically as simple as follows.

    func queryData1(ctx context.Context, client *bigquery.Client) (*bigquery.RowIterator, error) {
        query := client.Query("<SQL here>")
        return query.Read(ctx)
    }

For example, if we are fetching the date as well as the numbers of confirmed cases and deaths, we will be using the following SQL.

    `SELECT
      CAST(date as STRING) as date,
      IFNULL(confirmed, 0) as confirmed_cases,
      IFNULL(deaths, 0) as deaths
    FROM ` + "`bigquery-public-data.covid19_jhu_csse.summary`" + ` ORDER BY date;`

There are a few things to take note of here. The first is the use of CAST.

It casts the date field to a string. Otherwise, we may encounter an error such as “schema field date of type DATE is not assignable to struct field date of type time.Time” when we load the rows returned from BigQuery into Golang structs later. The reason why I choose CAST is that casting from a date type to a string is independent of time zone and the result is always of the form YYYY-MM-DD.

In addition, we use IFNULL to make sure that the values of confirmed_cases and deaths are never NULL, so that they can always be loaded into integer fields. In the original tables, the numbers can be null.

Now, we just need a struct that RowIterator.Next() can load each row into. The struct corresponding to the SQL above is as follows.

    type QueryResultDataRow struct {
        Date           string `bigquery:"date"`
        ConfirmedCases int64  `bigquery:"confirmed_cases"`
        Deaths         int64  `bigquery:"deaths"`
    }

To iterate, we can use the code below.

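    // iterator.Done comes from the google.golang.org/api/iterator package.
    func processQueryResult1(iter *bigquery.RowIterator) []QueryResultDataRow {
        var result []QueryResultDataRow
        for {
            var row QueryResultDataRow
            err := iter.Next(&row)
            if err == iterator.Done {
                break // no more rows
            }
            if err != nil {
                // Stop on any other error; calling Next again after a
                // failed fetch may keep returning the same error forever.
                log.Print(err)
                break
            }
            result = append(result, row)
        }
        return result
    }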

Here, I’d like to share a mistake I made when I first wrote the code above. I forgot that I should end the for loop when the iterator is done, i.e. when err == iterator.Done, so the return statement was never reached. Please take note of this when you are writing this type of iteration.

Challenge: The Tables Having Dates as Columns

If you would like to challenge yourself to retrieve the data from the tables that have dates as their columns, it is possible too, just with a few hurdles.

The first challenge is that we are not sure when the dataset will be updated, so we can never be sure of the name of the last column. Since the dataset is updated daily, to be safe, we can use the date of two days ago as the last column in our query.

The second challenge is the format of the date. We cannot use the Golang magical reference date (Mon Jan 2 15:04:05 MST 2006) to format the date because of the underscores found in the column name: in a layout string, an underscore directly before the 2 asks for a space-padded day rather than a literal underscore. There is a very interesting discussion about the origin of the magical reference date on Stack Overflow, in case you are interested, but it’s not important here. Hence, we will use the following code to format the date instead.

latestDateInQuery := fmt.Sprintf("_%v_%v_%v", int(d.Month()), d.Day(), d.Year() - 2000)

So the following code will help us to get the count from the second latest, if not the latest, column.

    latestDate := time.Now().AddDate(0, 0, -2)
    latestDateInQuery := fmt.Sprintf("_%v_%v_%v", int(latestDate.Month()), latestDate.Day(), latestDate.Year()-2000)

Once we get the column name, we can then use it in the following query.

    `SELECT
      IFNULL(province_state, "") AS place,
      country_region,
      latitude,
      longitude,
      (` + latestDateInQuery + `) AS count
    FROM ` + "`bigquery-public-data.covid19_jhu_csse.confirmed_cases`;"

Visualising the Data

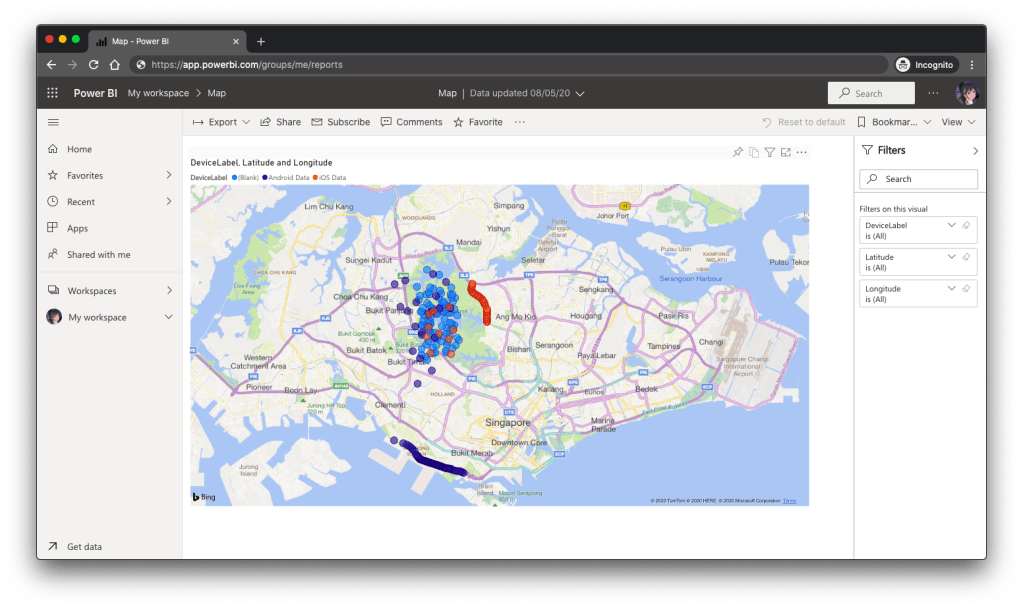

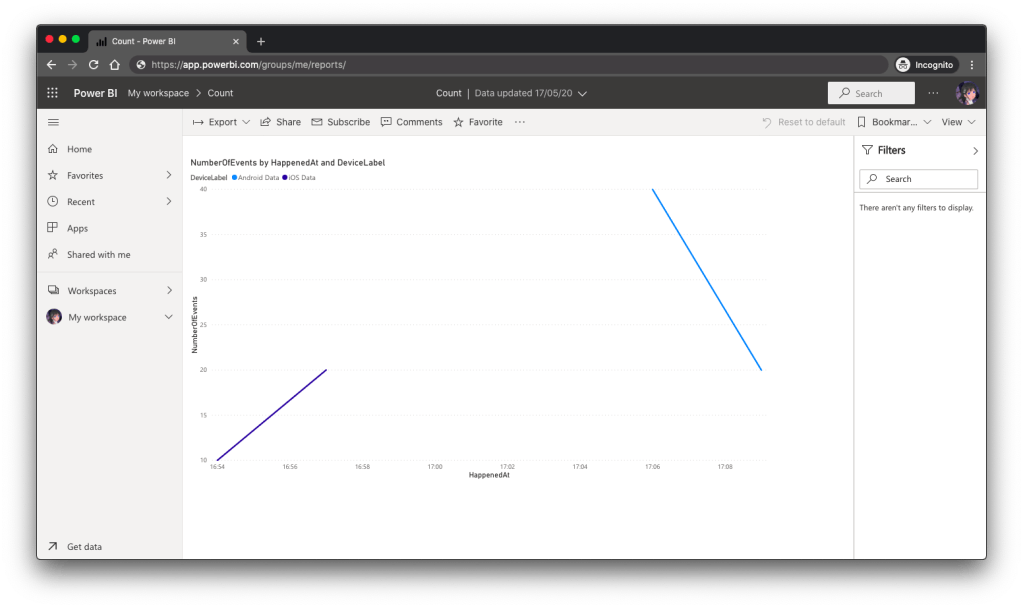

With the queries above, we can then easily generate results with Google Charts. Here, I use the Line Chart and the GeoChart.

There is an interesting behaviour in GeoChart: by default, when we use latitude and longitude instead of an address to identify the places, the text shown in the map tooltip will be the latitude and longitude, which is not user friendly. However, we can actually change the text by putting a description column right after the longitude column, as discussed over here on Google Groups. It’s interesting because the support for such a column is said to be undocumented, so we’re not sure when this will stop working.
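
On the Golang side, this means the rows sent to the page need to follow the order latitude, longitude, description, value. Below is a rough sketch of how such rows could be prepared as JSON. The PlaceCount struct, the header labels, and the sample values are all made up for illustration, and since the description column is undocumented, the layout itself is an assumption too.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// PlaceCount is a hypothetical struct mirroring the columns selected
// from the confirmed_cases table.
type PlaceCount struct {
	Place         string
	CountryRegion string
	Latitude      float64
	Longitude     float64
	Count         int64
}

// geoChartRows lays the data out as latitude, longitude, description,
// value, with a header row first.
func geoChartRows(places []PlaceCount) [][]interface{} {
	rows := [][]interface{}{{"Latitude", "Longitude", "Description", "Confirmed"}}
	for _, p := range places {
		label := p.CountryRegion
		if p.Place != "" {
			label = p.Place + ", " + p.CountryRegion
		}
		rows = append(rows, []interface{}{p.Latitude, p.Longitude, label, p.Count})
	}
	return rows
}

func main() {
	// Placeholder values for illustration only, not real figures.
	rows := geoChartRows([]PlaceCount{
		{CountryRegion: "Singapore", Latitude: 1.28, Longitude: 103.83, Count: 100},
	})
	out, err := json.Marshal(rows)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(string(out))
}
```

The resulting JSON array can then be passed to the chart code on the web page.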

Next, I use a web page built with Material Design to display the charts. Please enjoy the screenshots.

That’s all for the COVID-19 dashboard done using Golang and Google BigQuery. Also, thanks to JHU CSSE and Google, we are able to access such important data for free.

Finally, I’d like to wish that all of you and your loved ones stay safe and healthy.