On a chilly November morning, I attended Google DevFest 2024 in Singapore. Together with my friends, I joined a workshop titled “Gemini Masterclass: How to Unlock Its Power with Prompting, Functions, and Agents.” The session was led by two incredible speakers, Martin Andrews and Sam Witteveen.

Martin holds a PhD in Machine Learning and has been an Open Source advocate since 1999. Sam is a Google Developer Expert in Machine Learning. Both of them are also organisers of the Machine Learning Singapore Meetup group. Together, they delivered an engaging and hands-on workshop about Gemini, the advanced LLM from Google.

Thanks to their engaging Gemini Masterclass, I have taken my first steps into the world of LLMs. This blog post captures what I learned and my journey into the fascinating world of Gemini.

About LLM and Gemini

LLM stands for Large Language Model. To most people, an LLM is like a smart friend who can answer almost all our questions with responses that are often accurate and helpful.

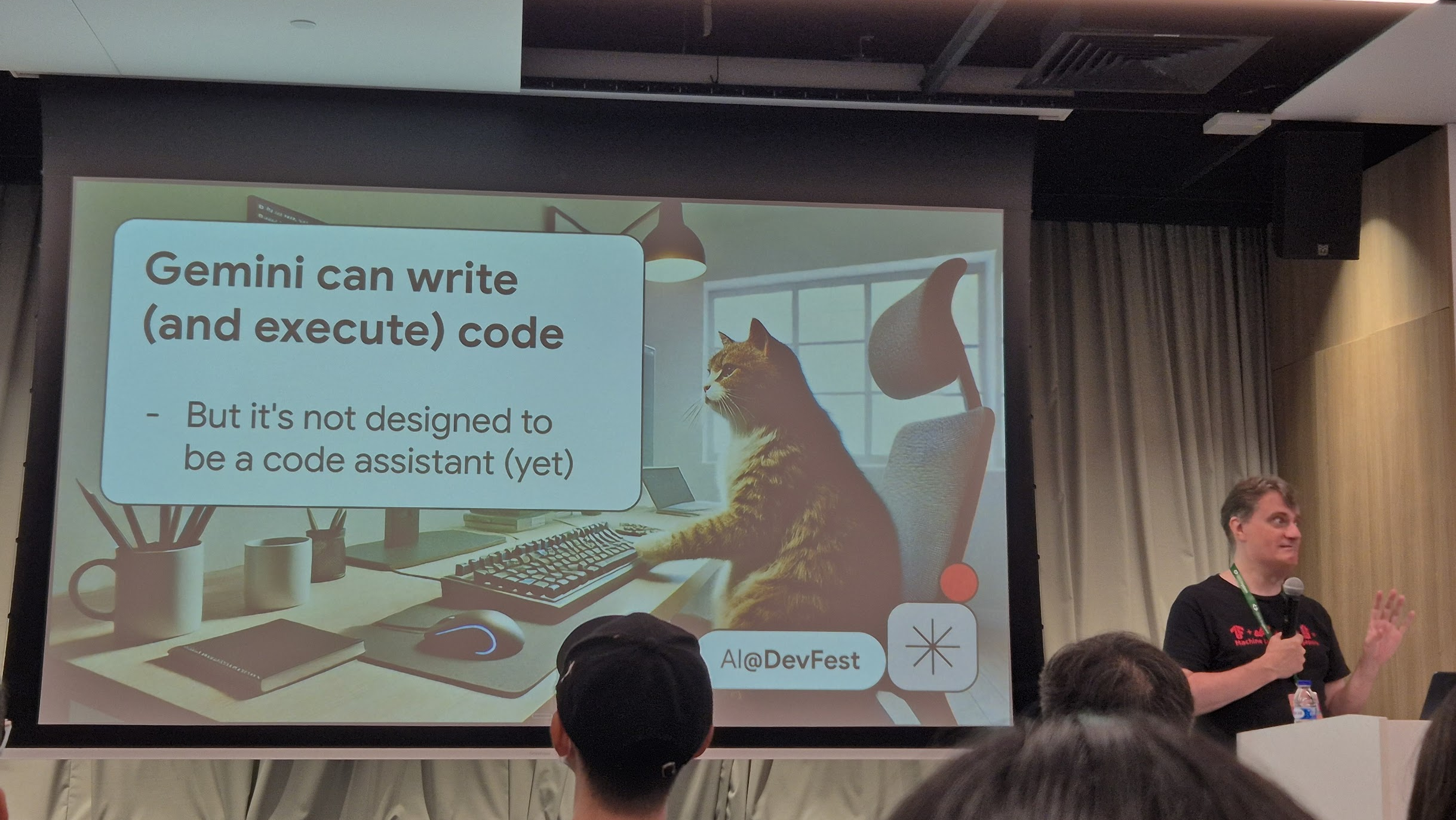

As an LLM, Gemini is trained on a large amount of text data and can perform a wide range of tasks: answering questions, writing stories, summarising long documents, or even helping to debug code. What makes LLMs special is their ability to “understand” and generate language in a way that feels natural to us.

Many of my developer friends have started using Gemini as a coding assistant in their IDEs. While it is good at that, Gemini is much more than just a coding tool.

Gemini is designed to not only respond to prompts but also act as an assistant with an extra set of tools. To make the most of Gemini, it is important to understand how it works and what it can (and cannot) do. With the knowledge gained from the DevFest workshop, I decided to explore how Gemini could assist with optimising relic choices in a game called Honkai: Star Rail.

Honkai: Star Rail and Gemini for Its Relic Recommendations

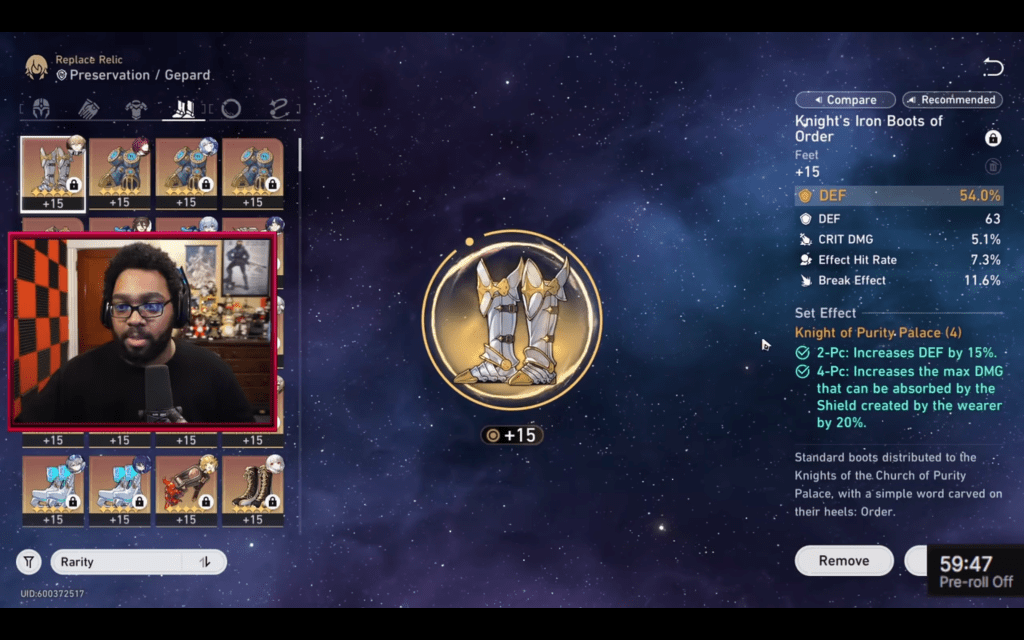

Honkai: Star Rail (HSR) is a popular RPG that has captured the attention of players worldwide. One of the key features of the game is its relic system, where players equip their characters with relics like hats, gloves, or boots to boost stats and unlock special abilities. Each relic has unique attributes, and selecting the right sets of relics for a character can make a huge difference in gameplay.

As a casual player, I often found myself overwhelmed by the number of options and the subtle synergies between different relic sets. Finding a good relic combination for each character was time-consuming.

This is where LLMs like Gemini come into play. With the ability to process and analyse complex data, Gemini can help players make smarter decisions.

In this blog post, I will briefly show how this Gemini-powered relic recommendation system can analyse a player’s current characters to suggest the best options for them. Then it will also explain the logic behind its recommendations, helping us to understand why certain relics are ideal.

Set Up the Project

To make my project code available to everyone, I used Google Colab, a hosted Jupyter Notebook service that requires no setup to use and provides free access to computing resources, including GPUs and TPUs. You can access my code by clicking on the button below.

In my project, I used the google-generativeai Python library, which is pre-installed in Colab. This library serves as a user-friendly API for interacting with Google LLMs, including Gemini. It makes it easy for us to integrate Gemini capabilities directly into our code.

Next, we will need to import the necessary libraries.

The first library to import is google.generativeai. Without it, we cannot interact with Gemini easily. Then we have google.colab.userdata, which securely retrieves sensitive data, like our API key, directly from the Colab notebook environment.

We will also use IPython.display for displaying results in a readable format, such as Markdown.

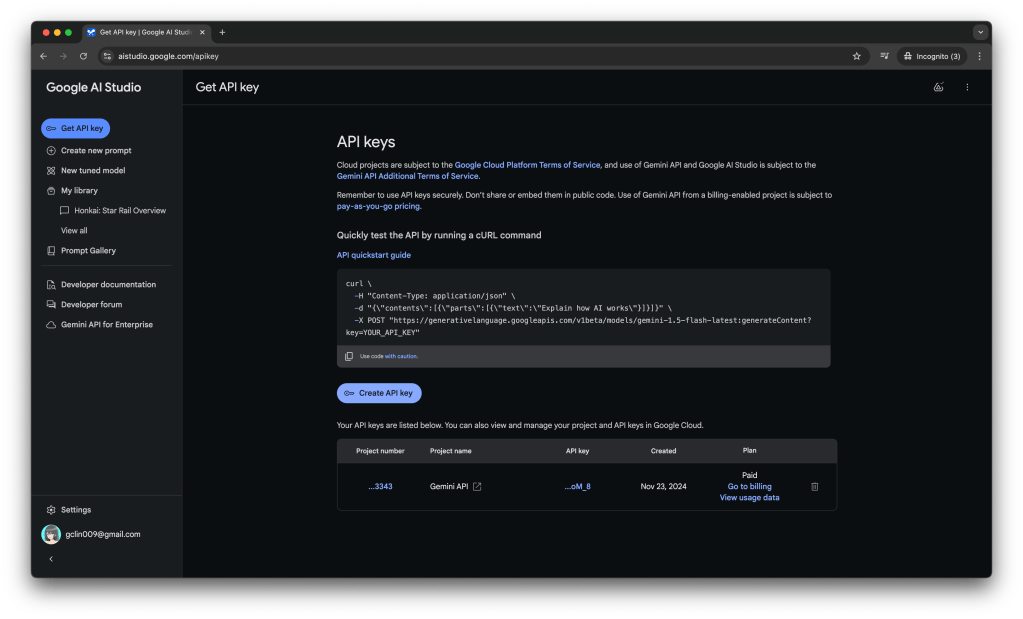

In the Secret section, we will have two records, i.e.

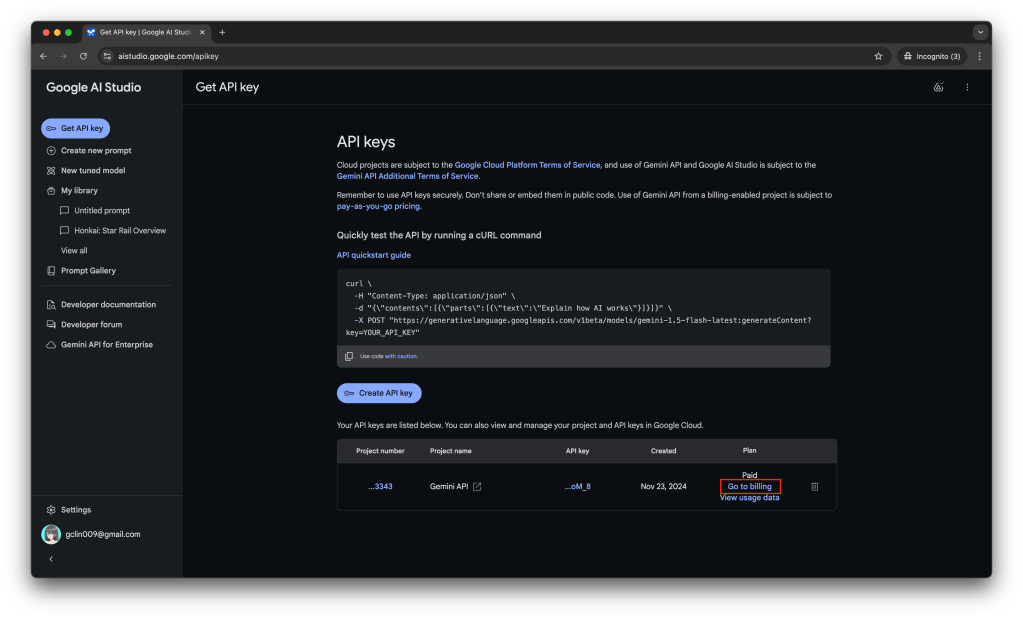

- HONKAI_STAR_RAIL_PLAYER_ID: Your HSR player UID. It is used later to personalise relic recommendations.

- GOOGLE_API_KEY: The API key that we can get from Google AI Studio to authenticate with Gemini.
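To make the secret lookup a bit more concrete, here is a minimal sketch of a helper that reads a secret from Colab when it is available and falls back to an environment variable otherwise. The helper name `get_secret` and the fallback behaviour are my own assumptions for illustration; only `google.colab.userdata.get` comes from the Colab environment itself.

```python
import os


def get_secret(name: str) -> str:
    """Return a secret from Colab if available, otherwise from the environment.

    Hypothetical helper: the environment-variable fallback is an assumption
    that makes the notebook code usable outside Colab as well.
    """
    try:
        # google.colab can only be imported inside a Colab runtime.
        from google.colab import userdata
    except ImportError:
        value = os.environ.get(name)
        if value is None:
            raise KeyError(f"Secret {name!r} is not set") from None
        return value
    return userdata.get(name)
```

Calling `get_secret("GOOGLE_API_KEY")` would then work both in the notebook and in a local script, provided the variable is exported locally.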

Once we have initialised the google.generativeai library with the GOOGLE_API_KEY, we can proceed to specify the Gemini model we will be using.

The choice of model is crucial in LLM projects. Google AI Studio offers several options, each representing a trade-off between accuracy and cost. For my project, I chose models/gemini-1.5-flash-8b-001, which provided a good balance for this experiment. Larger models might offer slightly better accuracy, but at a significantly higher cost.

Hallucination and Knowledge Limitation

We often think of LLMs like Gemini as our smart friends who can answer any question. But just like even our smartest friend can sometimes make mistakes, LLMs have their limits too.

Gemini's knowledge is based on the data it was trained on, which means it doesn’t actually know everything. Sometimes it might hallucinate, i.e. the model invents information that sounds plausible but is not actually true.

While Gemini is trained on a massive dataset, its knowledge is not unlimited. As a responsible AI, it is designed to acknowledge its limitations: when it cannot find the answer, it should tell us that it lacks the necessary information rather than fabricate a response, although hallucinations can still slip through. This is how Google builds safer AI systems, as part of its Secure AI Framework (SAIF).

To overcome these constraints, we need to employ strategies to augment the capabilities of LLMs. Techniques such as integrating Retrieval-Augmented Generation (RAG) and leveraging external APIs can help bridge the gap between what the model knows and what it needs to know to perform effectively.

System Instructions

Leveraging System Instructions is a way to improve the accuracy and reliability of Gemini responses.

System instructions are prompts given before the main query in order to guide Gemini. These instructions provide crucial context and constraints, significantly enhancing the accuracy and reliability of the generated output.

The specific design and phrasing of the system instructions provided to Gemini are crucial. Effective system instructions give Gemini the context and constraints it needs to generate accurate and relevant responses. Without carefully crafted system instructions, even the most well-designed prompt can yield poor results.
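As a rough illustration of what such instructions could look like, the sketch below assembles a system instruction string from a list of character names. The function name `build_system_instruction`, the wording of the instruction, and the sample character names are illustrative assumptions, not the instructions used in my notebook.

```python
def build_system_instruction(character_names: list[str]) -> str:
    """Build a system instruction that states the role, the limits, and the roster."""
    roster = "\n".join(f"- {name}" for name in sorted(character_names))
    return (
        "You are an assistant for the game Honkai: Star Rail.\n"
        "Only discuss the characters listed below. If a question cannot be "
        "answered from the provided information, say so instead of guessing.\n"
        "Known characters:\n"
        f"{roster}"
    )


# Sample names for illustration only.
instruction = build_system_instruction(["Lingsha", "Acheron"])
print(instruction)

# With the google-generativeai library, the text would be passed when
# creating the model, for example:
# model = genai.GenerativeModel(
#     "models/gemini-1.5-flash-8b-001", system_instruction=instruction)
```

Keeping the instruction in a function makes it easier to iterate on the wording and to regenerate it when the character list changes.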

Context Framing

As we can see from the example above, writing clear and effective system instructions requires careful thought and a lot of testing.

This is just one part of a much bigger picture called Context Framing, which includes preparing data, creating embeddings, and deciding how the system retrieves and uses that data. Each of these steps needs expertise and planning to make sure the solution works well in real-world scenarios.

You might have heard the term “Prompt Engineering”. It sounds technical, but it is really about figuring out how to ask the LLM the right questions in the right way to get the best answers.

While context framing and prompt engineering are closely related and often overlap, they emphasise different aspects of the interaction with the LLM.

Stochasticity

While experimenting with Gemini, I noticed that even if I use the exact same prompt, the output can vary slightly each time. This happens because LLMs like Gemini have a built-in element of randomness, known as Stochasticity.

For example, when querying for DPS characters, Lingsha was inconsistently included in the results. While this might seem like a minor variation, it underscores the probabilistic nature of LLM outputs and suggests that running multiple queries might be needed to obtain a more reliable consensus.
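One simple way to obtain such a consensus is to repeat the query several times and keep only the names that appear in most of the answers. The sketch below is a minimal illustration under stated assumptions: `consensus` is a hypothetical helper, and `fake_model_call` stands in for a real Gemini call by replaying scripted answers with placeholder character names.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def consensus(ask: Callable[[], list[str]], runs: int = 5, min_share: float = 0.6) -> list[str]:
    """Call `ask` several times and keep names returned in at least `min_share` of runs."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    counts: Counter[str] = Counter()
    for _ in range(runs):
        # Use a set so a name is counted at most once per run.
        counts.update(set(ask()))
    return sorted(name for name, n in counts.items() if n / runs >= min_share)


# Scripted stand-in for a stochastic model: Lingsha shows up in 2 of 5 answers.
_scripted = cycle([
    ["Character A", "Character B"],
    ["Character A", "Character B", "Lingsha"],
    ["Character A", "Character B"],
    ["Character A", "Character B"],
    ["Character A", "Character B", "Lingsha"],
])


def fake_model_call() -> list[str]:
    return next(_scripted)


print(consensus(fake_model_call, runs=5))
```

The threshold is a judgment call: a higher `min_share` gives a stricter, more stable list at the price of dropping borderline answers.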

Hence, it is important to treat writing effective system instructions and prompts as an iterative process, so that we can experiment with different phrasings to find what works best and yields the most consistent results.

Temperature Tuning

We can also reduce the stochasticity of Gemini's responses by adjusting parameters like temperature. Lower temperatures typically reduce randomness, leading to more consistent outputs, but may also reduce creativity and diversity.

Temperature is an important parameter for balancing predictability and diversity in the output. It is a number in the range 0.0 to 2.0 (the default is 1.0 for the gemini-1.5-flash model) that controls how sharply peaked the probability distribution over the vocabulary is when the model picks the next token. A lower temperature makes the model more likely to select the highest-probability tokens, resulting in more predictable and focused text, while a higher temperature flattens the distribution and gives less likely tokens more of a chance.
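The effect of temperature can be illustrated with the textbook softmax-with-temperature formula. This is a sketch of the general technique, not a description of Gemini's internals; the scores in `logits` are made up for the example.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # A temperature of 0 is usually treated as "always pick the top token";
        # this sketch only handles positive values.
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At the lowest temperature almost all of the probability mass lands on the first token, while at the highest the three candidates end up much closer together.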

Function Calls

A major limitation of using system instructions alone is their static nature.

For example, my initial system instructions included a list of HSR characters, but this list is static. The list does not include newly released characters or characters specific to the player’s account. In order to dynamically access a player’s character database and provide personalised recommendations, I integrated Function Calls to retrieve real-time data.

For fetching the player’s HSR character data, I leveraged the open-source Python library mihomo. This library provides an interface for accessing game data, enabling dynamic retrieval of a player’s characters and their attributes. This dynamic data retrieval is crucial for generating truly personalised relic recommendations.

Defining the functions in my Python code was only the first step. To use function calls, Gemini needed to know which functions were available. We can provide this information to Gemini as shown below.

model = genai.GenerativeModel('models/gemini-1.5-flash-8b-001', tools=[get_player_name, get_player_starfaring_companions])

After we pass a query to Gemini, the model returns a structured object that includes the names of relevant functions and their arguments based on the prompt, as shown in the screenshot below.

Using descriptive function names is essential for successful function calling with LLMs because the accuracy of function calls depends heavily on well-designed function names in our Python code. Inaccurate naming can directly impact the reliability of the entire system.

If our Python function is named misleadingly, for example a function called get_age that actually returns the person's name, Gemini might wrongly select that function when the prompt asks for an age.

As shown in the screenshot above, the prompt requested information about all the characters of the player. Gemini simply determines which function to call and provides the necessary arguments. Gemini does not directly execute the functions. The actual execution of the function needs to be handled by us, as demonstrated in the screenshot below.

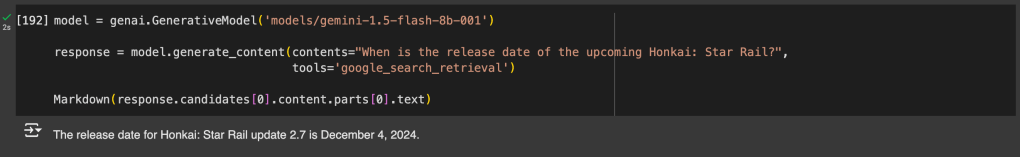
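The hand-off can be sketched without any Gemini call: the model supplies a function name and arguments, and our code looks the function up and runs it. In the sketch below, the two functions are simplified stand-ins with assumed signatures and made-up return values, and the `requested` dictionary imitates the name and arguments that the model's structured response would carry.

```python
def get_player_name(player_id: int) -> str:
    """Stand-in for the real function; returns a made-up name."""
    return f"Trailblazer-{player_id}"


def get_player_starfaring_companions(player_id: int) -> list[str]:
    """Stand-in for the real function; returns a made-up character list."""
    return ["Lingsha"]


# Only functions registered here may be executed on the model's behalf.
AVAILABLE_FUNCTIONS = {
    func.__name__: func
    for func in (get_player_name, get_player_starfaring_companions)
}


def execute_function_call(name: str, args: dict):
    """Run the function the model asked for, rejecting unknown names."""
    try:
        func = AVAILABLE_FUNCTIONS[name]
    except KeyError:
        raise ValueError(f"Model requested an unknown function: {name}") from None
    return func(**args)


# Imitation of what the model asks for; the player ID is a placeholder.
requested = {"name": "get_player_starfaring_companions", "args": {"player_id": 800000001}}
print(execute_function_call(requested["name"], requested["args"]))
```

Using an explicit lookup table rather than calling whatever name the model returns keeps the set of executable functions under our control.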

Grounding with Google Search

Function calls are a powerful way to access external data, but they require pre-defined functions and APIs.

To go beyond these limits and gather information from many online sources, we can use Gemini's grounding feature with Google Search. This feature allows Gemini to search Google and include what it finds in its answers. This makes it easier to get up-to-date information and handle questions that need real-time data.

With this feature enabled, we can ask Gemini to get real-time data from the Internet, as shown below.

Building a Semantic Knowledge Base with Pinecone

System instructions and Google Search grounding provide valuable context, but the next challenge is to manage the extensive data about HSR relics. We need a way to store and quickly retrieve this information so that the system can generate timely and accurate relic recommendations. A vector database is well suited to managing this dataset of relic information.

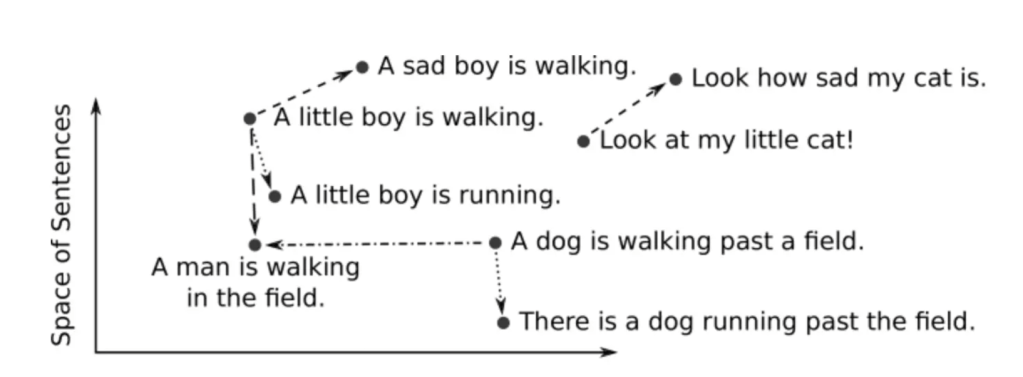

Vector databases, unlike traditional databases that rely on keyword matching, store information as vectors, which enables efficient similarity searches. This allows us to retrieve relevant relic sets based on the semantic meaning of a query, rather than relying solely on keywords.

There are many options for vector databases, but I chose Pinecone. As a managed service, Pinecone offered the scalability needed to handle the HSR relic dataset and the robust API essential for reliable data access. Its free tier was also a significant factor because it allowed me to keep costs low during the development of my project.

Pinecone’s well-documented API and straightforward SDK make integration surprisingly easy. To get started, simply follow the Pinecone documentation to install the SDK and retrieve the API key.

    # Import the Pinecone library
    from pinecone.grpc import PineconeGRPC as Pinecone
    from pinecone import ServerlessSpec
    import time

    # Initialize a Pinecone client with your API key
    pc = Pinecone(api_key=userdata.get('PINECONE_API_KEY'))

I prepared my Honkai: Star Rail relic data, which I had previously organised into a JSON structure. This data includes information on each relic set’s two-piece and four-piece effects. Here’s a snippet to illustrate the format:

    [
        {
            "name": "Sacerdos' Relived Ordeal",
            "two_piece": "Increases SPD by 6%",
            "four_piece": "When using Skill or Ultimate on one ally target, increases the ability-using target's CRIT DMG by 18%, lasting for 2 turn(s). This effect can stack up to 2 time(s)."
        },
        {
            "name": "Scholar Lost in Erudition",
            "two_piece": "Increases CRIT Rate by 8%",
            "four_piece": "Increases DMG dealt by Ultimate and Skill by 20%. After using Ultimate, additionally increases the DMG dealt by the next Skill by 25%."
        },
        ...
    ]

With the relic data organised as JSON, the next challenge is to enable similarity searches using vector embeddings. The following code snippet uses Pinecone's inference API to convert the textual descriptions of relic sets into numerical vector embeddings. These embeddings capture the semantic meaning of the descriptions, allowing Pinecone to identify similar relic sets based on their effects later.

    import json

    # Load relic set data from the JSON file
    with open('/content/hsr-relics.json', 'r') as f:
        relic_data = json.load(f)

    # Prepare data for Pinecone
    relic_info_data = [
        {"id": relic['name'], "text": relic['two_piece'] + " " + relic['four_piece']}  # Combine relic set descriptions
        for relic in relic_data
    ]

    # Generate embeddings using Pinecone
    embeddings = pc.inference.embed(
        model="multilingual-e5-large",
        inputs=[d['text'] for d in relic_info_data],
        parameters={"input_type": "passage", "truncate": "END"}
    )

    print(embeddings)

As shown in the code above, we use the multilingual-e5-large model, a text embedding model from Microsoft Research, to generate a vector embedding for each relic set. The multilingual-e5-large model works well on messy data and is good for short queries.

Pinecone's ability to perform fast similarity searches relies on its indexing mechanism. Without an index, finding similar relic sets would require comparing the query's embedding vector against every stored vector, which becomes extremely slow with a large dataset. I chose a Pinecone serverless index hosted on AWS for its automatic scaling and reduced infrastructure management.

    # Create a serverless index
    index_name = "hsr-relics-index"

    if not pc.has_index(index_name):
        pc.create_index(
            name=index_name,
            dimension=1024,
            metric="cosine",
            spec=ServerlessSpec(
                cloud='aws',
                region='us-east-1'
            )
        )

    # Wait for the index to be ready
    while not pc.describe_index(index_name).status['ready']:
        time.sleep(1)

The dimension parameter specifies the dimensionality of the vector embeddings. It must match the output size of the embedding model; multilingual-e5-large produces 1024-dimensional vectors, hence the value 1024. Higher dimensionality generally allows for capturing more nuanced relationships between data points. For example, two relic sets might both increase ATK, but one might also increase SPD while the other increases CRIT DMG. A higher-dimensional embedding allows the system to capture these subtle distinctions, leading to more relevant recommendations.

The metric parameter defines how the similarity between two vectors (representing relic sets) is measured. We use the cosine metric, which compares the direction of two vectors rather than their length and is well suited to vector embeddings generated from text. This is crucial for understanding how similar two relic descriptions are.
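To show what the cosine metric computes, here is a small pure-Python sketch. The three-dimensional vectors are made up for readability, with axes loosely standing for ATK, SPD, and CRIT DMG; real embeddings from multilingual-e5-large have 1024 dimensions whose axes carry no such direct meaning.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Toy vectors, invented for this example: [ATK, SPD, CRIT DMG].
toy_relic_vectors = {
    "ATK + SPD set": [0.9, 0.8, 0.0],
    "ATK + CRIT DMG set": [0.9, 0.0, 0.8],
}
query = [1.0, 0.9, 0.0]  # a query that cares about ATK and SPD

ranked = sorted(
    toy_relic_vectors,
    key=lambda name: cosine_similarity(query, toy_relic_vectors[name]),
    reverse=True,
)
print(ranked)
```

Because only the direction matters, scaling a vector by a constant does not change its cosine similarity to the query.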

With the vector embeddings generated, the next step is to upload them into the Pinecone index. Pinecone uses the upsert function to add or update vectors in the index. The following code snippet shows how we can upsert the generated embeddings into the Pinecone index.

    # Target the index where you'll store the vector embeddings
    index = pc.Index("hsr-relics-index")

    # Prepare the records for upsert
    # Each contains an 'id', the embedding 'values', and the original text as 'metadata'
    records = []
    for r, e in zip(relic_info_data, embeddings):
        records.append({
            "id": r['id'],
            "values": e['values'],
            "metadata": {'text': r['text']}
        })

    # Upsert the records into the index
    index.upsert(
        vectors=records,
        namespace="hsr-relics-namespace"
    )

The code uses the zip function to iterate through both the list of prepared relic data and the list of generated embeddings simultaneously. For each pair, it creates a record for Pinecone with the following attributes.

- id: Name of the relic set to ensure uniqueness;

- values: The vector representing the semantic meaning of the relic set effects;

- metadata: The original description of the relic effects, which will be used later for providing context to the user’s recommendations.

Implementing Similarity Search in Pinecone

With the relic data stored in Pinecone now, we can proceed to implement the similarity search functionality.

    def query_pinecone(query: str) -> dict:
        # Convert the query into a numerical vector that Pinecone can search with
        query_embedding = pc.inference.embed(
            model="multilingual-e5-large",
            inputs=[query],
            parameters={
                "input_type": "query"
            }
        )

        # Search the index for the three most similar vectors
        results = index.query(
            namespace="hsr-relics-namespace",
            vector=query_embedding[0].values,
            top_k=3,
            include_values=False,
            include_metadata=True
        )

        return results

The function above takes a user’s query as input, converts it into a vector embedding using Pinecone’s inference endpoint, and then uses that embedding to search the index, returning the top three most similar relic sets along with their metadata.

Relic Recommendations with Pinecone and Gemini

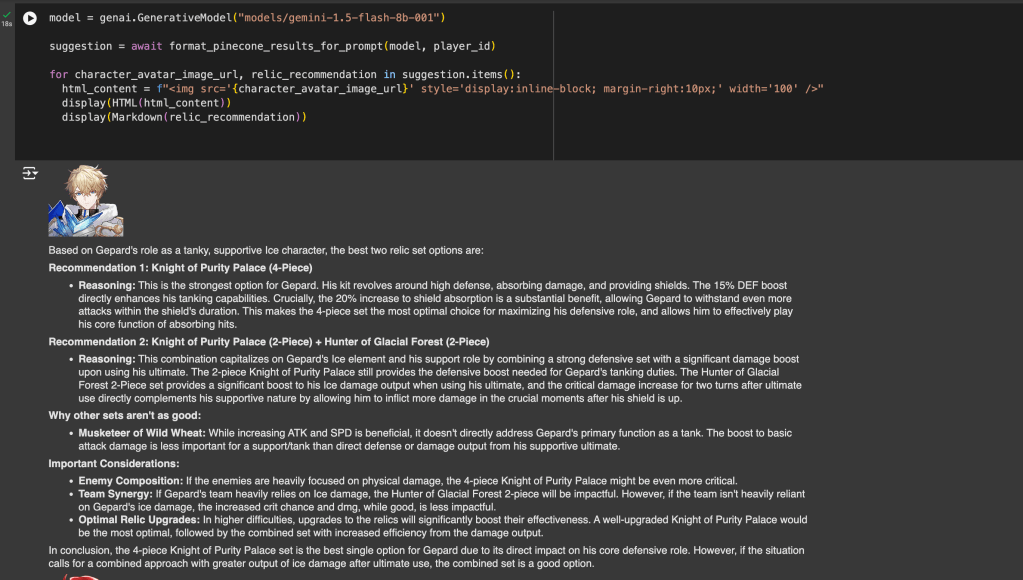

With Pinecone integrated, we design the initial prompt to pick relevant relic sets from Pinecone. After that, we take the results from Pinecone and combine them with the initial prompt to create a richer, more informative prompt for Gemini, as shown in the following code.

    from google.generativeai.generative_models import GenerativeModel

    async def format_pinecone_results_for_prompt(model: GenerativeModel, player_id: int) -> dict:
        character_relics_mapping = await get_player_character_relic_mapping(player_id)
        result = {}

        for character_name, (character_avatar_image_url, character_description) in character_relics_mapping.items():
            print(f"Processing Character: {character_name}")

            additional_character_data = character_profile.get(character_name, "")
            character_query = f"Suggest some good relic sets for this character: {character_description} {additional_character_data}"
            pinecone_response = query_pinecone(character_query)

            prompt = f"User Query: {character_query}\n\nRelevant Relic Sets:\n"
            for match in pinecone_response['matches']:
                prompt += f"* {match['id']}: {match['metadata']['text']}\n"  # Extract relevant data

            prompt += "\nBased on the above information, recommend two best relic sets and explain your reasoning. Each character can only equip with either one 4-piece relic or one 2-piece relic with another 2-piece relic. You cannot recommend a combination of 4-piece and 2-piece together. Consider the user's query and the characteristics of each relic set."

            response = model.generate_content(prompt)
            result[character_avatar_image_url] = response.text

        return result

The code shows that we are doing both prompt engineering (designing the initial query to get relevant relics) and context framing (combining the initial query with the retrieved relic information to get a better overall recommendation from Gemini).

First, the code retrieves data about the player’s characters, including their descriptions, images, and the relics they are currently wearing. It then gathers additional data about each character from a separate data source, character_profile, which holds more information, such as gameplay mechanics, that we collected from the Game8 Character List. Using this character data, the query finds similar relic sets in the Pinecone index.

After Pinecone returns matches, the code constructs a detailed prompt for the Gemini model. This prompt includes the character’s description, the relevant relic sets found by Pinecone, and crucial instructions for the model. The instructions emphasise the constraints of choosing relic sets: either a 4-piece set or two 2-piece sets, not a mix. Importantly, the prompt also tells Gemini to consider the user’s query and the characteristics of each relic set.
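Since the model may still ignore a constraint stated in the prompt, the rule can also be checked in code once a recommendation has been turned into structured data. The sketch below is an assumption on my part rather than something in the notebook: `is_valid_relic_loadout` is a hypothetical helper, and turning Gemini's free-text answer into the `pieces` dictionary is not covered here.

```python
def is_valid_relic_loadout(pieces: dict[str, int]) -> bool:
    """Check the rule from the prompt: one 4-piece set, or two different 2-piece sets.

    `pieces` maps a relic set name to the number of pieces recommended from it.
    """
    counts = sorted(pieces.values())
    return counts == [4] or counts == [2, 2]


# A full 4-piece set is allowed.
print(is_valid_relic_loadout({"Scholar Lost in Erudition": 4}))

# Two different 2-piece sets are allowed.
print(is_valid_relic_loadout({"Scholar Lost in Erudition": 2, "Sacerdos' Relived Ordeal": 2}))

# A 4-piece set mixed with a 2-piece set is rejected.
print(is_valid_relic_loadout({"Scholar Lost in Erudition": 4, "Sacerdos' Relived Ordeal": 2}))
```

A failed check could be used as a signal to re-run the query or to flag the recommendation for manual review.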

Finally, the code sends this detailed prompt to Gemini, receiving back the recommended relic sets.

Langtrace

Using LLMs like Gemini is certainly exciting, but figuring out what is happening “under the hood” can be tricky.

If you are a web developer, you are probably familiar with Grafana dashboards. They show you how your web app is performing, highlighting areas that need improvement.

Langtrace is like Grafana, but specifically for LLMs. It gives us a similar visual overview, tracking our LLM calls, showing us where they are slow or failing, and helping us optimise the performance of our AI app.

Langtrace is not only useful for tracing our LLM calls, it also offers metrics on token counts and costs, as shown in the following screenshot.

Wrap-Up

Building this Honkai: Star Rail (HSR) relic recommendation system was a rewarding journey into the world of Gemini and LLMs.

I am incredibly grateful to Martin Andrews and Sam Witteveen for their inspiring Gemini Masterclass at Google DevFest in Singapore. Their guidance helped me navigate the complexities of LLM development, and I learned firsthand the importance of careful prompt engineering, the power of system instructions, and the need for dynamic data access through function calls. These lessons underscore the complexities of developing robust LLM apps and will undoubtedly inform my future AI projects.

Building this project was an enjoyable journey of learning and discovery. I encountered many challenges along the way, but overcoming them deepened my understanding of Gemini. If you’re interested in exploring the code and learning from my experiences, you can access my Colab notebook through the button below. I welcome any feedback you might have!