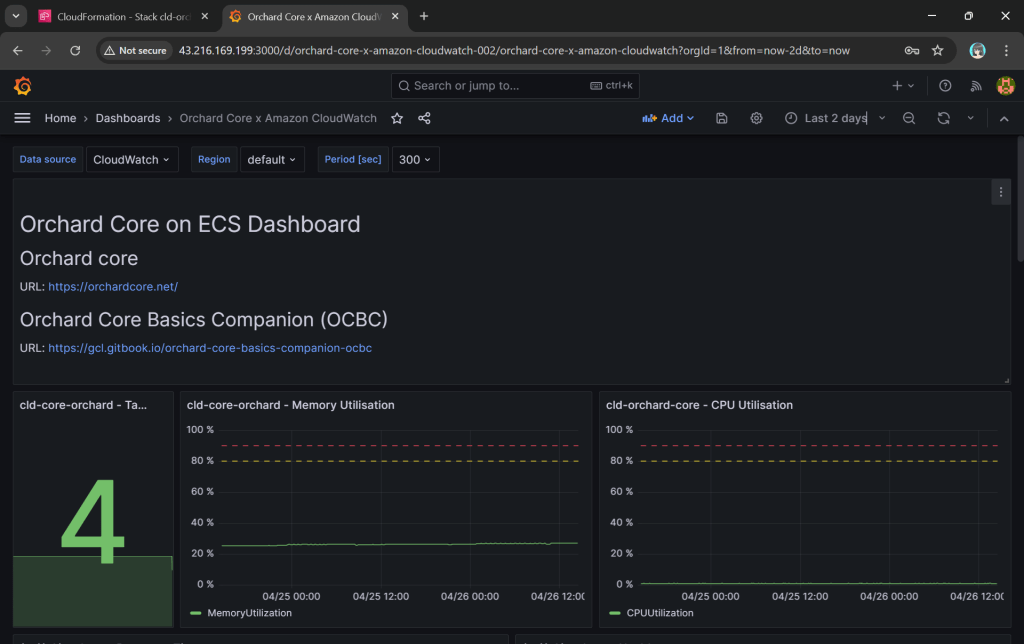

In the previous article, we discussed how to build a custom monitoring pipeline with Grafana running on Amazon ECS to receive metrics and logs, two of the pillars of observability, sent from Orchard Core on Amazon ECS. Today, we will move on to the third pillar of observability: traces.

Source Code

The CloudFormation templates and relevant C# source code discussed in this article are available on GitHub as part of the Orchard Core Basics Companion (OCBC) Project: https://github.com/gcl-team/Experiment.OrchardCore.Main.

About Grafana Tempo

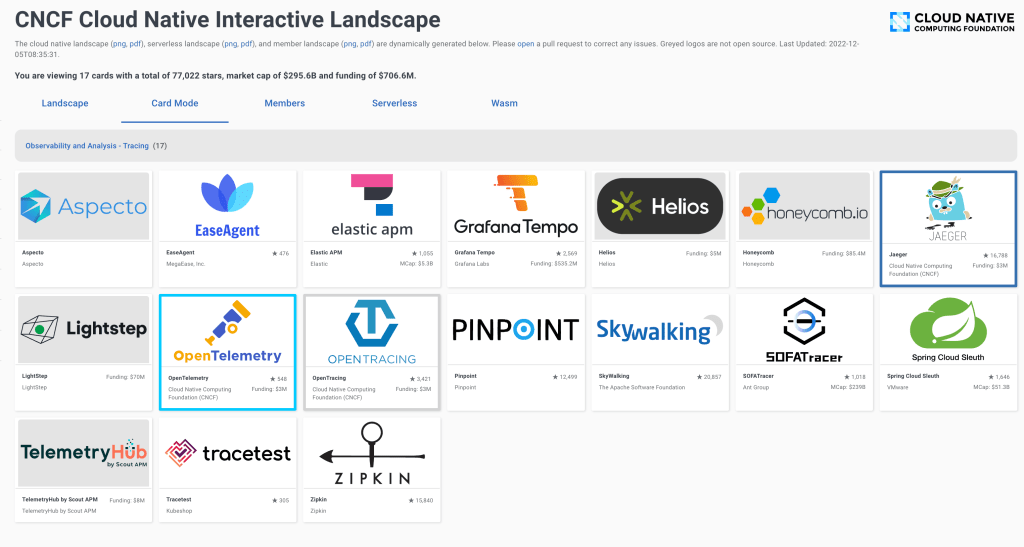

To capture and visualise traces, we will use Grafana Tempo, an open-source, scalable, and cost-effective tracing backend developed by Grafana Labs. Unlike many other tracing backends, Tempo does not require an index, which makes it easy to operate and scale.

We choose Tempo because it is fully compatible with OpenTelemetry, the open standard for collecting distributed traces, which ensures flexibility and vendor neutrality. In addition, Tempo seamlessly integrates with Grafana, allowing us to visualise traces alongside metrics and logs in a single dashboard.

Finally, being a Grafana Labs project means Tempo has strong community backing and continuous development.

About OpenTelemetry

With a solid understanding of why Tempo is our tracing backend of choice, let’s now dive deeper into OpenTelemetry, the open-source framework we use to instrument our Orchard Core app and generate the trace data Tempo collects.

OpenTelemetry is a Cloud Native Computing Foundation (CNCF) project and a vendor-neutral, open standard for collecting traces, metrics, and logs from our apps. This makes it an ideal choice for building a flexible observability pipeline.

OpenTelemetry provides SDKs for instrumenting apps across many programming languages, including C# via the .NET SDK, which we use for Orchard Core.

OpenTelemetry uses the standard OTLP (OpenTelemetry Protocol) to send telemetry data to any compatible backend, such as Tempo, allowing seamless integration and interoperability.

Set Up Tempo on EC2 with CloudFormation

It is straightforward to deploy Tempo on EC2.

Let’s walk through the EC2 UserData script that installs and configures Tempo on the instance.

First, we download the Tempo release binary, extract it, move it to a proper system path, and ensure it is executable.

```bash
wget https://github.com/grafana/tempo/releases/download/v2.7.2/tempo_2.7.2_linux_amd64.tar.gz
tar -xzvf tempo_2.7.2_linux_amd64.tar.gz
mv tempo /usr/local/bin/tempo
chmod +x /usr/local/bin/tempo
```

Next, we create a basic Tempo configuration file at /etc/tempo.yaml to define how Tempo listens for traces and where it stores trace data.

```bash
echo "
server:
  http_listen_port: 3200

distributor:
  receivers:
    otlp:
      protocols:
        grpc:
          endpoint: 0.0.0.0:4317
        http:
          endpoint: 0.0.0.0:4318

storage:
  trace:
    backend: local
    local:
      path: /tmp/tempo/traces
" > /etc/tempo.yaml
```

Let’s break down the configuration file above.

The http_listen_port sets the HTTP port (3200) for Tempo's internal web server. This port serves health checks and Prometheus metrics, as well as the query API that Grafana uses to fetch traces.

After that, we configure where Tempo listens for incoming trace data. In the configuration above, we enabled OTLP receivers via both gRPC and HTTP, the two protocols that OpenTelemetry SDKs and agents use to send data to Tempo. Here, the ports 4317 (gRPC) and 4318 (HTTP) are standard for OTLP.

Last but not least, for demonstration purposes, we use the simplest storage option, local storage, which writes trace data to the EC2 instance disk under /tmp/tempo/traces. This is fine for testing or small setups, but /tmp is not meant for durable data, so for production we will likely want an object store such as Amazon S3.

In addition, since we are using local storage on EC2, we can SSH into the EC2 instance and directly inspect whether traces are being written, which is incredibly helpful during debugging. Running the following command shows whether files are being generated when our Orchard Core app emits traces.

```bash
ls -R /tmp/tempo/traces
```

The configuration above is intentionally minimal. As our setup grows, we can explore advanced options like remote storage, multi-tenancy, or even scaling with Tempo components.

Finally, we create a systemd unit file so that Tempo starts on boot and automatically restarts if it crashes.

```bash
cat <<EOF > /etc/systemd/system/tempo.service
[Unit]
Description=Grafana Tempo service
After=network.target

[Service]
ExecStart=/usr/local/bin/tempo -config.file=/etc/tempo.yaml
Restart=always
RestartSec=5
User=root
LimitNOFILE=1048576

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reexec
systemctl daemon-reload
systemctl enable --now tempo
```

This systemd service ensures that Tempo runs in the background and automatically starts up after a reboot or a crash. This setup is crucial for a resilient observability pipeline.
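To confirm that the service actually came up, the UserData script could poll Tempo's /ready endpoint on the http_listen_port (3200) after enabling the unit. This is a minimal sketch that assumes curl is installed on the instance; the function name wait_for_tempo and the retry budget are our own illustrative choices. Polling is used because Tempo can report "not ready" for a short while right after it starts.

```bash
#!/usr/bin/env bash
# Sketch: poll Tempo's readiness endpoint after "systemctl enable --now tempo".
# Assumptions: curl is installed and Tempo listens on http_listen_port 3200.
set -euo pipefail

# wait_for_tempo URL [MAX_ATTEMPTS]
# Tries URL once per second. Returns 0 as soon as it answers with a 2xx status,
# or 1 once MAX_ATTEMPTS attempts (default 30) have all failed.
wait_for_tempo() {
  local url="$1"
  local max_attempts="${2:-30}"
  local attempt
  for ((attempt = 1; attempt <= max_attempts; attempt++)); do
    # -f turns HTTP errors such as 503 (not ready yet) into a non-zero exit code
    if curl -fsS --max-time 2 "$url" >/dev/null 2>&1; then
      echo "Tempo is ready after ${attempt} attempt(s)"
      return 0
    fi
    sleep 1
  done
  echo "Tempo did not become ready at ${url}" >&2
  return 1
}
```

For example, calling `wait_for_tempo http://localhost:3200/ready 60` right after `systemctl enable --now tempo` makes a failed start visible in the instance's cloud-init output log instead of going unnoticed.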

Understanding OTLP Transport Protocols

In the previous section, we configured Tempo to receive OTLP data over both gRPC and HTTP. OTLP supports both transport protocols, and each comes with its own strengths and trade-offs. Let’s break them down.

Tempo has native support for gRPC, and many OpenTelemetry SDKs default to using it. gRPC is a modern, high-performance transport protocol built on top of HTTP/2. It is the preferred option when performance is critical. gRPC also keeps a long-lived HTTP/2 connection open and multiplexes requests over it, which makes it well suited to high-throughput scenarios where telemetry data is sent continuously.

However, gRPC is not natively supported in browsers, so it is not ideal for frontend or web-based telemetry collection unless a proxy or gateway is used. In such scenarios, we normally choose HTTP, which is browser-friendly. HTTP is a more traditional request/response protocol that works well in restricted environments.

Since we are collecting telemetry from a server-side app like Orchard Core running on ECS, gRPC is typically the better choice due to its performance benefits and native support in Tempo.

Please take note that gRPC requires HTTP/2, and some environments, for example IoT devices and embedded systems, might not have mature gRPC client support. Hence, OTLP over HTTP is often preferred in simpler or constrained systems.

gRPC allows multiplexing over a single connection using HTTP/2. Hence, in gRPC, all telemetry signals, i.e. logs, metrics, and traces, can be sent concurrently over one connection. However, with HTTP, each telemetry signal needs a separate POST request to its own endpoint as listed below to enforce clean schema boundaries, simplify implementation, and stay aligned with HTTP semantics.

- Logs: /v1/logs;
- Metrics: /v1/metrics;
- Traces: /v1/traces.

In HTTP, since each signal has its own POST endpoint with its own protobuf schema in the body, there is no need for the receiver to guess what is in the body.
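To see the per-signal endpoint in action without instrumenting an app, we can hand-craft a single span and POST it to /v1/traces. The sketch below assumes Tempo is reachable from where it runs and that curl is available. It uses the JSON encoding of OTLP (Content-Type: application/json), which OTLP/HTTP allows alongside binary protobuf; in that encoding, trace and span IDs are hex strings. The helper names, the service name otlp-http-smoke-test, and the span name are hypothetical, and reading the trace back relies on Tempo's /api/traces/<traceid> query endpoint on port 3200.

```bash
#!/usr/bin/env bash
# Sketch: push one hand-made span to Tempo over OTLP/HTTP (JSON encoding)
# and read it back through Tempo's query API. Names are illustrative only.
set -euo pipefail

# new_hex_id BYTES -> prints BYTES random bytes as lowercase hex
new_hex_id() {
  head -c "$1" /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# make_test_trace TRACE_ID SPAN_ID -> prints a minimal OTLP/JSON trace export request
make_test_trace() {
  local trace_id="$1" span_id="$2"
  local start_ns=$(( $(date +%s) * 1000000000 ))
  local end_ns=$(( start_ns + 250000000 ))   # span lasts 250 ms
  cat <<EOF
{"resourceSpans":[{
  "resource":{"attributes":[{"key":"service.name","value":{"stringValue":"otlp-http-smoke-test"}}]},
  "scopeSpans":[{
    "scope":{"name":"manual"},
    "spans":[{
      "traceId":"${trace_id}",
      "spanId":"${span_id}",
      "name":"smoke-test-span",
      "kind":1,
      "startTimeUnixNano":"${start_ns}",
      "endTimeUnixNano":"${end_ns}"
    }]
  }]
}]}
EOF
}

# send_test_trace TEMPO_HOST -> POSTs one span to /v1/traces, then queries it back
send_test_trace() {
  local host="$1" trace_id span_id
  trace_id="$(new_hex_id 16)"   # OTLP trace IDs are 16 bytes
  span_id="$(new_hex_id 8)"     # OTLP span IDs are 8 bytes
  make_test_trace "$trace_id" "$span_id" |
    curl -fsS -H 'Content-Type: application/json' \
      --data-binary @- "http://${host}:4318/v1/traces"
  echo "sent trace ${trace_id}"
  sleep 2   # give the ingester a moment before querying
  curl -fsS -H 'Accept: application/json' "http://${host}:3200/api/traces/${trace_id}"
}
```

Running `send_test_trace <tempo-ec2-host>` should print the generated trace ID and, if the span arrived, the trace as JSON.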

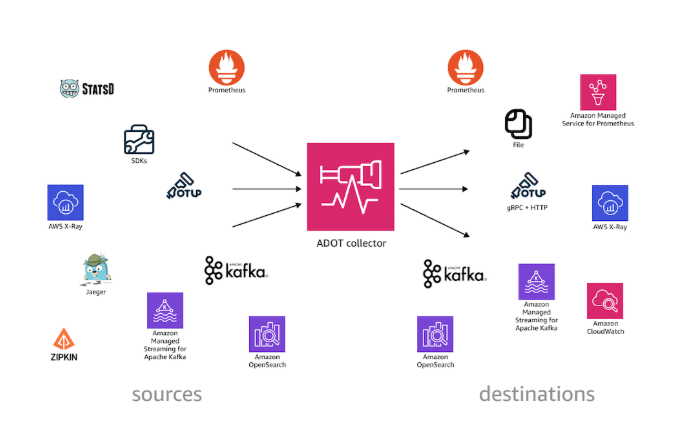

AWS Distro for OpenTelemetry (ADOT)

Now that we have Tempo running on EC2 and understand the OTLP protocols it supports, the next step is to instrument our Orchard Core app to generate and send trace data.

The following code snippet shows what a typical direct integration with Tempo might look like in an Orchard Core app.

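```csharp
// Requires the OpenTelemetry.Extensions.Hosting, OpenTelemetry.Instrumentation.AspNetCore,
// OpenTelemetry.Exporter.OpenTelemetryProtocol and OpenTelemetry.Exporter.Console NuGet packages.
using OpenTelemetry.Exporter;
using OpenTelemetry.Resources;
using OpenTelemetry.Trace;

builder.Services
    .AddOpenTelemetry()
    .ConfigureResource(resource => resource.AddService(serviceName: "cld-orchard-core"))
    .WithTracing(tracing => tracing
        // Create spans for incoming ASP.NET Core requests
        .AddAspNetCoreInstrumentation()
        .AddOtlpExporter(options =>
        {
            // Send spans straight to Tempo's OTLP gRPC receiver
            options.Endpoint = new Uri("http://<tempo-ec2-host>:4317");
            options.Protocol = OtlpExportProtocol.Grpc;
        })
        // Also print spans to stdout, handy while developing
        .AddConsoleExporter());
```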

This approach works well for simple use cases during development, but it comes with trade-offs worth considering. Firstly, it couples our app directly to the observability backend, reducing flexibility. Secondly, central management becomes harder as we scale to many services or environments.
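A small step towards loosening that coupling, short of introducing a collector, is to move the endpoint out of code. The OpenTelemetry .NET OTLP exporter reads the standard OTEL_EXPORTER_OTLP_ENDPOINT and OTEL_EXPORTER_OTLP_PROTOCOL environment variables, but values assigned inside the AddOtlpExporter options delegate take precedence, so this sketch assumes the options.Endpoint and options.Protocol lines in the C# snippet are removed. The launcher and the assembly name OrchardCore.Cms.Web.dll are hypothetical placeholders.

```bash
#!/usr/bin/env bash
# Sketch: point the OpenTelemetry .NET OTLP exporter at a backend through the
# standard OTEL_* environment variables instead of hard-coding it in Program.cs.
# Assumption: Program.cs no longer sets options.Endpoint / options.Protocol,
# otherwise the values set in code win over these variables.
set -euo pipefail

# Keep any value already provided by the environment (e.g. by the deployment);
# otherwise fall back to a local default.
export OTEL_EXPORTER_OTLP_ENDPOINT="${OTEL_EXPORTER_OTLP_ENDPOINT:-http://localhost:4317}"
# "grpc" targets port 4317; "http/protobuf" would target port 4318 instead.
export OTEL_EXPORTER_OTLP_PROTOCOL="${OTEL_EXPORTER_OTLP_PROTOCOL:-grpc}"

echo "Exporting traces to ${OTEL_EXPORTER_OTLP_ENDPOINT} via ${OTEL_EXPORTER_OTLP_PROTOCOL}"

# Hypothetical entry point: replace with your own Orchard Core assembly, then uncomment.
# exec dotnet OrchardCore.Cms.Web.dll
```

The same binary can then be pointed at a different backend per environment without a rebuild, although the app still talks to that backend directly.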

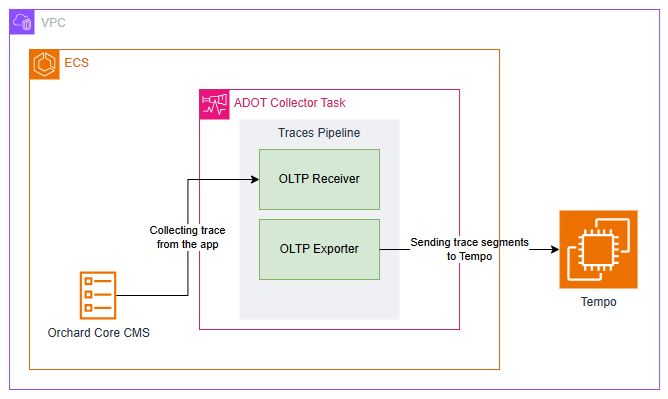

This is where AWS Distro for OpenTelemetry (ADOT) comes into play.

ADOT is a secure, AWS-supported distribution of the OpenTelemetry project that simplifies collecting and exporting telemetry data from apps running on AWS services, such as our Orchard Core app on ECS. ADOT decouples our apps from the observability backend, provides centralised configuration, and offloads telemetry collection and forwarding from the app itself.

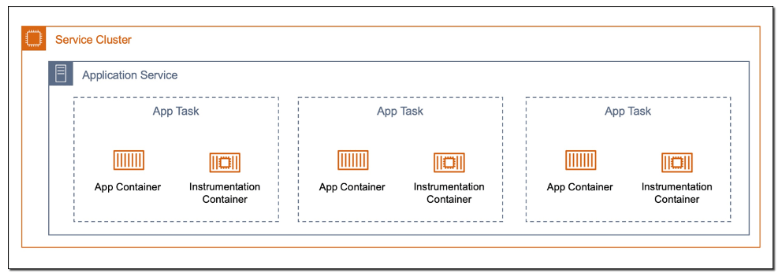

Sidecar Pattern

We can deploy ADOT in several ways, such as running it on a dedicated node or as a separate ECS service that receives telemetry from multiple apps. We can also take the sidecar approach, which cleanly separates concerns: our Orchard Core app focuses on business logic, while an ADOT sidecar running next to it in the same task handles telemetry collection and forwarding. This mirrors modern cloud-native patterns and gives us more flexibility down the road.

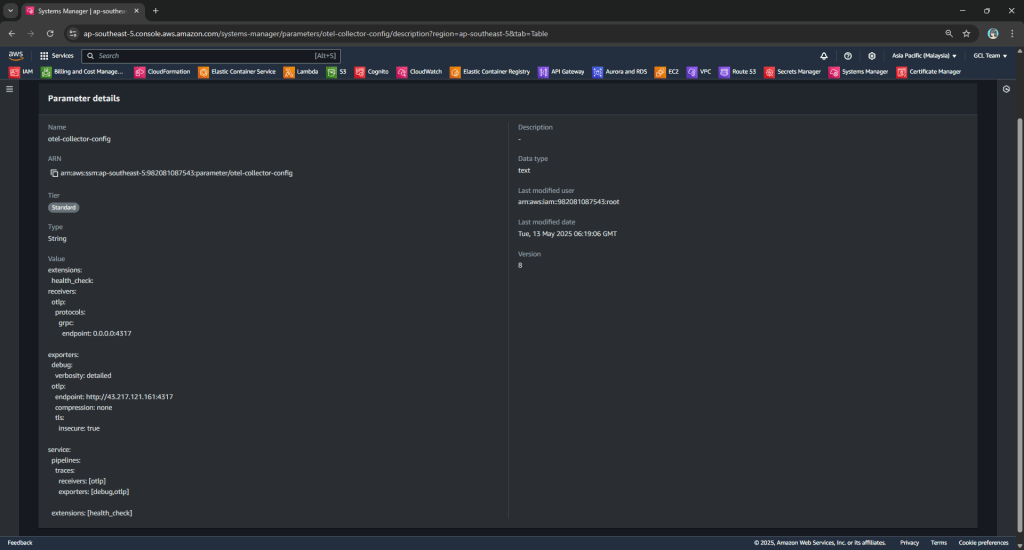

The following CloudFormation snippet shows how we deploy ADOT as a sidecar in ECS. The collector configuration is stored in AWS Systems Manager Parameter Store under /myapp/otel-collector-config and injected into the container via the AOT_CONFIG_CONTENT environment variable. This keeps our infrastructure clean, decoupled, and secure.

```yaml
ecsTaskDefinition:
  Type: AWS::ECS::TaskDefinition
  Properties:
    Family: !Ref ServiceName
    NetworkMode: awsvpc
    ExecutionRoleArn: !GetAtt ecsTaskExecutionRole.Arn
    TaskRoleArn: !GetAtt iamRole.Arn
    ContainerDefinitions:
      - Name: !Ref ServiceName
        Image: !Ref OrchardCoreImage
        # ...
      - Name: adot-collector
        Image: public.ecr.aws/aws-observability/aws-otel-collector:latest
        LogConfiguration:
          LogDriver: awslogs
          Options:
            awslogs-group: !Sub "/ecs/${ServiceName}-log-group"
            awslogs-region: !Ref AWS::Region
            awslogs-stream-prefix: adot
        Essential: false
        Cpu: 128
        Memory: 512
        HealthCheck:
          # ECS expects CMD (or CMD-SHELL) as the first element of the command
          Command: ["CMD", "/healthcheck"]
          Interval: 30
          Timeout: 5
          Retries: 3
          StartPeriod: 60
        Secrets:
          # The parameter name in this ARN must match where the collector config is stored
          - Name: AOT_CONFIG_CONTENT
            ValueFrom: !Sub "arn:${AWS::Partition}:ssm:${AWS::Region}:${AWS::AccountId}:parameter/myapp/otel-collector-config"
```

There are several interesting and important details in the CloudFormation snippet above that are worth calling out. Let’s break them down one by one.

Firstly, we choose awsvpc as the NetworkMode of the ECS task. In awsvpc mode, the ECS task receives its own ENI (Elastic Network Interface) and private IP address, which is great for network-level isolation. All containers in the task, i.e. our Orchard Core container and the ADOT sidecar, share that ENI and its network namespace, so our Orchard Core app can reach the sidecar over localhost, i.e. http://localhost:4317.

Secondly, we include a health check for the ADOT container. ECS runs the check periodically and reports the container as healthy or unhealthy. Note that because the sidecar is marked Essential: false, its health status does not affect the health of the task as a whole, so an unhealthy collector will not by itself cause ECS to replace the task; the health check mainly gives us visibility into the collector. In November 2022, Paurush Garg from AWS added the healthcheck component to a new ADOT collector release, so we only need to enable this healthcheck component in the collector configuration, which we will discuss next.

Yes, the configuration! Instead of hardcoding the ADOT configuration into the task definition, we inject it securely at runtime through the AOT_CONFIG_CONTENT secret. When the AOT_CONFIG_CONTENT environment variable is set, the ADOT collector uses its content as the configuration, overriding the config file used in the collector's entrypoint command.
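Since the configuration itself lives in Parameter Store, here is a hedged sketch of what a minimal traces-only collector configuration could look like and how it might be uploaded with the AWS CLI. It is not the exact configuration used in the OCBC project. It assumes the OTLP receiver on the standard ports, a batch processor, an otlp exporter we named otlp/tempo that sends to Tempo's gRPC port over plain text, and the health_check extension, which we assume is what the ADOT image's /healthcheck binary queries. The helper names render_collector_config and upload_collector_config are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: render a minimal ADOT collector config and store it in SSM Parameter Store
# so that ECS can inject it as AOT_CONFIG_CONTENT. Helper names are hypothetical.
set -euo pipefail

# render_collector_config TEMPO_GRPC_ENDPOINT -> prints the collector YAML to stdout
render_collector_config() {
  local tempo_endpoint="$1"
  cat <<EOF
extensions:
  health_check:          # assumed to be what /healthcheck in the ADOT image queries

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317   # Orchard Core exports here over localhost
      http:
        endpoint: 0.0.0.0:4318

processors:
  batch:

exporters:
  otlp/tempo:
    endpoint: ${tempo_endpoint}
    tls:
      insecure: true     # plain-text gRPC, matching the demo Tempo setup

service:
  extensions: [health_check]
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp/tempo]
EOF
}

# upload_collector_config PARAM_NAME TEMPO_GRPC_ENDPOINT
upload_collector_config() {
  local param_name="$1" tempo_endpoint="$2" tmp
  tmp="$(mktemp)"
  render_collector_config "$tempo_endpoint" > "$tmp"
  # file:// makes the AWS CLI read the parameter value from the rendered file
  aws ssm put-parameter \
    --name "$param_name" \
    --type String \
    --value "file://${tmp}" \
    --overwrite
  rm -f "$tmp"
}
```

For example, `upload_collector_config /myapp/otel-collector-config "<tempo-ec2-host>:4317"` stores the configuration under the name used earlier; whatever name we pick must match the parameter in the ValueFrom ARN. ECS resolves Secrets using the task execution role, so that role needs ssm:GetParameters on this parameter.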

Wrap-Up

By now, we have completed the journey of setting up Grafana Tempo on EC2, exploring how traces flow through OTLP protocols like gRPC and HTTP, and understanding why ADOT is often the better choice in production-grade observability pipelines.

With everything connected, our Orchard Core app is now able to send traces into Tempo reliably. This will give us end-to-end visibility with OpenTelemetry and AWS-native tooling.
