One of the main reasons we can easily identify objects in daily life is that our eyes can easily tell where an object's boundary is. Whenever we look at an object, we can see the edge between the object and the background behind it. This is also why some images can play tricks on our eyes and confuse our brain with edge-based optical illusions.

Sobel-Feldman Operator in Computer Vision

Similarly, if a machine is to understand what it sees, its computer vision needs edge detection. Edge detection is an image processing technique for finding the points in an image where the brightness changes sharply.

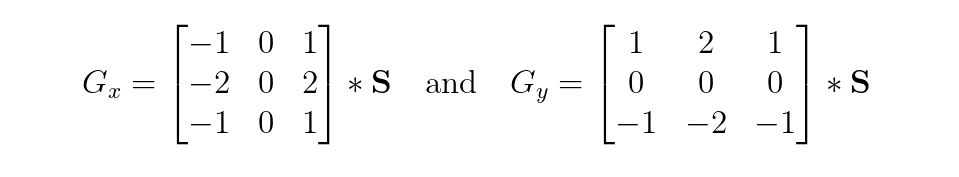

There are many methods for edge detection. One of them uses a derivative kernel known as the Sobel-Feldman Operator, which emphasises edges in a digital image. The operator convolves the image with filters in both the horizontal and vertical directions to calculate approximations of the image derivatives, which tell us the strength of the edges.
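To make the idea of an image derivative more concrete, here is a simplified 1-D sketch (not part of the original tutorial). Along a single row of grayscale values, the derivative at each pixel can be approximated by the central difference between its right and left neighbours; each row of the horizontal Sobel kernel defined below applies the same [-1, 0, 1] pattern. The class `EdgeDemo1D` and method `CentralDifference` are hypothetical names used only for illustration.

```csharp
using System;

public static class EdgeDemo1D
{
    // Approximates the derivative of a row of pixel intensities with the
    // central difference f(x + 1) - f(x - 1). The first and last pixels have
    // only one neighbour, so this sketch simply leaves them at 0.
    public static int[] CentralDifference(byte[] row)
    {
        int[] derivative = new int[row.Length];
        for (int x = 1; x < row.Length - 1; x++)
        {
            // byte - byte is promoted to int, so negative slopes are kept.
            derivative[x] = row[x + 1] - row[x - 1];
        }
        return derivative;
    }
}
```

For a row such as {10, 10, 10, 200, 200, 200}, the result is {0, 0, 190, 190, 0, 0}: the values are large only where the intensity jumps, which is exactly where the edge is.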

The Kernels

The operator uses two 3×3 kernels which are convolved with the original image to calculate approximations of the derivatives for both horizontal and vertical changes.

We define the two 3×3 kernels as follows. The first one calculates the horizontal changes.

double[,] xSobel = new double[,]
{
    { -1, 0, 1 },
    { -2, 0, 2 },
    { -1, 0, 1 }
};

Secondly, we have another 3×3 kernel for the vertical changes.

double[,] ySobel = new double[,]
{
    { 1, 2, 1 },
    { 0, 0, 0 },
    { -1, -2, -1 }
};
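To see what the two kernels respond to, the following sketch applies each of them to a single 3×3 patch of grayscale values by multiplying every weight with the pixel under it and summing the products, the same pairing the main Sobel loop later in this article uses. The class `KernelPatchDemo` and method `ApplyKernel` are hypothetical; the kernel values are copied from `xSobel` and `ySobel` above.

```csharp
using System;

public static class KernelPatchDemo
{
    // Same weights as xSobel and ySobel in the article.
    public static readonly double[,] XSobel = { { -1, 0, 1 }, { -2, 0, 2 }, { -1, 0, 1 } };
    public static readonly double[,] YSobel = { { 1, 2, 1 }, { 0, 0, 0 }, { -1, -2, -1 } };

    // Multiplies each kernel weight by the pixel at the same row and column
    // of the 3x3 patch and returns the sum of the products.
    public static double ApplyKernel(double[,] kernel, double[,] patch)
    {
        double sum = 0.0;
        for (int row = 0; row < 3; row++)
        {
            for (int col = 0; col < 3; col++)
            {
                sum += kernel[row, col] * patch[row, col];
            }
        }
        return sum;
    }
}
```

For a patch whose right column is 255 and the rest 0 (a vertical edge), the horizontal kernel gives 255 + 2 × 255 + 255 = 1020 while the vertical kernel gives 0. Rotating the edge to horizontal swaps the two results, and a flat patch gives 0 for both because each kernel's weights sum to zero.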

Loading the Image

Before we continue, we need to read the image bits into system memory. Here, we use the LockBits method to lock an existing bitmap in system memory so that it can be changed programmatically. Unlike the SetPixel method, which we used in another of our image processing projects, the Image Based CAPTCHA using Jigsaw Puzzle on Blazor, LockBits offers better performance for large-scale changes.

Suppose our image is stored in a Bitmap variable named sourceImage. We can then do the following.

using System;
using System.Drawing;
using System.Drawing.Imaging;
using System.Runtime.InteropServices;

int width = sourceImage.Width;
int height = sourceImage.Height;

//Lock source image bits into system memory
BitmapData srcData = sourceImage.LockBits(new Rectangle(0, 0, width, height), ImageLockMode.ReadOnly, PixelFormat.Format32bppArgb);

//Total size of the locked data in bytes (Stride is the length of one row in bytes)
int bytes = srcData.Stride * srcData.Height;
byte[] pixelBuffer = new byte[bytes];

//Get the address of the first pixel data
IntPtr srcScan0 = srcData.Scan0;

//Copy image data to the byte array
Marshal.Copy(srcScan0, pixelBuffer, 0, bytes);

//Unlock bits from system memory
sourceImage.UnlockBits(srcData);
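The byte array above is a flat copy of the bitmap: each row occupies `Stride` bytes, and with `PixelFormat.Format32bppArgb` each pixel takes 4 bytes, stored in memory in the order blue, green, red, alpha. The hypothetical helper below (a sketch, not part of the original code) shows how a pixel position (x, y) maps to an index in `pixelBuffer`; the Sobel loop later in this article relies on the same `y * Stride + x * 4` arithmetic.

```csharp
using System;

public static class PixelLayout
{
    // Bytes per pixel for a 32-bit ARGB bitmap.
    public const int BytesPerPixel = 4;

    // Index of the first byte (blue) of pixel (x, y) in a buffer whose rows are
    // 'stride' bytes long. Green, red and alpha follow at +1, +2 and +3.
    public static int PixelOffset(int x, int y, int stride)
    {
        return y * stride + x * BytesPerPixel;
    }
}
```

For example, in a 3-pixel-wide image with a stride of 12 bytes, pixel (1, 2) starts at index 2 × 12 + 1 × 4 = 28, so its blue, green, red and alpha bytes sit at indices 28, 29, 30 and 31.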

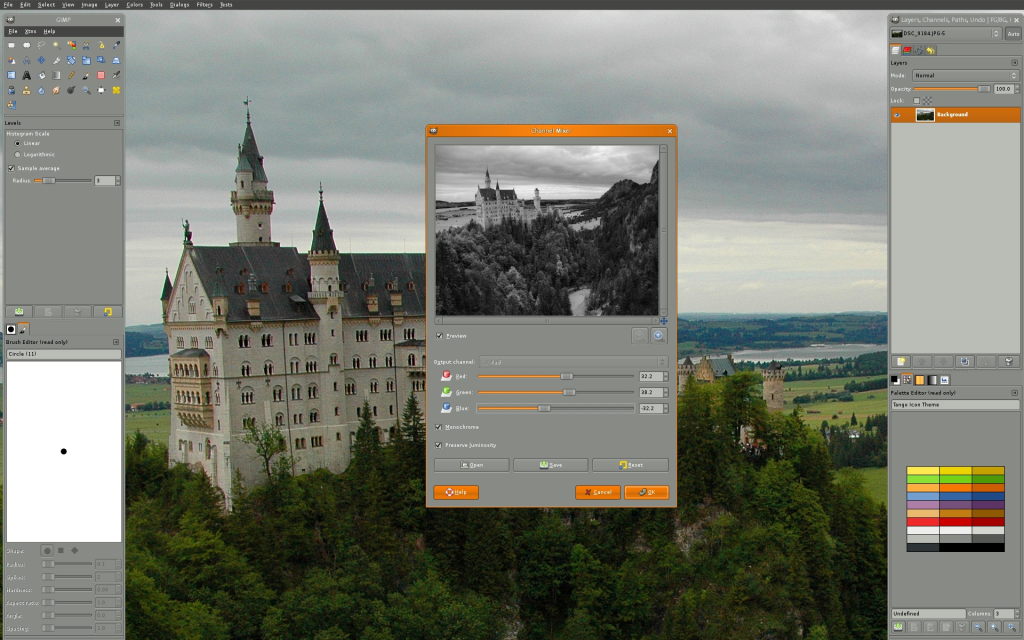

Converting to Grayscale Image

Since our purpose is to identify the edges of objects within the image, it is standard practice to convert the original image to grayscale first, which simplifies the problem by ignoring colours and other noise. Only then do we perform edge detection on the grayscale image.

However, how do we convert colour to grayscale?

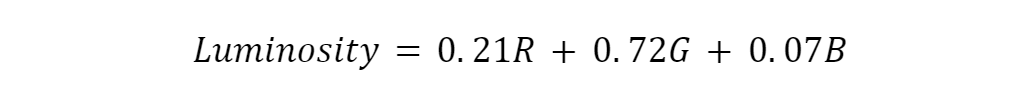

According to GIMP (GNU Image Manipulation Program), the grayscale value can be calculated from luminosity, a weighted average that accounts for human perception:

$$L = 0.21R + 0.72G + 0.07B$$

We will thus use the following code to convert the pixels of sourceImage, now in pixelBuffer, to grayscale in place.

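//Format32bppArgb stores each pixel as 4 bytes in the order Blue, Green, Red, Alpha
float rgb = 0;
for (int i = 0; i < pixelBuffer.Length; i += 4)
{
    rgb = pixelBuffer[i] * .07f;      //Blue
    rgb += pixelBuffer[i + 1] * .72f; //Green
    rgb += pixelBuffer[i + 2] * .21f; //Red

    //Write the luminosity into all three colour channels
    pixelBuffer[i] = (byte)rgb;
    pixelBuffer[i + 1] = pixelBuffer[i];
    pixelBuffer[i + 2] = pixelBuffer[i];

    //Make the pixel fully opaque
    pixelBuffer[i + 3] = 255;
}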
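Because the bytes are stored as blue, green, red, alpha, the weights must be matched to the right offsets. The sketch below isolates that single-pixel calculation so it can be checked on pure colours; `GrayscaleDemo` and `Luminosity` are hypothetical names, and the weights are the GIMP luminosity coefficients quoted above.

```csharp
using System;

public static class GrayscaleDemo
{
    // Luminosity grayscale for one pixel starting at index i of a buffer laid
    // out as Blue, Green, Red, Alpha (the in-memory order of Format32bppArgb).
    // Weights: 0.21 R + 0.72 G + 0.07 B; the cast to byte truncates.
    public static byte Luminosity(byte[] buffer, int i)
    {
        float gray = buffer[i] * .07f      // blue
                   + buffer[i + 1] * .72f  // green
                   + buffer[i + 2] * .21f; // red
        return (byte)gray;
    }
}
```

With this channel order, a pure red pixel maps to 53, a pure green pixel to 183 and a pure blue pixel to 17, reflecting how much brighter green appears to the human eye.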

Image Derivatives and Gradient Magnitude

Now we can finally calculate the approximations of the derivatives. Let S be the grayscale version of sourceImage, and let Gx and Gy be two images which, at each point, contain the horizontal and vertical derivative approximations respectively. We then have the following.

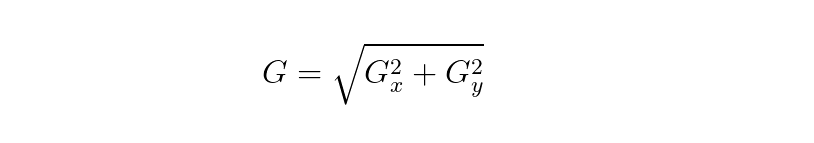
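Written out with the two kernels defined earlier, the standard formulation is as follows, where $*$ denotes 2-D convolution:

$$
G_x = \begin{bmatrix} -1 & 0 & +1 \\ -2 & 0 & +2 \\ -1 & 0 & +1 \end{bmatrix} * S,
\qquad
G_y = \begin{bmatrix} +1 & +2 & +1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix} * S
$$

The C# loop in this article slides each kernel over the image without flipping it, so per pixel it computes, with $K_x$ = xSobel and $K_y$ = ySobel indexed as [row, column]:

$$
G_x(x, y) = \sum_{j=-1}^{1} \sum_{i=-1}^{1} K_x[j+1,\, i+1]\, S(x+i,\, y+j),
\qquad
G_y(x, y) = \sum_{j=-1}^{1} \sum_{i=-1}^{1} K_y[j+1,\, i+1]\, S(x+i,\, y+j)
$$

Rotating either Sobel kernel by 180° only negates it, so this unflipped version differs from true convolution at most in sign, and the sign disappears once the gradient magnitude is taken.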

Given these estimates of the image derivatives, the gradient magnitude G at each point is computed as follows.

$$G = \sqrt{G_x^2 + G_y^2}$$

In C#, the formulae above translate to the following code. Since S is grayscale, its three colour channels hold the same value, so we only need to read one of them.

//Create variables for the per-pixel results of each kernel
double xg = 0.0;
double yg = 0.0;
double gt = 0.0;

//This is how much our center pixel is offset from the border of our kernel
//Sobel is 3x3, so the center is 1 pixel from the kernel border
int filterOffset = 1;
int calcOffset = 0;
int byteOffset = 0;

//Output buffer; border pixels that are never visited stay 0 (transparent black)
byte[] resultBuffer = new byte[bytes];

//Start with the pixel that is offset 1 from the top and 1 from the left side,
//so that the entire kernel stays on our image
for (int offsetY = filterOffset; offsetY < height - filterOffset; offsetY++)
{
    for (int offsetX = filterOffset; offsetX < width - filterOffset; offsetX++)
    {
        //reset the kernel sums to 0
        xg = yg = 0;
        gt = 0.0;

        //position of the kernel center pixel
        byteOffset = offsetY * srcData.Stride + offsetX * 4;

        //kernel calculations; the image is grayscale, so the green byte (+1)
        //holds the same value as the other colour channels
        for (int filterY = -filterOffset; filterY <= filterOffset; filterY++)
        {
            for (int filterX = -filterOffset; filterX <= filterOffset; filterX++)
            {
                calcOffset = byteOffset + filterX * 4 + filterY * srcData.Stride;
                xg += (double)(pixelBuffer[calcOffset + 1]) * xSobel[filterY + filterOffset, filterX + filterOffset];
                yg += (double)(pixelBuffer[calcOffset + 1]) * ySobel[filterY + filterOffset, filterX + filterOffset];
            }
        }

        //gradient magnitude for this pixel, clamped to the byte range
        gt = Math.Sqrt((xg * xg) + (yg * yg));
        if (gt > 255) gt = 255;
        else if (gt < 0) gt = 0;

        //set new data in the other byte array for output image data
        resultBuffer[byteOffset] = (byte)(gt);
        resultBuffer[byteOffset + 1] = (byte)(gt);
        resultBuffer[byteOffset + 2] = (byte)(gt);
        resultBuffer[byteOffset + 3] = 255;
    }
}
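For experimenting without System.Drawing, the same computation can be sketched on a plain single-channel array. This is a simplified, hypothetical variant (the class `SobelSketch` and method `GradientMagnitude` are illustrative names, not from the original tutorial): it assumes one byte per grayscale pixel with no row padding, so `y * width + x` takes the place of `offsetY * srcData.Stride + offsetX * 4`.

```csharp
using System;

public static class SobelSketch
{
    // Same weights as xSobel and ySobel in the article.
    static readonly int[,] XSobel = { { -1, 0, 1 }, { -2, 0, 2 }, { -1, 0, 1 } };
    static readonly int[,] YSobel = { { 1, 2, 1 }, { 0, 0, 0 }, { -1, -2, -1 } };

    // Computes the clamped Sobel gradient magnitude of a grayscale image stored
    // row by row (one byte per pixel). Border pixels, where the 3x3 kernel
    // would fall off the image, are left at 0.
    public static byte[] GradientMagnitude(byte[] gray, int width, int height)
    {
        if (gray.Length != width * height)
            throw new ArgumentException("Buffer size must equal width * height.");

        byte[] result = new byte[gray.Length];
        for (int y = 1; y < height - 1; y++)
        {
            for (int x = 1; x < width - 1; x++)
            {
                double gx = 0.0, gy = 0.0;
                for (int ky = -1; ky <= 1; ky++)
                {
                    for (int kx = -1; kx <= 1; kx++)
                    {
                        byte pixel = gray[(y + ky) * width + (x + kx)];
                        gx += pixel * XSobel[ky + 1, kx + 1];
                        gy += pixel * YSobel[ky + 1, kx + 1];
                    }
                }
                // Gradient magnitude, clamped to the 0-255 range of a byte.
                double g = Math.Sqrt(gx * gx + gy * gy);
                result[y * width + x] = (byte)Math.Min(g, 255.0);
            }
        }
        return result;
    }
}
```

For a 4×3 image whose left two columns are 0 and right two columns are 10, both interior pixels get Gx = 40 and Gy = 0, hence a magnitude of 40, while the border pixels stay 0. With 255 instead of 10, the raw magnitude of 1020 is clamped to 255.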

Output Image

With the resultBuffer, we can now generate the output image using the following code.

//Create new bitmap which will hold the processed data
Bitmap resultImage = new Bitmap(width, height);

//Lock bits into system memory
BitmapData resultData = resultImage.LockBits(new Rectangle(0, 0, width, height), ImageLockMode.WriteOnly, PixelFormat.Format32bppArgb);

//Copy from byte array that holds processed data to bitmap
Marshal.Copy(resultBuffer, 0, resultData.Scan0, resultBuffer.Length);

//Unlock bits from system memory
resultImage.UnlockBits(resultData);

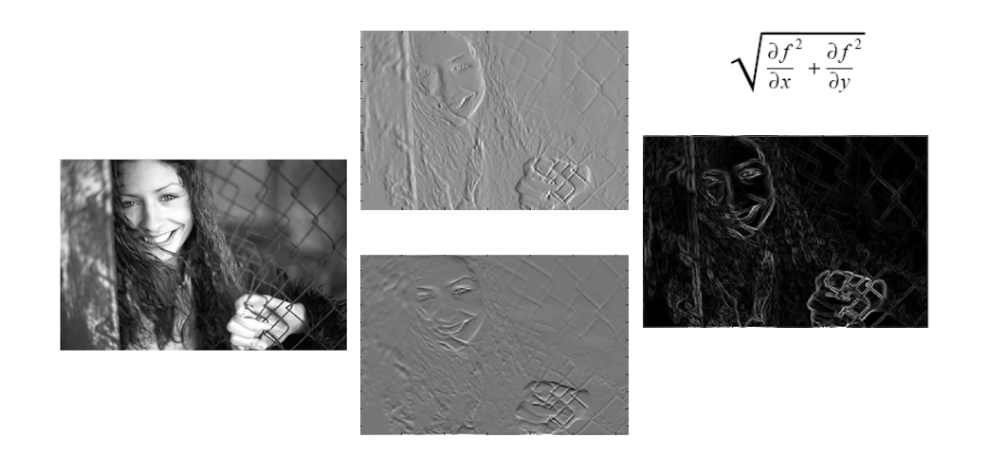

So, let’s say the image below is our sourceImage.

Then the algorithm above should return an image that contains only the detected edges, as shown below.

Special Thanks

I am still very new to image processing, so I’d like to thank Andraz Krzisnik, who has written a great C# tutorial on applying the Sobel-Feldman Operator to an image. The code above is mostly what I learned from his tutorial.

The source code above is also available on my GitHub Gist.

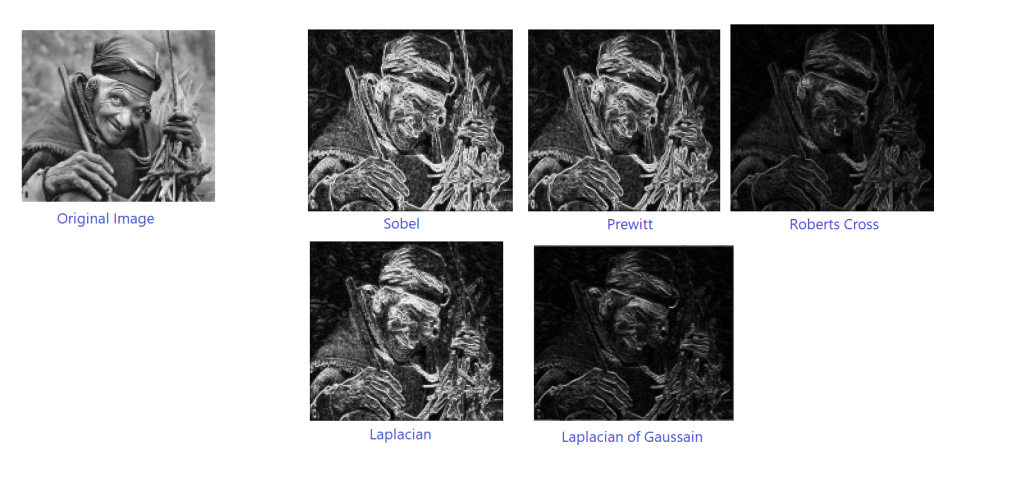

If you are interested in alternative edge detection techniques, you can refer to the paper Study and Comparison of Various Image Edge Detection Techniques.